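In Kubernetes, the Pod is the smallest schedulable unit. When a node can no longer sustain the Pods running on it, some of them may be evicted. This can happen for several reasons, most commonly because the node is short on resources or because a Pod is using more than the resources it requested.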

Causes of eviction

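- Insufficient node resources: a node's resources include CPU, memory, and storage. When memory or disk space on the node runs low, the kubelet on that node (not the scheduler) picks one or more Pods and evicts them. CPU is a compressible resource, so a CPU shortage leads to throttling rather than eviction.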

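- Exceeding requests or limits: a Pod can declare resource requests and upper limits, for example for CPU and memory. A container that goes over its memory limit is OOM-killed, and one that goes over its CPU limit is throttled. Under node pressure, however, Pods whose usage exceeds their requests are the first candidates for eviction, and a Pod that exceeds its ephemeral-storage limit is evicted as well.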

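- Image pull failure: this is often mentioned alongside eviction, but it is a different failure. If the image cannot be pulled, Kubernetes retries with back-off and the Pod shows ErrImagePull or ImagePullBackOff; it is not marked "Evicted".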
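To tell which of these causes applies, it can help to check whether the node is currently reporting resource pressure. The following is a minimal sketch, assuming `kubectl` access to the cluster; `node_pressure` is a helper name made up for this illustration, and the node name is whatever `kubectl get nodes` lists.

```shell
# Print the pressure-related conditions of one node, e.g. "MemoryPressure=True".
# node_pressure is a hypothetical helper name, not a kubectl subcommand.
node_pressure() {
  kubectl get node "$1" \
    -o jsonpath='{range .status.conditions[*]}{.type}={.status}{"\n"}{end}' |
    grep -E '^(MemoryPressure|DiskPressure|PIDPressure)='
}
```

Calling `node_pressure node-1` prints one line per condition. A value of `True` means the kubelet on that node considers the resource scarce and may start evicting Pods.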

Reproducing the problem

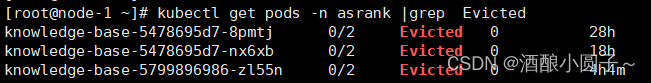

(1)查看K8S中Pod的状态,发现部分pod的STATUS显示“evicted”状态:
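When many Pods are affected, a per-namespace count gives a quicker overview than reading the list. This is a small sketch rather than part of the original procedure: `count_evicted` is a hypothetical helper that reads the output of `kubectl get pods -A --no-headers`, whose columns are assumed to be NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE.

```shell
# Count Evicted pods per namespace.
# Input: output of `kubectl get pods -A --no-headers` on stdin.
# Assumed columns: NAMESPACE NAME READY STATUS RESTARTS AGE.
count_evicted() {
  awk '$4 == "Evicted" { n[$1]++ } END { for (ns in n) print ns, n[ns] }' | sort
}
```

Typical use would be `kubectl get pods -A --no-headers | count_evicted`, which prints one `namespace count` pair per line.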

(2) Use the kubectl describe command to inspect the Pod's status and events.

kubectl describe pod <pod-name> -n <namespace-name>

kubectl describe pod knowledge-base-5799896986-zl55n -n asrank

[root@node-1 ~]# kubectl describe pod knowledge-base-5799896986-zl55n -n asrank

Name: knowledge-base-5799896986-zl55n

Namespace: asrank

Priority: 0

Node: node-1/

Start Time: Fri, 29 Mar 2024 11:12:16 +0800

Labels: app=knowledge-base

istio.io/rev=default

pod-template-hash=5799896986

security.istio.io/tlsMode=istio

service.istio.io/canonical-name=knowledge-base

service.istio.io/canonical-revision=latest

Annotations: kubectl.kubernetes.io/default-container: knowledge-base

kubectl.kubernetes.io/default-logs-container: knowledge-base

prometheus.io/path: /stats/prometheus

prometheus.io/port: 15020

prometheus.io/scrape: true

sidecar.istio.io/status: {"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-data","istio-podinfo","istiod-ca-cert"],"ima...

Status: Failed

Reason: Evicted

Message: The node was low on resource: memory. Container knowledge-base was using 43775192Ki, which exceeds its request of 5000Mi.

IP:

IPs: <none>

Controlled By: ReplicaSet/knowledge-base-5799896986

Init Containers:

istio-init:

Image: hub.pmlabs.com.cn/sail/istio/proxyv2:1.10.6

Port: <none>

Host Port: <none>

Args:

istio-iptables

-p

15001

-z

15006

-u

1337

-m

REDIRECT

-i

*

-x

-b

*

-d

15090,15021,15020

Limits:

cpu: 2

memory: 1Gi

Requests:

cpu: 100m

memory: 128Mi

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-cfzc5 (ro)

Containers:

knowledge-base:

Image: hub.pmlabs.com.cn/asrank/knowledge-base:1.01.201

Port: 9303/TCP

Host Port: 0/TCP

Limits:

cpu: 24

memory: 48Gi

Requests:

cpu: 1

memory: 5000Mi

Environment:

PROFILE: prod

MYSQL_PASSWORD: <set to the key 'MYSQL_ROOT_PASSWORD' in secret 'mysql-root-password'> Optional: false

Mounts:

/data/ from datasets (rw)

/etc/localtime from date-config (rw)

/log/ from app-log (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-cfzc5 (ro)

istio-proxy:

Image: hub.pmlabs.com.cn/sail/istio/proxyv2:1.10.6

Port: 15090/TCP

Host Port: 0/TCP

Args:

proxy

sidecar

--domain

$(POD_NAMESPACE).svc.cluster.local

--serviceCluster

knowledge-base.$(POD_NAMESPACE)

--proxyLogLevel=warning

--proxyComponentLogLevel=misc:error

--log_output_level=default:info

--concurrency

2

Limits:

cpu: 2

memory: 1Gi

Requests:

cpu: 100m

memory: 128Mi

Readiness: http-get http://:15021/healthz/ready delay=1s timeout=3s period=2s #success=1 #failure=30

Environment:

JWT_POLICY: first-party-jwt

PILOT_CERT_PROVIDER: istiod

CA_ADDR: istiod.istio-system.svc:15012

POD_NAME: knowledge-base-5799896986-zl55n (v1:metadata.name)

POD_NAMESPACE: asrank (v1:metadata.namespace)

INSTANCE_IP: (v1:status.podIP)

SERVICE_ACCOUNT: (v1:spec.serviceAccountName)

HOST_IP: (v1:status.hostIP)

CANONICAL_SERVICE: (v1:metadata.labels['service.istio.io/canonical-name'])

CANONICAL_REVISION: (v1:metadata.labels['service.istio.io/canonical-revision'])

PROXY_CONFIG: {}

ISTIO_META_POD_PORTS: [{"containerPort":9303,"protocol":"TCP"}]

ISTIO_META_APP_CONTAINERS: knowledge-base

ISTIO_META_CLUSTER_ID: Kubernetes

ISTIO_META_INTERCEPTION_MODE: REDIRECT

ISTIO_META_WORKLOAD_NAME: knowledge-base

ISTIO_META_OWNER: kubernetes://apis/apps/v1/namespaces/asrank/deployments/knowledge-base

ISTIO_META_MESH_ID: cluster.local

TRUST_DOMAIN: cluster.local

Mounts:

/etc/istio/pod from istio-podinfo (rw)

/etc/istio/proxy from istio-envoy (rw)

/var/lib/istio/data from istio-data (rw)

/var/run/secrets/istio from istiod-ca-cert (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-cfzc5 (ro)

Volumes:

istio-envoy:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: <unset>

istio-data:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

istio-podinfo:

Type: DownwardAPI (a volume populated by information about the pod)

Items:

metadata.labels -> labels

metadata.annotations -> annotations

limits.cpu -> cpu-limit

requests.cpu -> cpu-request

istiod-ca-cert:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: istio-ca-root-cert

Optional: false

date-config:

Type: HostPath (bare host directory volume)

Path: /etc/localtime

HostPathType:

datasets:

Type: HostPath (bare host directory volume)

Path: /var/asrank/knowledge-base/data

HostPathType:

app-log:

Type: HostPath (bare host directory volume)

Path: /var/log/app/asrank/knowledge-base

HostPathType:

default-token-cfzc5:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-cfzc5

Optional: false

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 2s

node.kubernetes.io/unreachable:NoExecute op=Exists for 2s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning Evicted 59m kubelet The node was low on resource: memory. Container knowledge-base was using 43775192Ki, which exceeds its request of 5000Mi.

Normal Killing 59m kubelet Stopping container knowledge-base

Normal Killing 59m kubelet Stopping container istio-proxy

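The Events section shows what happened: the node ran low on memory while the knowledge-base container was using 43775192Ki (about 41.7 GiB), far more than the 5000Mi requested for it in the Deployment. The kubelet therefore selected this Pod for eviction and stopped its containers, and the Pod ended up in the Evicted state.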
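The eviction message mixes units (Ki for usage, Mi for the request), which makes the gap harder to judge at a glance. As a small worked example, the two helpers below convert both figures to GiB; `ki_to_gib` and `mi_to_gib` are names invented here, and the only inputs are the two numbers from the message above.

```shell
# Convert a Ki quantity (as printed in the eviction message) to GiB, one decimal.
ki_to_gib() {
  awk -v ki="$1" 'BEGIN { printf "%.1f\n", ki / 1024 / 1024 }'
}

# Convert a Mi quantity to GiB, one decimal.
mi_to_gib() {
  awk -v mi="$1" 'BEGIN { printf "%.1f\n", mi / 1024 }'
}

ki_to_gib 43775192   # usage reported in the event   -> 41.7
mi_to_gib 5000       # memory request of the container -> 4.9
```

The container was therefore using roughly 41.7 GiB against a request of about 4.9 GiB, still below its 48Gi limit, which is why it was evicted under node pressure rather than OOM-killed for exceeding its limit.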

Solutions

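- Analyze the Pod's resource usage: check the memory, CPU, and disk usage of the evicted Pod. The kubectl describe pod <pod_name> command shows the Pod's status and events.

- Adjust resource requests and limits: set them according to actual needs, for example by raising the memory or CPU values. Change the resources in the YAML file (for a Pod managed by a Deployment, as here, that is the Deployment's manifest) and apply it with kubectl apply -f <pod_yaml_file>.

- Scale out nodes: if every node in the cluster is short on resources, consider adding nodes to provide more capacity, using the cloud provider's management console or API.

- Optimize the application: reduce its resource usage, for example by fixing memory leaks or optimizing CPU use.

- Use priority and preemption: assign priorities to Pods so that, when resources are tight, lower-priority Pods are evicted first. This is set with the priorityClassName field in the Pod's YAML.

- Bulk cleanup: evicted Pods are not removed right away, so delete them in bulk once the cause has been dealt with, as described in step (4) below.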
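For the first item in the list above, current usage can also be read from `kubectl top`, provided a metrics server is installed in the cluster. The sketch below is an illustration only: `over_mem_mi` is a hypothetical helper, and it assumes the output of `kubectl top pod --containers --no-headers` has the columns POD, NAME, CPU(cores), MEMORY(bytes), with memory printed in Mi.

```shell
# Print containers whose memory usage is above a threshold given in Mi.
# Input: output of `kubectl top pod --containers --no-headers` on stdin.
# Assumed columns: POD NAME CPU(cores) MEMORY(bytes), memory like "42749Mi".
over_mem_mi() {
  awk -v limit="$1" '{
    mem = $4
    sub(/Mi$/, "", mem)            # strip the unit suffix
    if (mem + 0 > limit) print $1, $2, $4
  }'
}
```

For example, `kubectl top pod -n <namespace-name> --containers --no-headers | over_mem_mi 5000` would list the containers currently using more than 5000Mi, which is the request of the evicted container in this incident.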

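Based on the analysis above, this incident was caused by insufficient memory on the node. Adjust the resource settings to match what the application actually needs: edit them in the YAML file, then run kubectl apply -f <pod_yaml_file> to apply the change.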
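If editing the YAML file is inconvenient, `kubectl set resources` can make the same change directly on the Deployment. The following is a hedged sketch, not the author's procedure: `set_memory` is a hypothetical wrapper, and all five arguments (namespace, Deployment, container, request, limit) are placeholders to fill in from your own measurements.

```shell
# Set the memory request and limit of one container in a Deployment.
# set_memory is a hypothetical helper; every argument is a placeholder.
set_memory() {
  ns=$1; deploy=$2; container=$3; request=$4; limit=$5
  kubectl set resources deployment "$deploy" -n "$ns" \
    --containers="$container" \
    --requests="memory=$request" \
    --limits="memory=$limit"
}
```

A call would look like `set_memory <namespace-name> <deployment-name> <container-name> <request> <limit>`. Because this changes the Pod template, the Deployment replaces its Pods with new ones that carry the updated values.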

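If k9s is available, the same change can be made interactively:

(1) In k9s, type :deployments

(2) Select the corresponding Deployment and press e to enter edit mode

(3) Edit the resources section so that it covers what the program needs at run time. For example: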

requests:

cpu: 10

memory: 5Gi

limits:

cpu: 24

memory: 48Gi

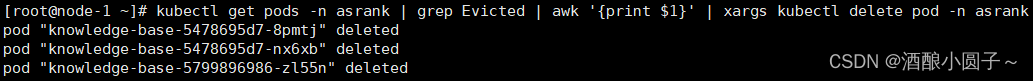
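After saving the edit, it is worth confirming that the Deployment actually carries the new values. A minimal sketch, assuming `kubectl` access; `show_memory` is a hypothetical helper that prints each container's memory request and limit from the Deployment's Pod template.

```shell
# Print "container<TAB>memory request<TAB>memory limit" for every container
# in a Deployment's pod template. show_memory is a hypothetical helper name.
show_memory() {
  kubectl get deployment "$2" -n "$1" \
    -o jsonpath='{range .spec.template.spec.containers[*]}{.name}{"\t"}{.resources.requests.memory}{"\t"}{.resources.limits.memory}{"\n"}{end}'
}
```

Run it as `show_memory <namespace-name> <deployment-name>`. To wait for the replacement Pods to come up, `kubectl rollout status deployment/<deployment-name> -n <namespace-name>` can be used afterwards.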

(4) Clean up the Pods

After confirming that the workload is running normally, delete the evicted Pods.

# List the evicted Pods

kubectl get pods -n <namespace-name> | grep Evicted

# Delete them in bulk

kubectl get pods -n <namespace-name> | grep Evicted | awk '{print $1}' | xargs kubectl delete pod -n <namespace-name>
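The grep-based pipeline matches "Evicted" anywhere in a line. A slightly stricter variant, offered here as a sketch, compares the STATUS column exactly; `evicted_names` is a hypothetical helper, and it assumes the single-namespace column layout NAME, READY, STATUS, RESTARTS, AGE.

```shell
# Print the names of pods whose STATUS column is exactly "Evicted".
# Input: output of `kubectl get pods -n <namespace-name> --no-headers` on stdin.
# Assumed columns (without -A): NAME READY STATUS RESTARTS AGE.
evicted_names() {
  awk '$3 == "Evicted" { print $1 }'
}
```

It slots into the same pipeline: `kubectl get pods -n <namespace-name> --no-headers | evicted_names | xargs -r kubectl delete pod -n <namespace-name>`. The `-r` option (GNU xargs) skips the delete command entirely when no evicted Pods are found.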

Copyright belongs to the original author, 酒酿小圆子~. In case of infringement, please contact us for removal.