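This afternoon my remote DataGrip connection to Hive suddenly dropped, and every attempt to reconnect failed with "connection refused".

I took a look at the hiveserver2 log: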

2022-08-08 15:41:37,407 Log4j2-TF-2-AsyncLogger[AsyncContext@2c8d66b2]-1 ERROR Unable to invoke factory method in class org.apache.hadoop.hive.ql.log.HushableRandomAccessFileAppender for element HushableMutableRandomAccess: java.lang.OutOfMemoryError: Java heap space java.lang.reflect.InvocationTargetException

...

2022-08-08 15:41:37,783 Log4j2-TF-2-AsyncLogger[AsyncContext@2c8d66b2]-1 ERROR Unable to create Appender of type HushableMutableRandomAccess

2022-08-08 15:41:40,031 Log4j2-TF-2-AsyncLogger[AsyncContext@2c8d66b2]-1 ERROR Unable to invoke factory method in class org.apache.hadoop.hive.ql.log.HushableRandomAccessFileAppender for element HushableMutableRandomAccess: java.lang.OutOfMemoryError: GC overhead limit exceeded java.lang.reflect.InvocationTargetException

...

2022-08-08 15:41:40,726 Log4j2-TF-2-AsyncLogger[AsyncContext@2c8d66b2]-1 ERROR Unable to create Appender of type HushableMutableRandomAccess

AsyncLogger error handling event seq=19835, value='[ERROR calling class org.apache.logging.log4j.core.async.RingBufferLogEvent.toString(): java.lang.NullPointerException]':

java.lang.OutOfMemoryError: GC overhead limit exceeded

2022-08-08 15:41:42,918 Log4j2-TF-2-AsyncLogger[AsyncContext@2c8d66b2]-1 ERROR Unable to invoke factory method in class org.apache.hadoop.hive.ql.log.HushableRandomAccessFileAppender for element HushableMutableRandomAccess: java.lang.OutOfMemoryError: Java heap space java.lang.reflect.InvocationTargetException

...

Caused by: java.lang.OutOfMemoryError: Java heap space

...

2022-08-08 15:41:43,285 Log4j2-TF-2-AsyncLogger[AsyncContext@2c8d66b2]-1 ERROR Unable to create Appender of type HushableMutableRandomAccess

AsyncLogger error handling event seq=19841, value='[ERROR calling class org.apache.logging.log4j.core.async.RingBufferLogEvent.toString(): java.lang.NullPointerException]':

java.lang.OutOfMemoryError: GC overhead limit exceeded

Exception in thread "HiveServer2-Handler-Pool: Thread-252" java.lang.OutOfMemoryError: GC overhead limit exceeded

...

This is clearly an OutOfMemoryError problem: the hiveserver2 JVM has run out of heap.
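To get a quick feel for how often each variant shows up, a small helper like the one below can tally them. This is only a sketch: the function name `summarize_oom` and the log path in the usage comment are placeholders of mine, not part of the original setup.

```shell
#!/usr/bin/env bash
# Tally the OutOfMemoryError variants found in a log file.
# Usage (path is a placeholder): summarize_oom /path/to/hiveserver2.log
summarize_oom() {
  local log_file="$1"
  # -o prints only the matched text, so every hit becomes one line to count.
  grep -oE 'OutOfMemoryError: (Java heap space|GC overhead limit exceeded)' "$log_file" \
    | sort | uniq -c | sort -rn
}
```

Both variants point at the same thing: "Java heap space" means an allocation could not be satisfied, and "GC overhead limit exceeded" means the JVM was spending almost all of its time collecting garbage while reclaiming very little.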

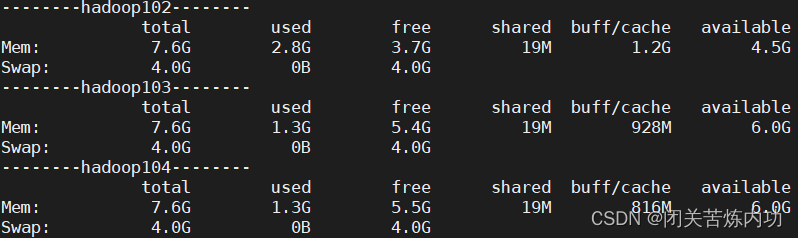

First, I checked memory usage on the cluster VMs.

Nothing looked wrong there; usage was within limits.
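Worth noting: a healthy host does not rule out a heap problem, because the JVM heap is capped by its own maximum (`-Xmx`) no matter how much memory the VM still has free. For the host-side check itself, here is a minimal sketch assuming a Linux machine whose `/proc/meminfo` has a `MemAvailable` field. The function name `mem_used_percent` is made up for illustration.

```shell
#!/usr/bin/env bash
# Print the percentage of memory in use (integer, truncated), computed
# from a /proc/meminfo-style file. Defaults to the real /proc/meminfo.
mem_used_percent() {
  local meminfo="${1:-/proc/meminfo}"
  awk '/^MemTotal:/     { total = $2 }
       /^MemAvailable:/ { avail = $2 }
       END { if (total > 0) printf "%d\n", (total - avail) * 100 / total }' "$meminfo"
}
```

Running `mem_used_percent` with no argument on each node gives a rough number to compare against whatever threshold you consider "over the limit"; `free -h` shows the same information in a friendlier table.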

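An expert suggested that the memory configuration was to blame.

I took a look, and sure enough, the heap size had never been configured.

No time like the present, so let's get to it.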

1. Change the heap size

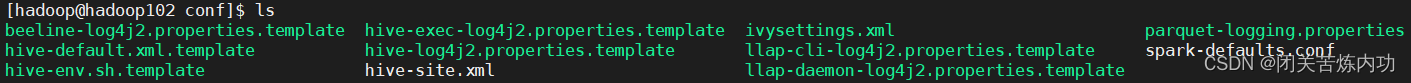

cd /datafs/module/hive/conf/

cp hive-env.sh.template hive-env.sh

vim hive-env.sh

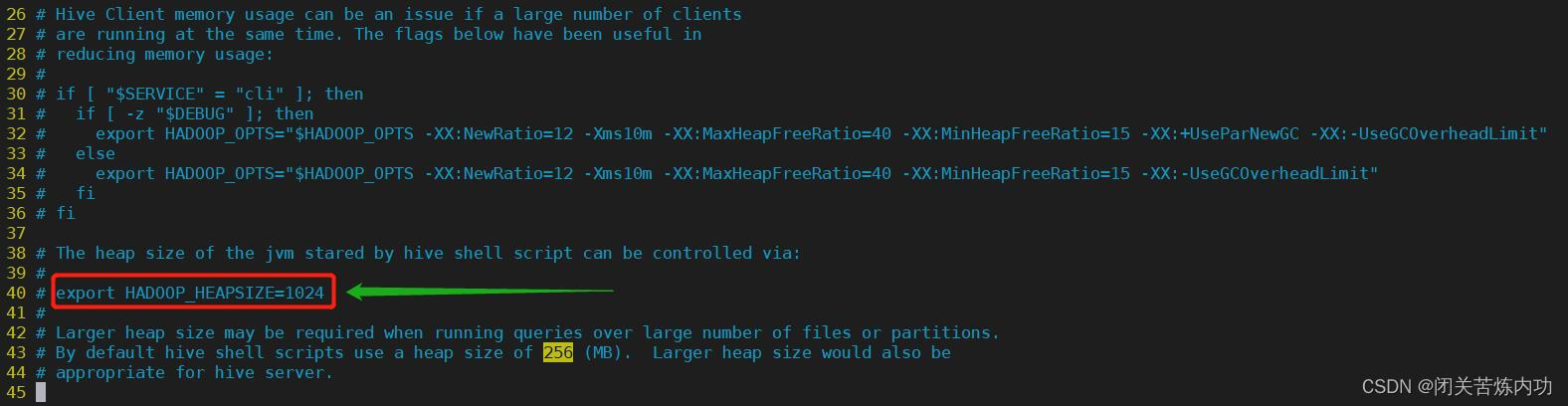

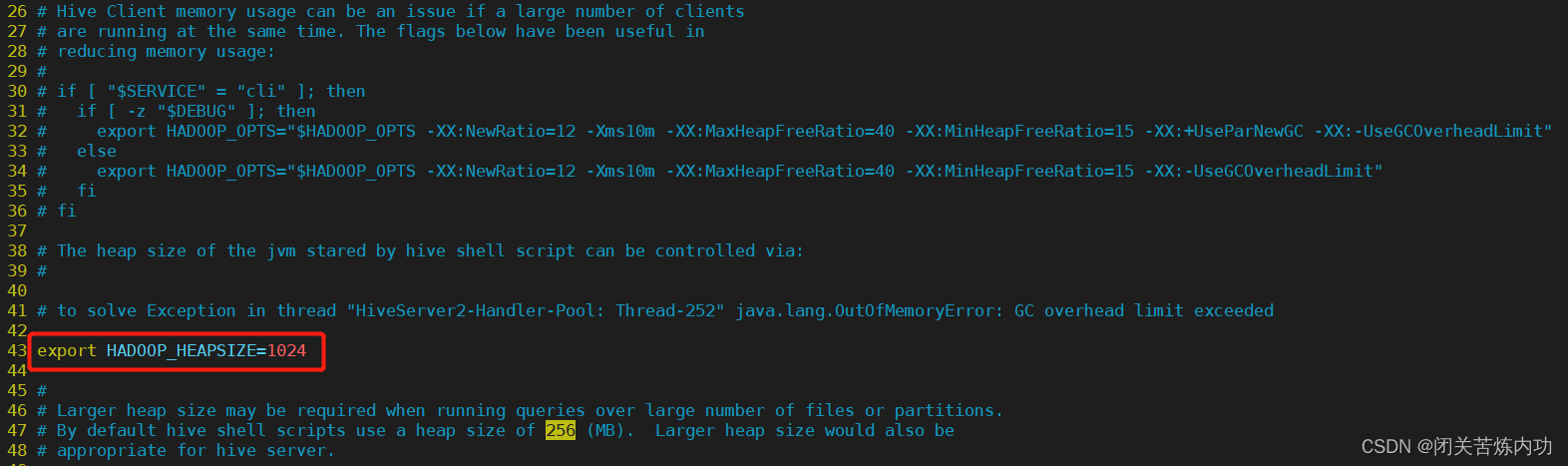

Add the following line (the value is in MB):

export HADOOP_HEAPSIZE=1024

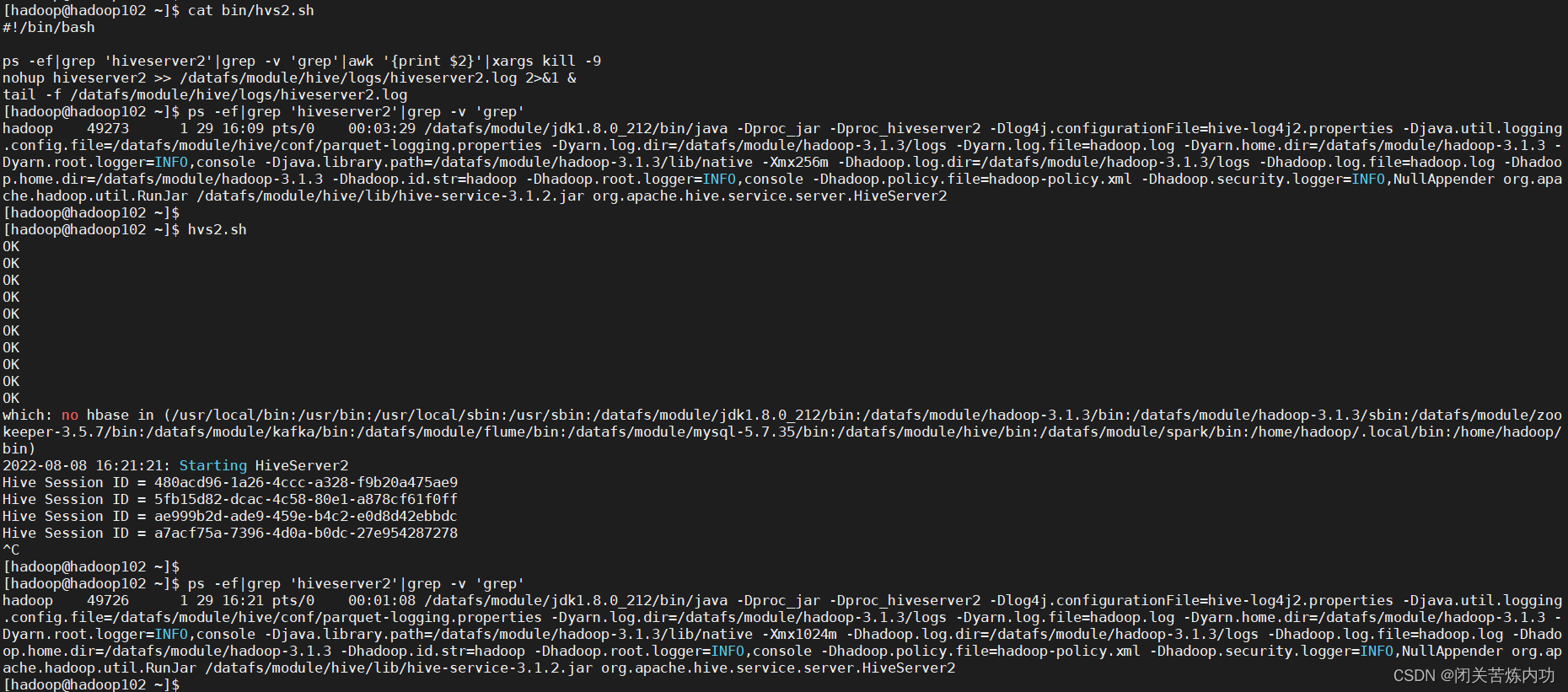
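If you would rather not edit the file by hand, the same change can be scripted. The helper below is a sketch, and `set_hive_heapsize` is a name I made up. It replaces an active `export HADOOP_HEAPSIZE=` line if one exists and appends one otherwise, so running it twice does not leave duplicate entries. Commented-out lines from the template are left alone.

```shell
#!/usr/bin/env bash
# Set HADOOP_HEAPSIZE (in MB) in a hive-env.sh file, idempotently.
# Usage: set_hive_heapsize /datafs/module/hive/conf/hive-env.sh 1024
set_hive_heapsize() {
  local env_file="$1" size_mb="$2"
  if grep -qE '^[[:space:]]*export HADOOP_HEAPSIZE=' "$env_file"; then
    # An active setting exists: rewrite it in place, keeping a .bak copy.
    sed -i.bak -E \
      "s|^[[:space:]]*export HADOOP_HEAPSIZE=.*|export HADOOP_HEAPSIZE=${size_mb}|" \
      "$env_file"
  else
    # No active setting yet: append one.
    echo "export HADOOP_HEAPSIZE=${size_mb}" >> "$env_file"
  fi
}
```

Note that the `cp hive-env.sh.template hive-env.sh` step from earlier overwrites any existing `hive-env.sh`, so it only makes sense when the file does not exist yet, as was the case here.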

2. Restart hiveserver2

My script for starting hiveserver2 in the background:

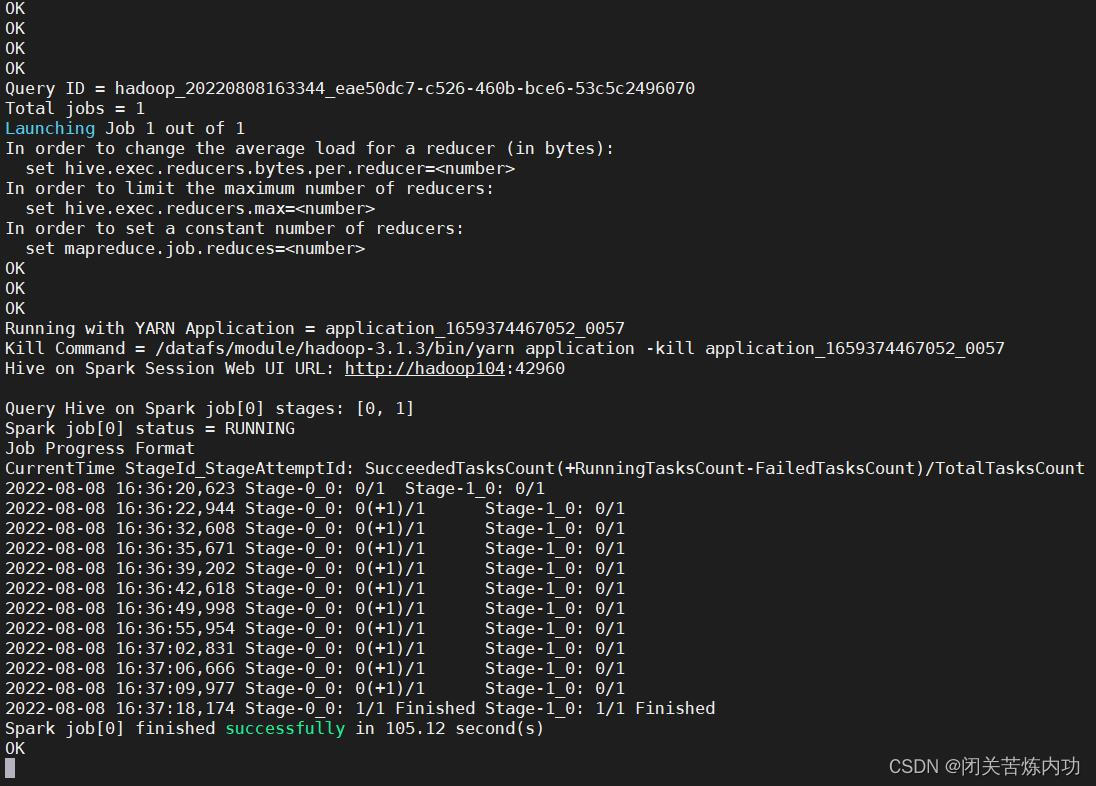
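The actual script is in the screenshot. As a rough idea of what such a script might boil down to, here is a sketch that assumes `hive` is on the `PATH`. The function name `start_hiveserver2` and the default log directory are placeholders of mine. It only covers the start half of a restart; stopping the old process first is left to you.

```shell
#!/usr/bin/env bash
# Start hiveserver2 in the background and print its PID.
# The default log directory is a placeholder; pass your own as $1.
start_hiveserver2() {
  local log_dir="${1:-/tmp/hiveserver2-logs}"
  mkdir -p "$log_dir"
  # nohup keeps the process alive after the shell exits;
  # stdout and stderr both go to one file.
  nohup hive --service hiveserver2 > "$log_dir/hiveserver2.out" 2>&1 &
  echo "$!"
}
```

Because `hive-env.sh` is read when the service starts, the new heap size only takes effect after this restart.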

3. DataGrip can connect to Hive again, and SQL runs successfully with no errors

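So if hiveserver2 hits an OutOfMemoryError again, here is how we can approach it:

a. Edit conf/hive-env.sh under the Hive directory and raise the heap size by increasing HADOOP_HEAPSIZE, for example:

export HADOOP_HEAPSIZE=1024

b. Give the VM more memory

c. Move to a physical machine with more memory

d. Optimize the SQL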
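For option a, it is worth confirming that the running process actually picked up the new heap size. One way, sketched below, is to pull the `-Xmx` flag out of the process command line. `extract_xmx` is a hypothetical helper name. The assumption that HADOOP_HEAPSIZE=1024 ends up as `-Xmx1024m` holds for the Hadoop launcher scripts I am aware of, but check your own version.

```shell
#!/usr/bin/env bash
# Read a Java command line on stdin and print its last -Xmx flag.
# If a flag is given more than once, the last one is the one that counts.
extract_xmx() {
  grep -oE -- '-Xmx[0-9]+[kKmMgG]?' | tail -n 1
}

# Usage: the [H] trick stops grep from matching its own process.
#   ps -ef | grep '[H]iveServer2' | extract_xmx
```

If the output still shows the old value, the service was probably not restarted, or the edited `hive-env.sh` is not the one being loaded.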

To be updated…

See you next time, bye!

Copyright belongs to the original author, 闭关苦炼内功. In case of infringement, please contact us and we will remove it.