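Cursor AI IDE: A Developer Tool

Cursor is an AI-assisted code editor. According to the author it is backed by GPT-3.5 or GPT-4, and at the time of writing it could be used directly from mainland China without a VPN.

Key point: at the time of writing it is free and does not require logging in to an account.

Supported platforms: Windows, Linux, macOS

Supported languages: Java, PHP, HTML, JavaScript, Python, Vue, Go, CSS, C, and others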

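Using Cursor

Usage is simple and comes down to two shortcuts:

- Ctrl+K: writes the generated output directly into the file.

- Ctrl+L: shows the generated output in the right-hand panel (nothing is written to the file). It works like a chat assistant that answers questions based on context.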

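Ctrl+K

Ctrl + K: after you enter a prompt, the generated answer is written directly into the document.

- With nothing selected, content is written starting at the cursor position in the file.

- With content (code) selected, the edit is applied within the selection.

Manually create a file named main.html:

# Shortcut

Ctrl + K

Input: write a small game in HTML

# No game name or type was specified, so Cursor generates an arbitrary small game.

Result: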

<!DOCTYPE html>
<html>
<head>
  <title>Mini Game</title>
</head>
<body>
  <h1>Welcome to the mini game</h1>
  <p>Rules: click the button below and see how many points you can score!</p>
  <button onclick="addScore()">Click to score</button>
  <p>Score: <span id="score">0</span></p>
  <script>
    var score = 0;

    function addScore() {
      score++;
      document.getElementById("score").innerHTML = score;
    }
  </script>
</body>
</html>

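Ctrl+L

Ctrl + L: after you enter a prompt, the generated answer is shown in the right-hand panel, and you can keep asking follow-up questions in context. Unlike Ctrl + K, the answer is not written into the document.

- With nothing selected, the answer is based on the whole file plus your question and is shown in the right-hand panel.

- With content (code) selected, the answer is based on the selection plus your question and is shown in the right-hand panel.

# Shortcut

Ctrl + L

Input: write a small game in HTML

# No game name or type was specified, so Cursor generates an arbitrary small game in the panel.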

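Cursor application examples

Supports Java, PHP, HTML, JavaScript, Python, Vue, Go, CSS, C, and others.

Features tried so far:

- Generating a file upload Controller

- Adding Swagger descriptions

- Adding method implementations

- Generating method comments and examples

- Creating an interface and its implementation class

- Completing the implementation class code

- Optimizing code

- Fixing code

- Finding out what is wrong with a piece of code

- Generating a file management interface and its implementation classes (multi-step; local, MinIO, MongoDB, and other implementations)

- Generating front-end and back-end code for chunked upload (multi-step)

- HTML code generation

- Vue code generation

- Generating Neo4j graph database operations

- … …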

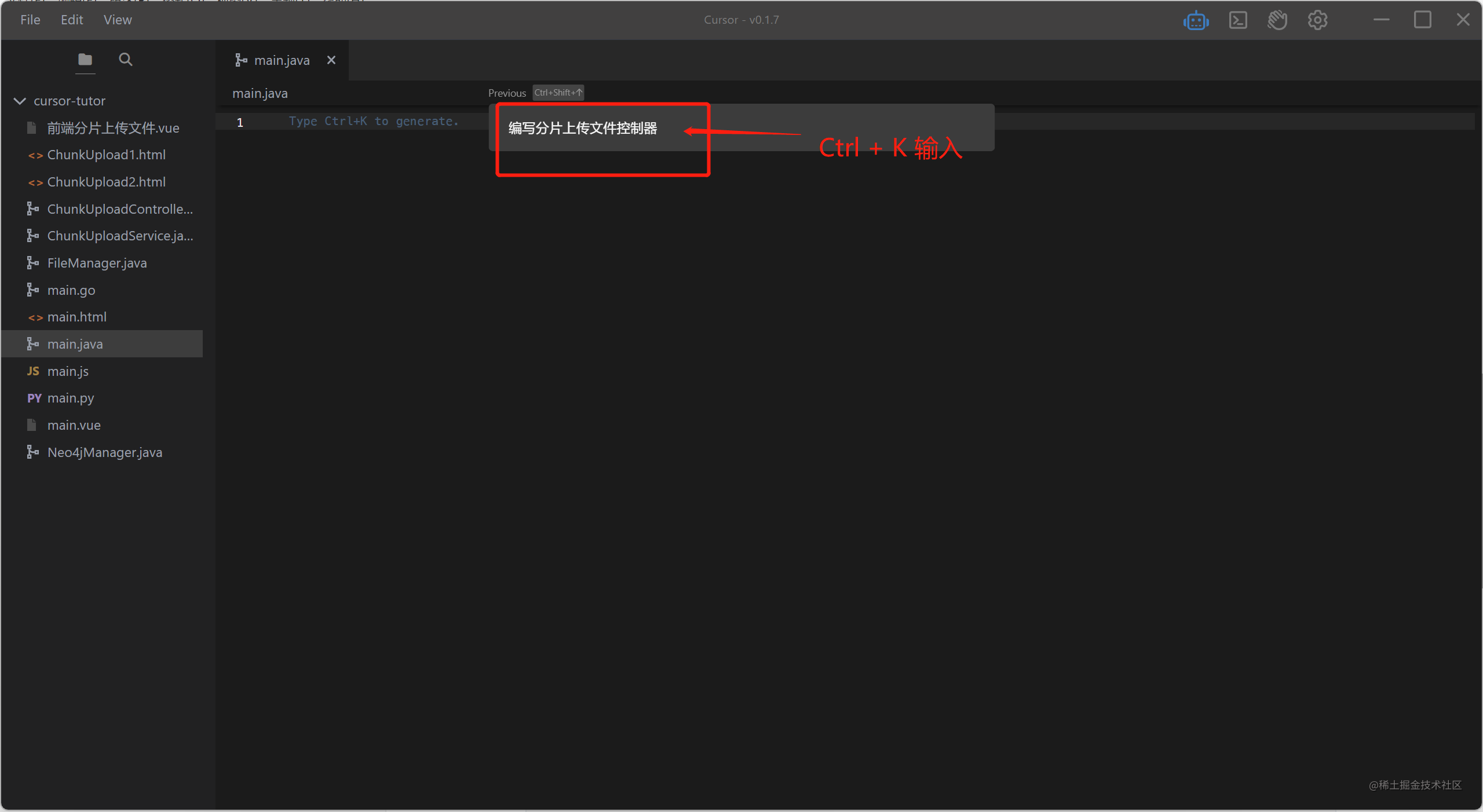

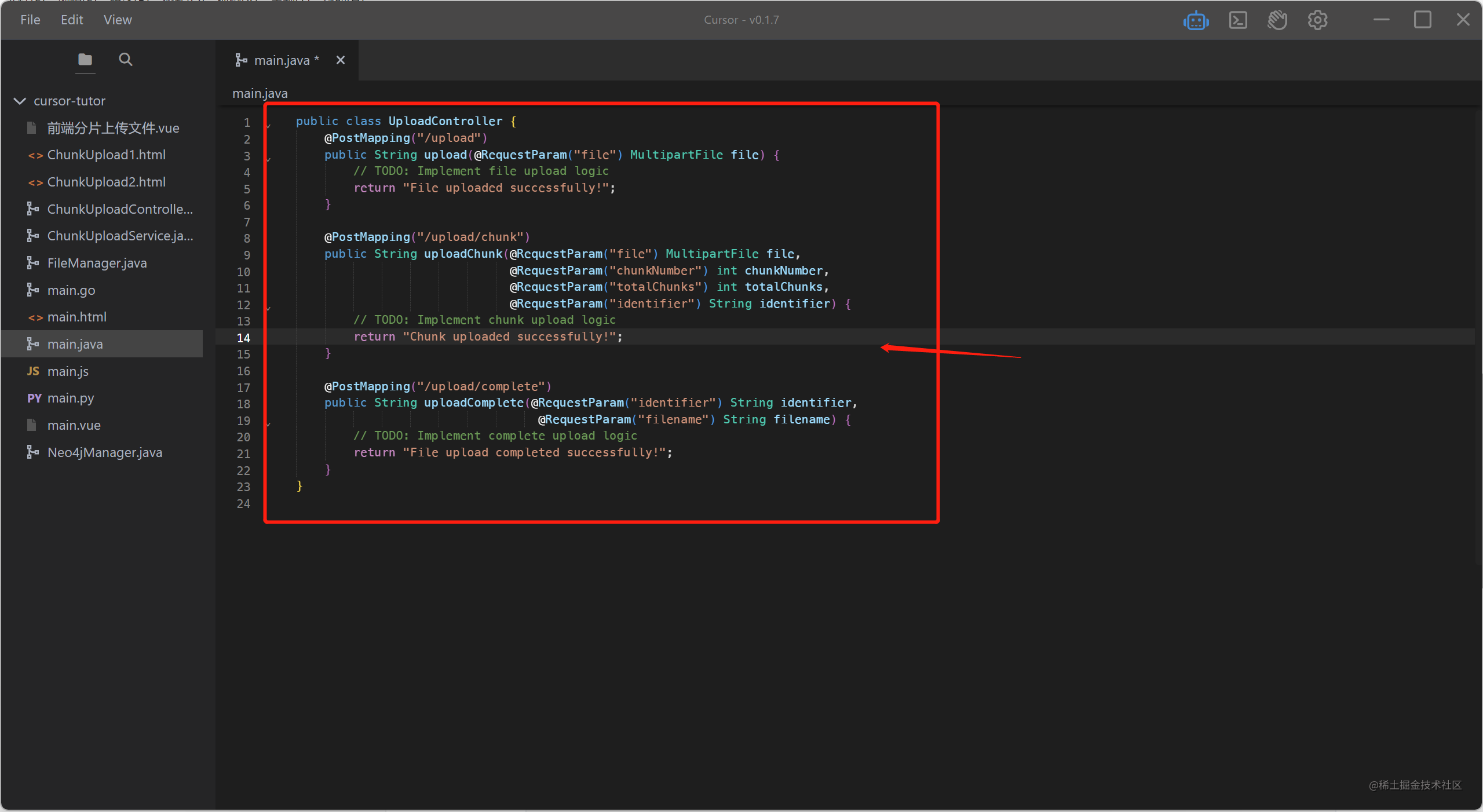

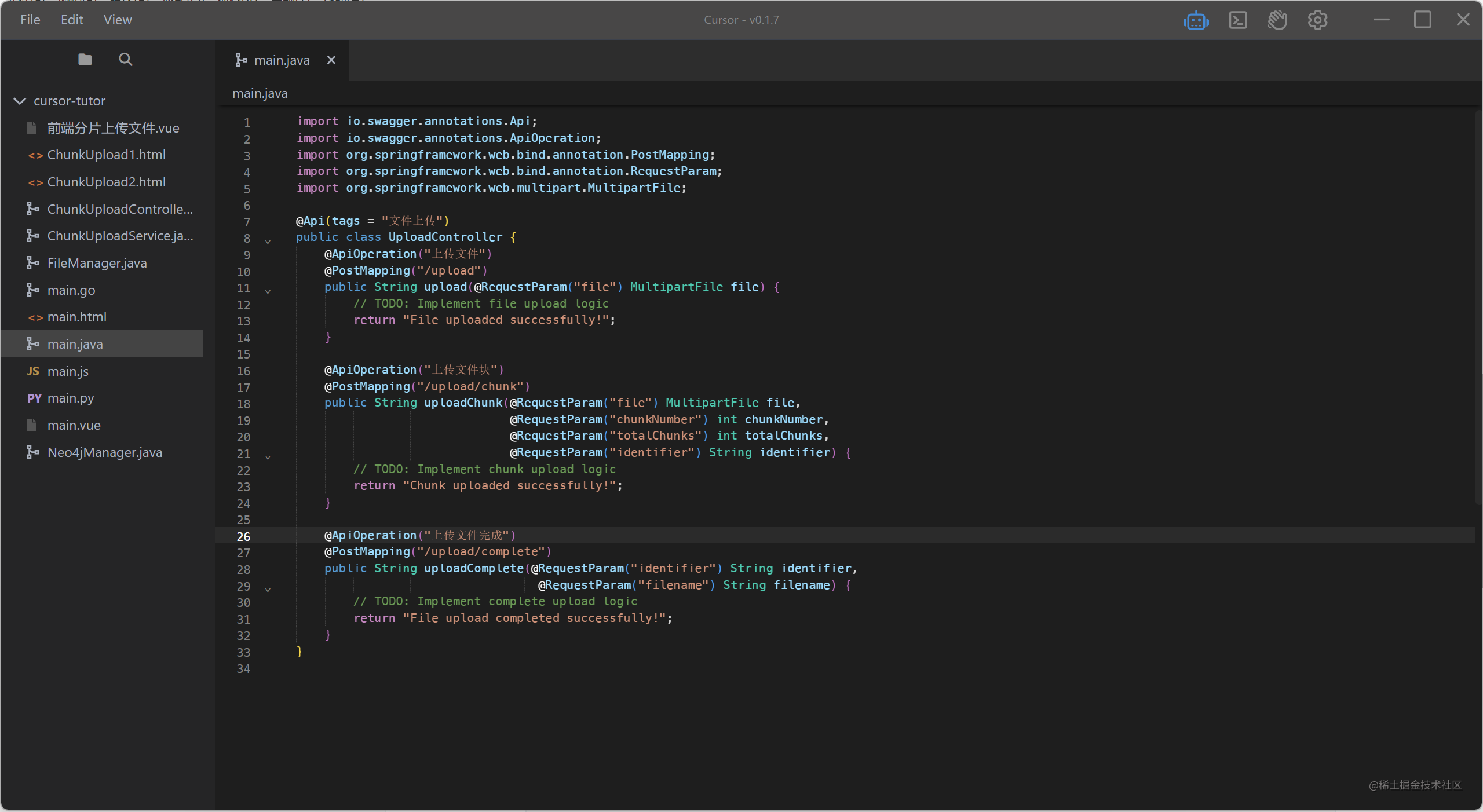

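Chunked upload example

Using Java, first create a main.java file (the file name is up to you).

# Shortcut

Ctrl + K

Input: write a chunked file upload controller

Result screenshots: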

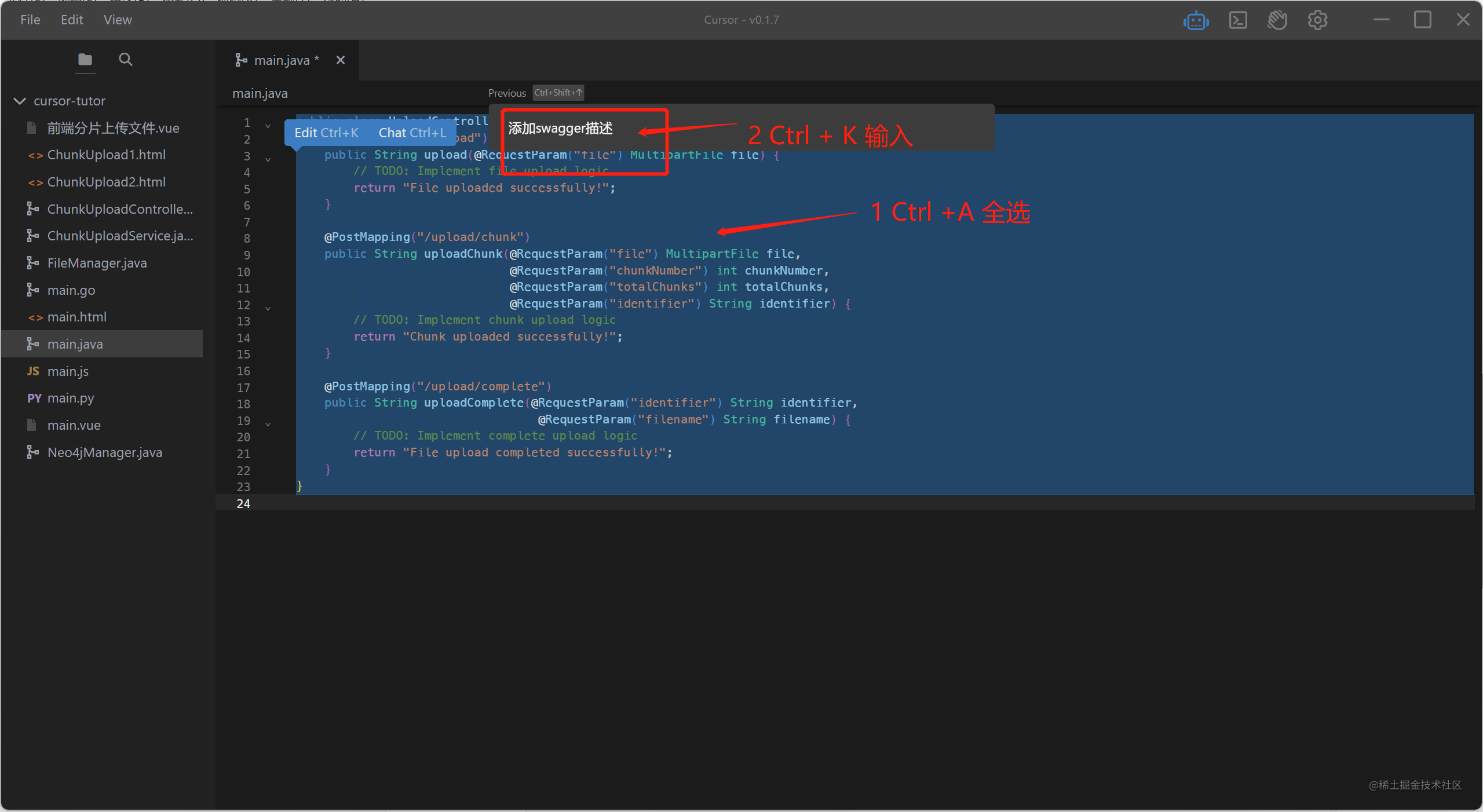

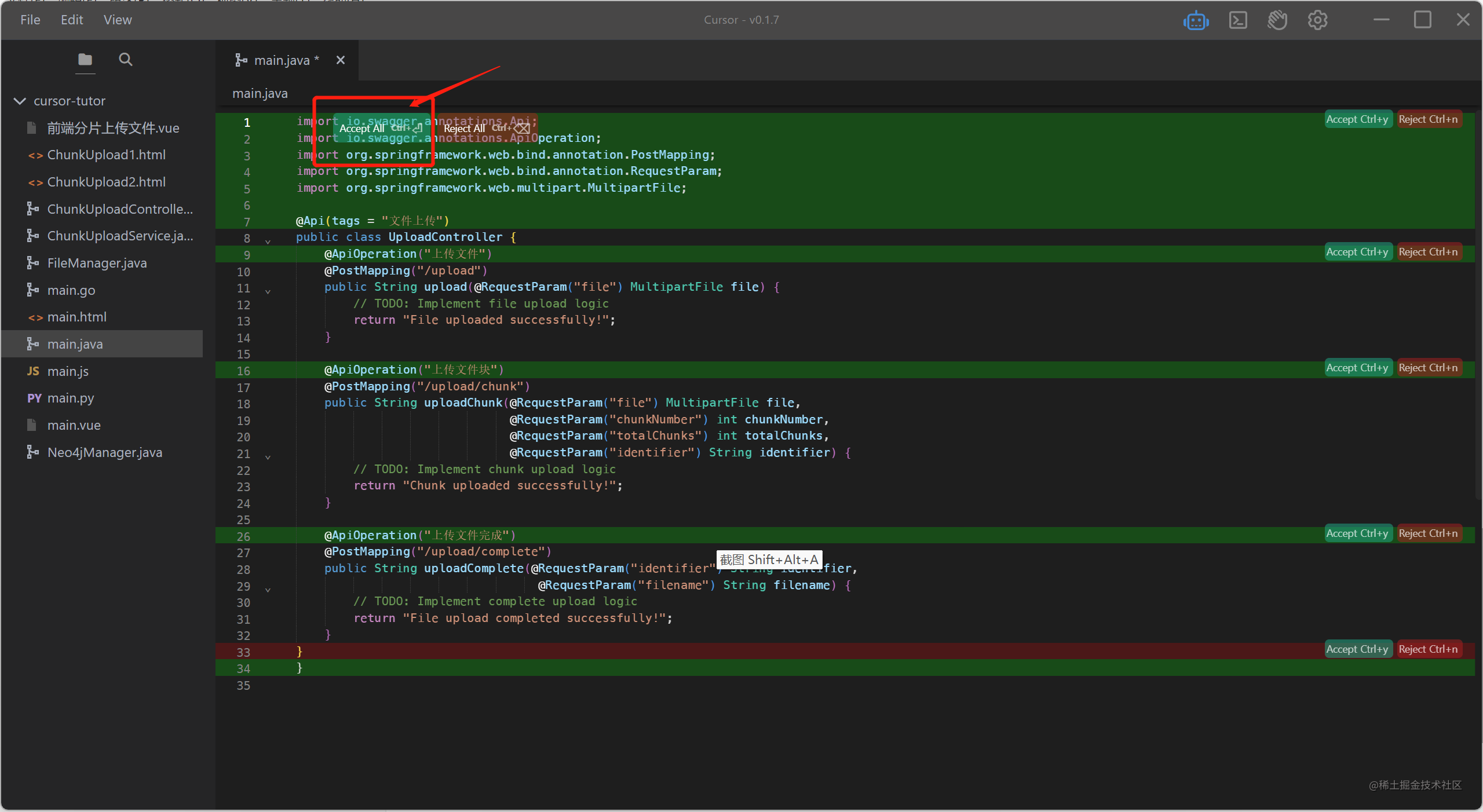

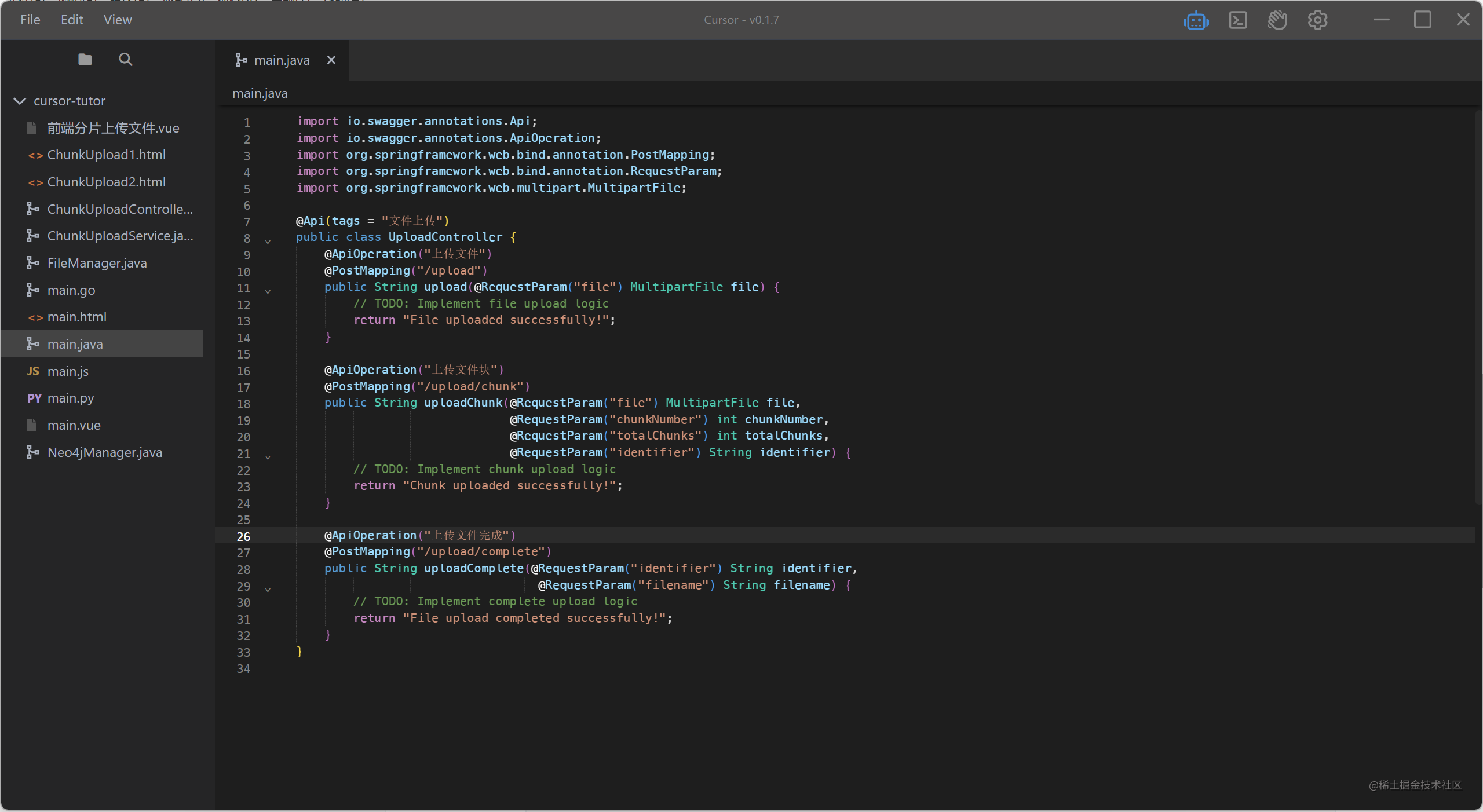

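# Shortcut

Ctrl + A to select all

# Shortcut

Ctrl + K

Input: add Swagger descriptions

That covers the basic use of Ctrl + K. You may have noticed a problem: the generated methods have no actual implementation inside them; only the class and the method signatures are defined. The following steps show how to get Cursor to fill in the method bodies: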

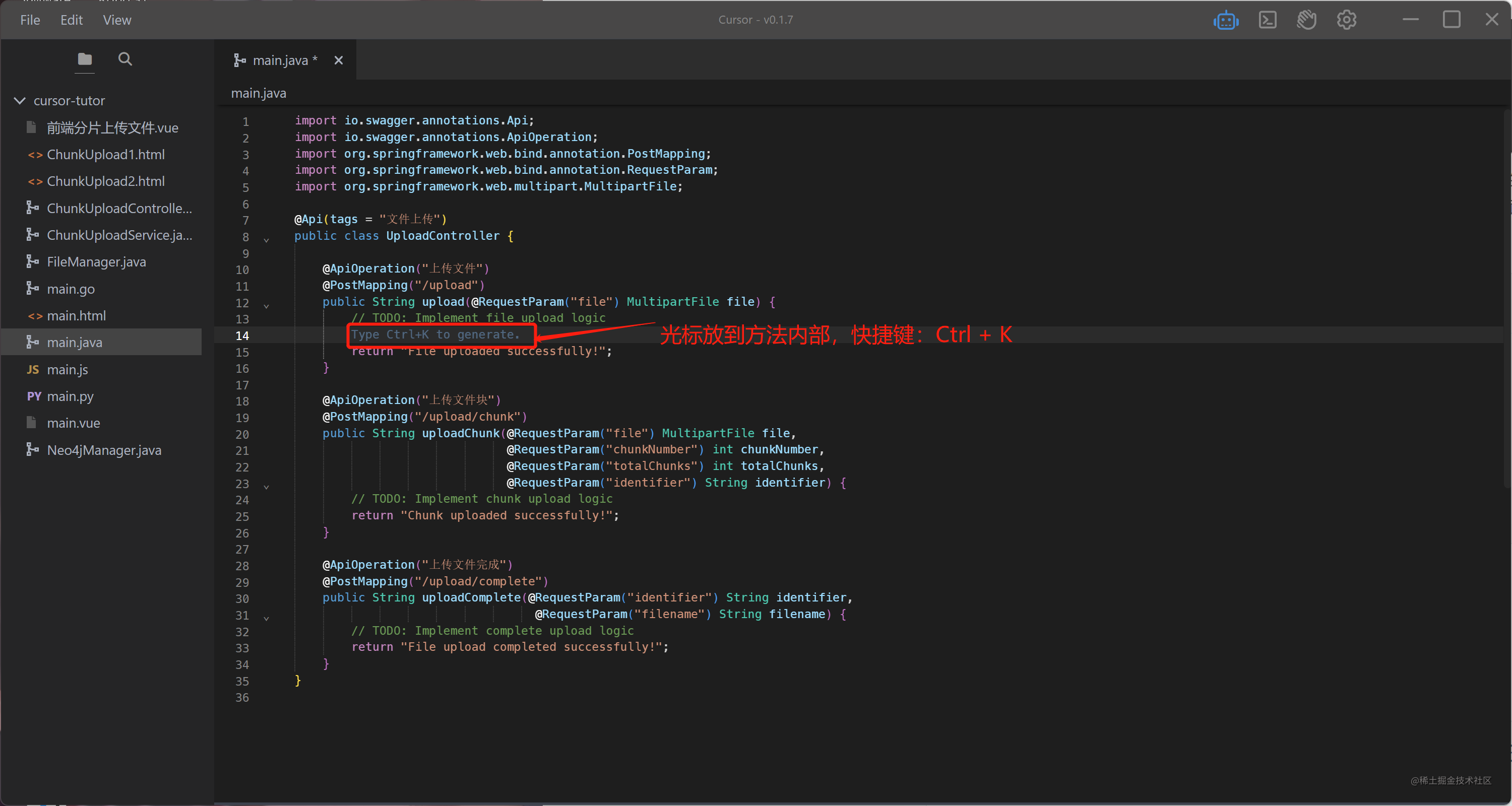

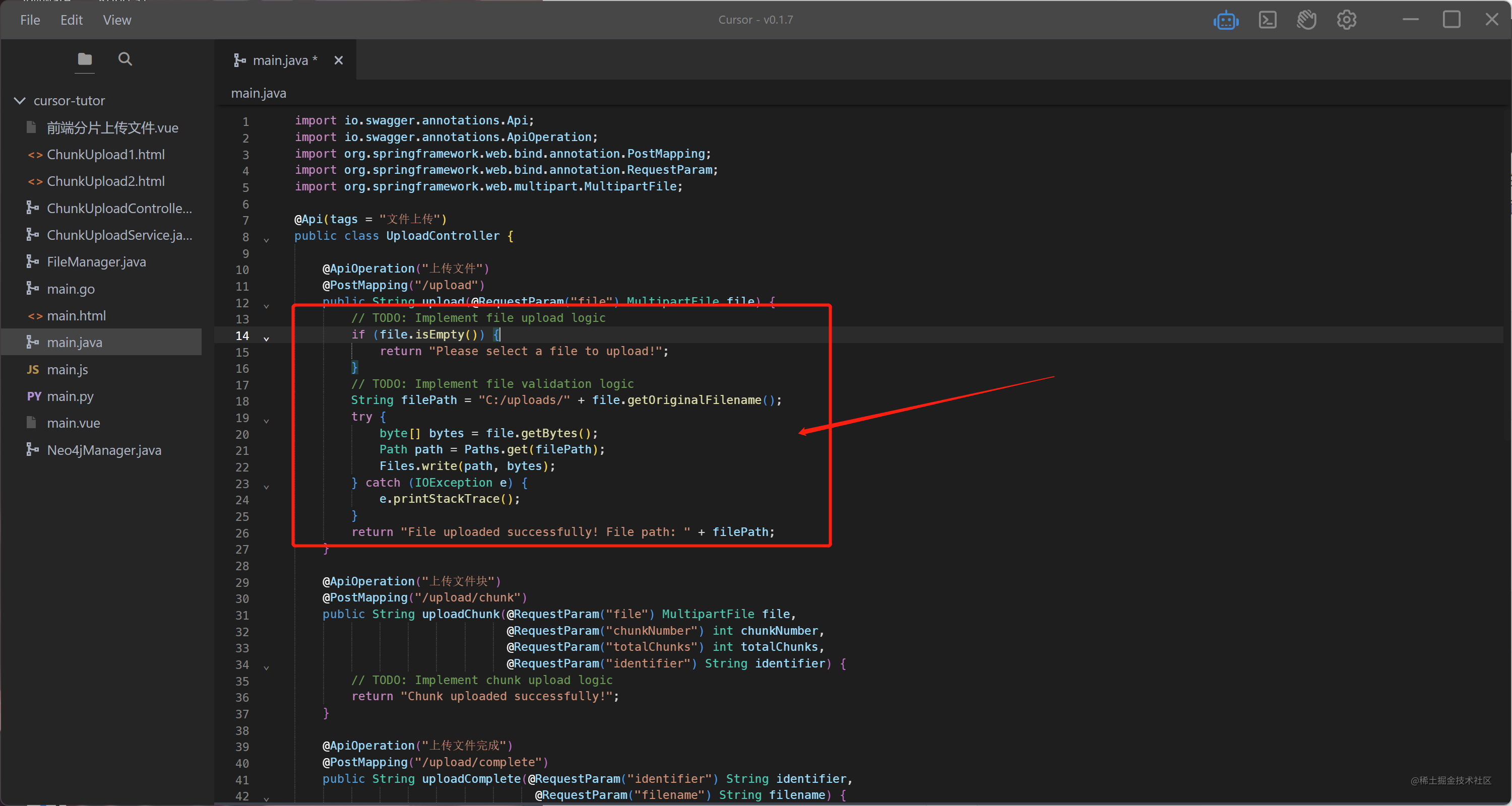

Step: fill in the method implementation

Ctrl + K

Input: validate the file first, then store it on the server and return the stored file path

Screenshots:

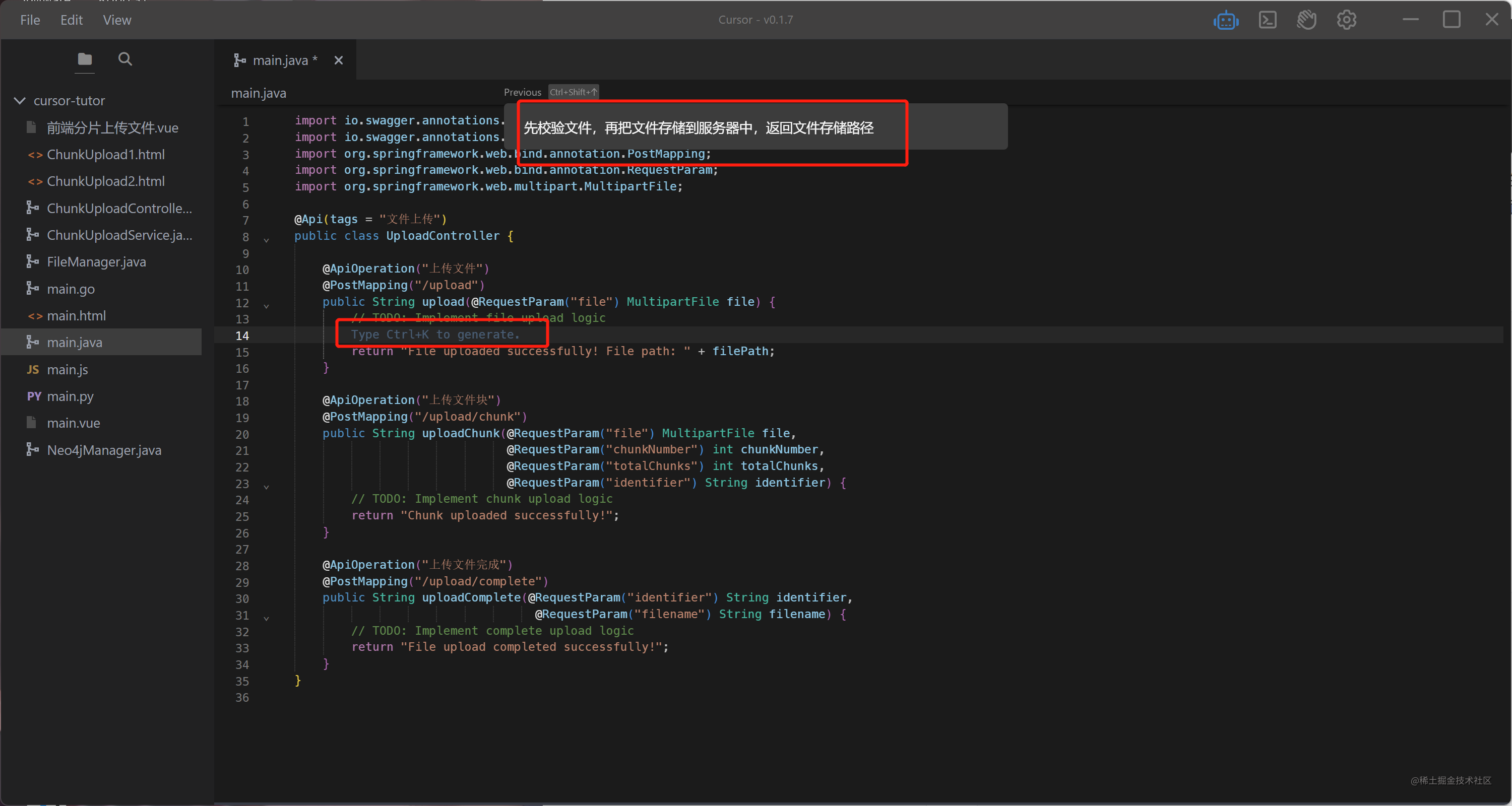

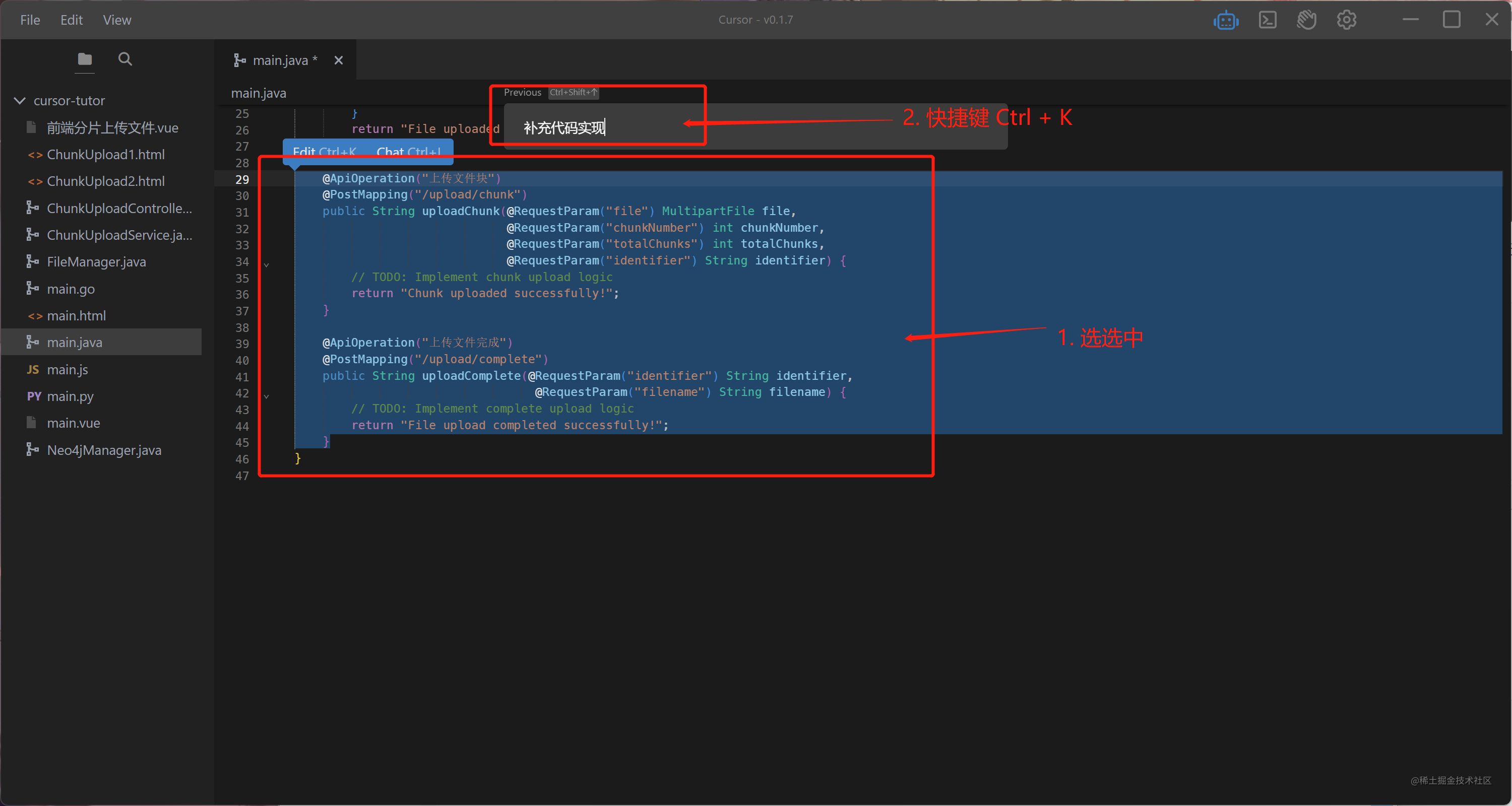

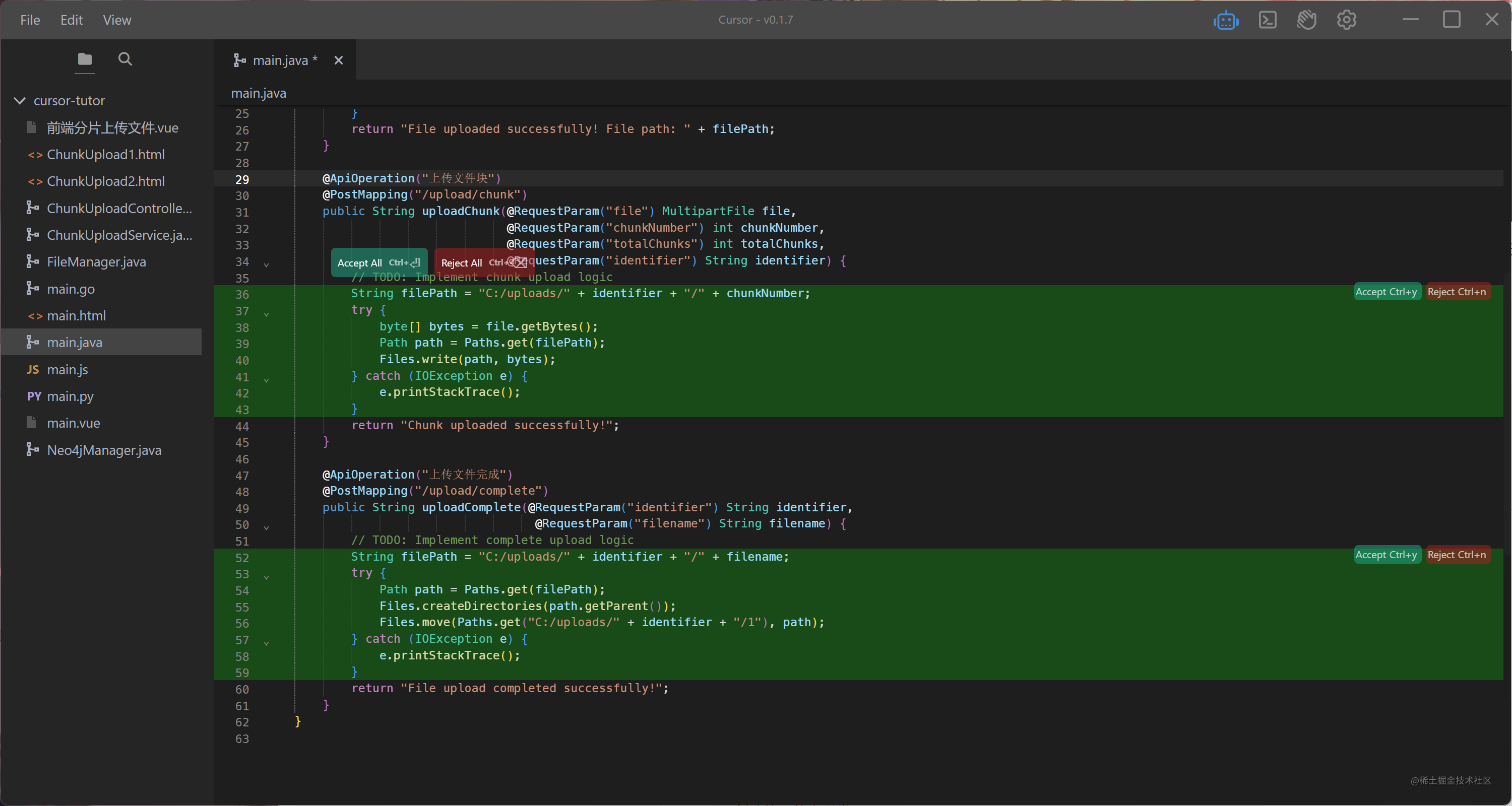

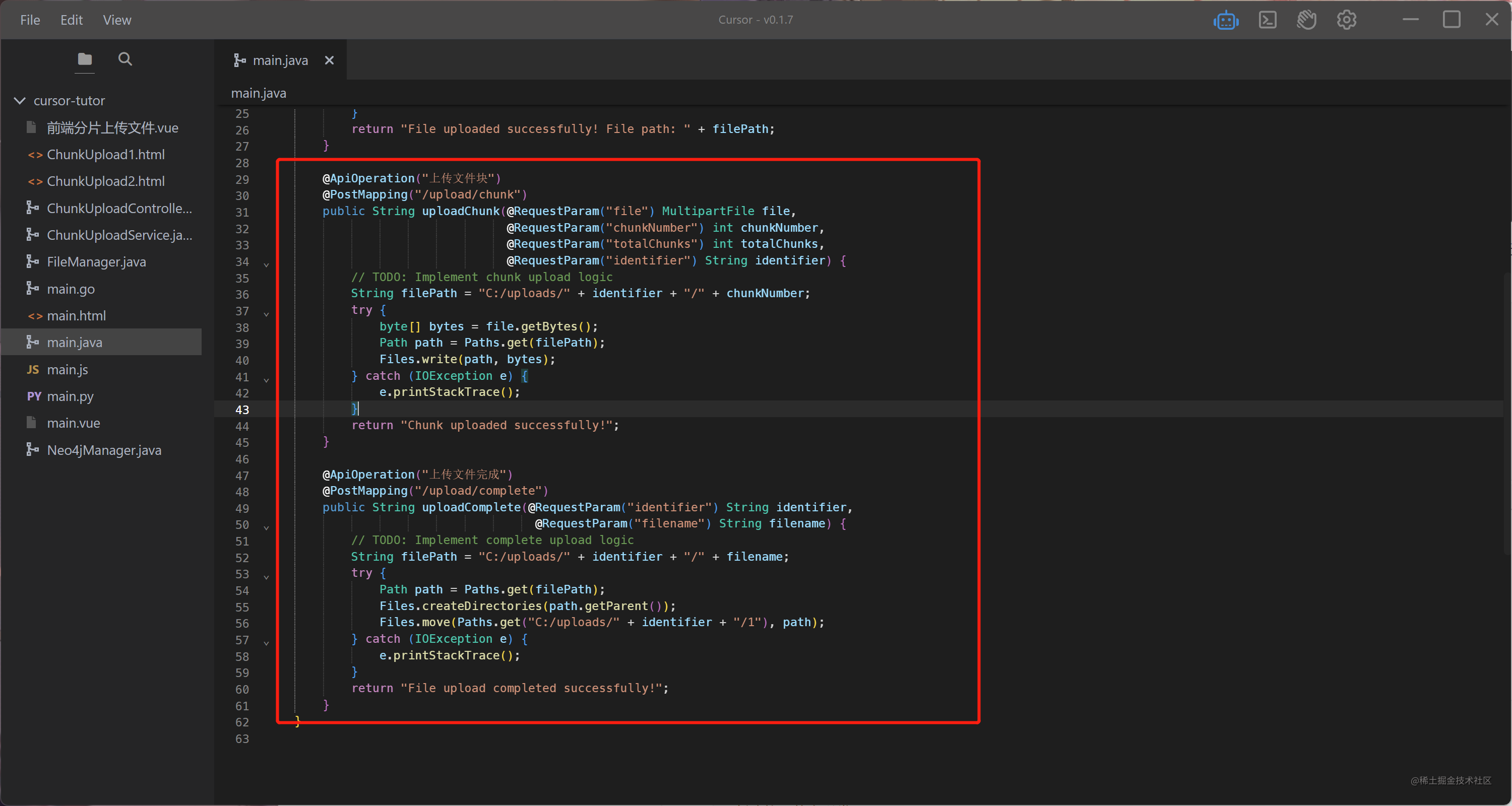

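The upload endpoint is now done. The "upload chunk" and "upload complete (merge)" endpoints depend on each other, so select both methods first, then press Ctrl + K and enter: fill in the implementation. Demonstration:

# Manually select the "upload chunk" and "upload complete" methods

# Shortcut

Ctrl + K

Input: fill in the implementation

Screenshots: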

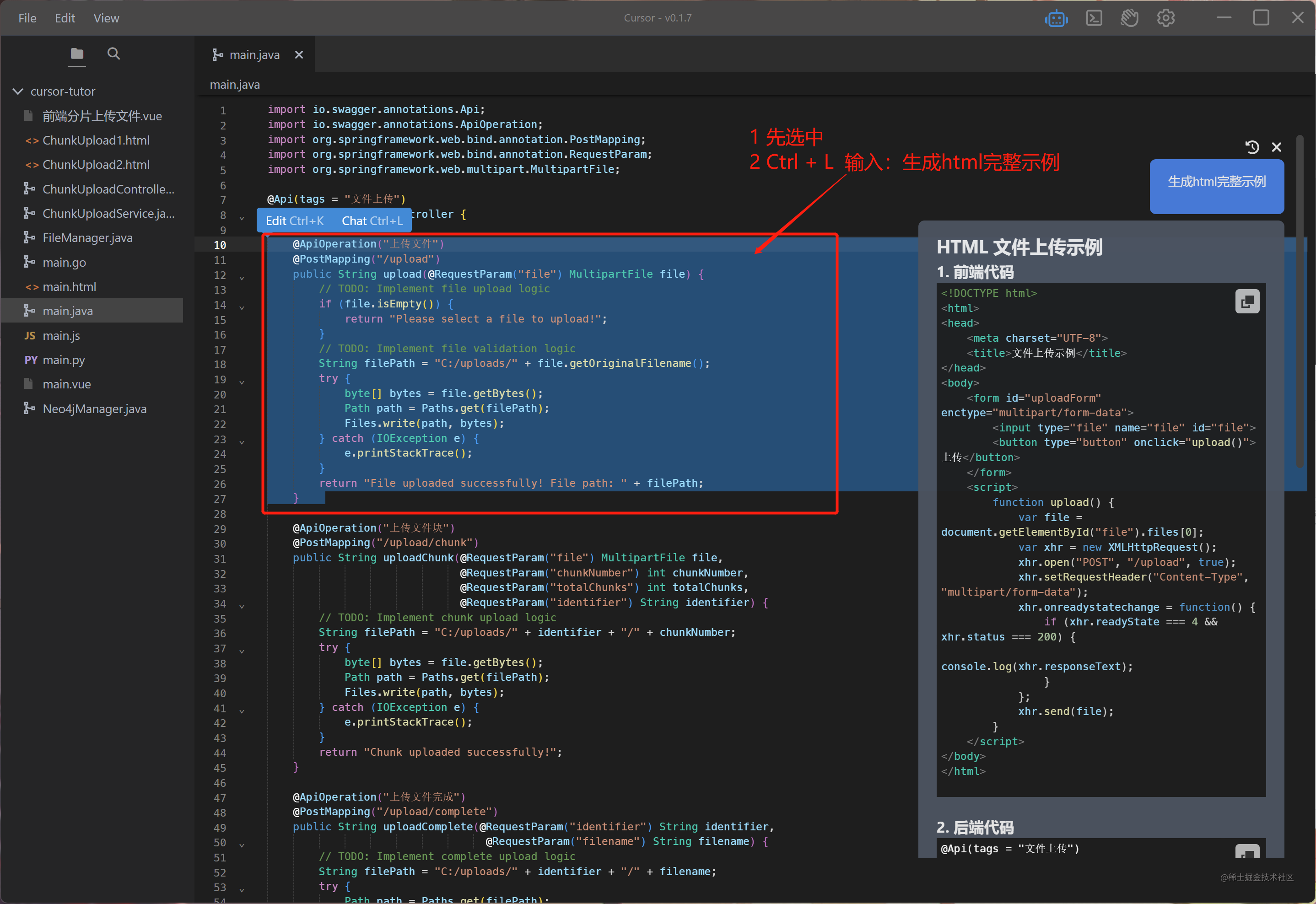

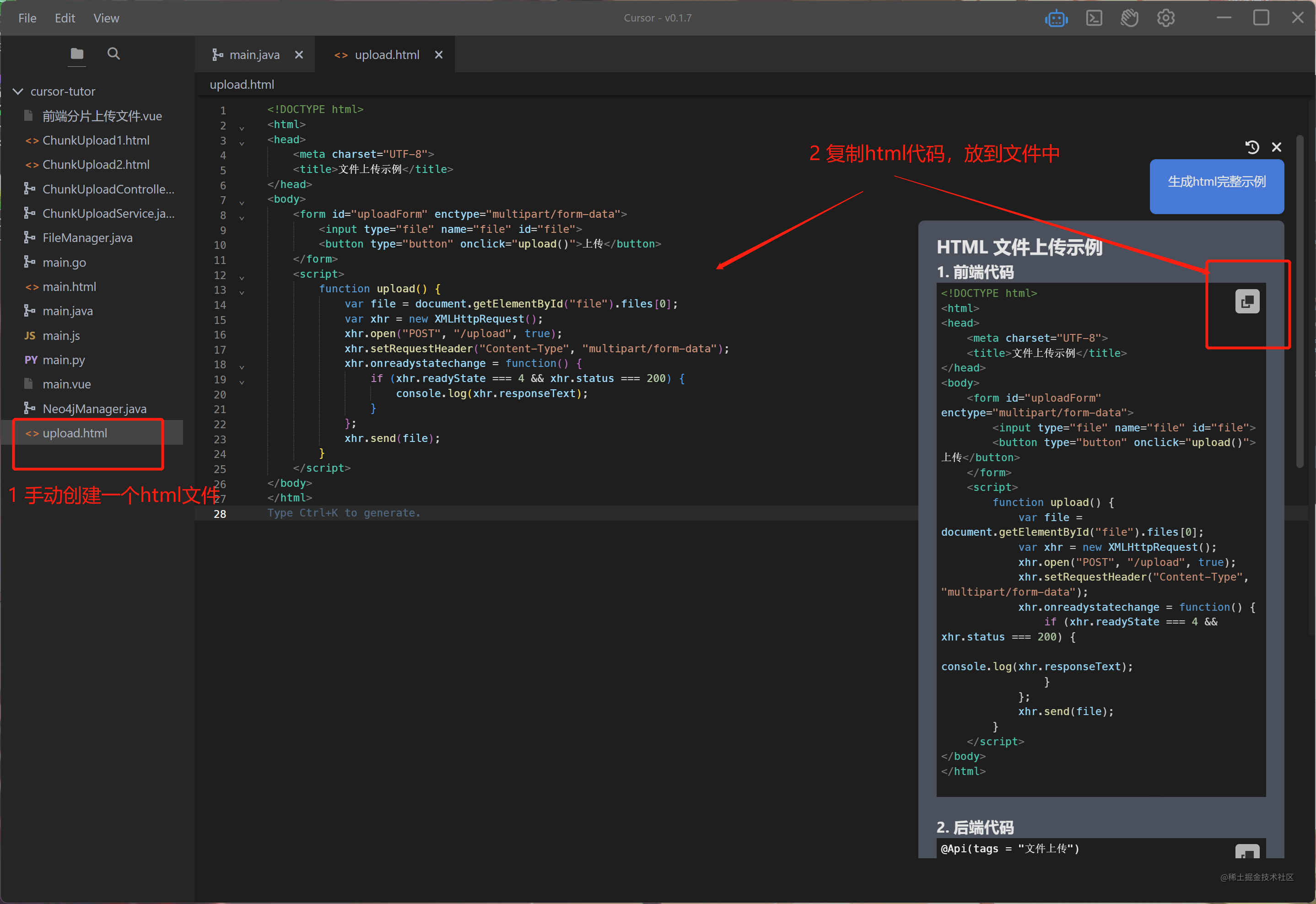
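The two endpoints are coupled because the merge step has to read back exactly what the chunk uploads wrote. A minimal sketch of that contract, assuming each chunk is stored as a file named by its index under one directory. The class name `ChunkMergeSketch`, the directory layout, and the method names are illustrative assumptions, not Cursor's output:

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;

public class ChunkMergeSketch {

    /** Stores one chunk as <dir>/<index> (hypothetical layout for illustration). */
    static void saveChunk(Path dir, int index, byte[] data) throws IOException {
        Files.createDirectories(dir);
        Files.write(dir.resolve(Integer.toString(index)), data);
    }

    /** Concatenates chunks 0..totalChunks-1 into target; fails if a chunk is missing. */
    static void merge(Path dir, int totalChunks, Path target) throws IOException {
        try (OutputStream out = Files.newOutputStream(target)) {
            for (int i = 0; i < totalChunks; i++) {
                Path chunk = dir.resolve(Integer.toString(i));
                if (!Files.exists(chunk)) {
                    throw new IOException("Missing chunk " + i);
                }
                Files.copy(chunk, out);
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("chunks");
        byte[] content = "This is a test file".getBytes(StandardCharsets.UTF_8);
        int chunkSize = 5;
        int totalChunks = (content.length + chunkSize - 1) / chunkSize;

        // Split the content into fixed-size chunks; the last one may be shorter.
        for (int i = 0; i < totalChunks; i++) {
            int from = i * chunkSize;
            int to = Math.min(from + chunkSize, content.length);
            saveChunk(dir, i, Arrays.copyOfRange(content, from, to));
        }

        Path merged = Files.createTempFile("merged", ".txt");
        merge(dir, totalChunks, merged);
        System.out.println(new String(Files.readAllBytes(merged), StandardCharsets.UTF_8));
    }
}
```

Because merge depends on the naming scheme and chunk count chosen by the upload side, it helps to give Cursor both methods as context in a single prompt.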

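Generating a front-end request example from the back-end endpoints

# Manually select the methods in the class

# Shortcut

Ctrl + L

Input: generate a complete HTML example

# Shortcut

Ctrl + L

Input: based on the selected methods, generate a complete HTML example

Continue

Screenshots are omitted here; they look much like the ones above.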

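Generic file management interface with multiple implementations

Using Java, first create a main.java file (the file name is up to you).

# Create the interface and its methods

Ctrl + K

Input: write a generic file management interface that supports chunked upload, merge, upload, download, and listing all files

# Create the implementation classes

Ctrl + K

Input: implementation class, or MinIO implementation class, or MongoDB implementation class

# Fill in the implementation class code; select the implementation class first

Ctrl + K

Input: fill in the implementation

Screenshots:

Not included here; see the code in the final results.

Final results

All of the code below was generated by Cursor, but the overall debugging process was fairly long.

Result 1: example

Note: all of the code was generated by AI.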

import org.apache.commons.io.IOUtils;
import org.bson.types.ObjectId;
import com.mongodb.MongoClientSettings;
import com.mongodb.MongoCredential;
import com.mongodb.ServerAddress;
import com.mongodb.client.MongoClient;
import com.mongodb.client.MongoClients;
import com.mongodb.client.MongoCollection;
import com.mongodb.client.MongoCursor;
import com.mongodb.client.MongoDatabase;
import com.mongodb.client.gridfs.GridFSBucket;
import com.mongodb.client.gridfs.GridFSBuckets;
import com.mongodb.client.gridfs.GridFSDownloadStream;
import com.mongodb.client.gridfs.GridFSUploadStream;
import com.mongodb.client.gridfs.model.GridFSDownloadByNameOptions;
import com.mongodb.client.gridfs.model.GridFSFile;
import com.mongodb.client.gridfs.model.GridFSUploadOptions;
import org.apache.commons.net.ftp.FTP;
import org.apache.commons.net.ftp.FTPClient;
import org.apache.commons.net.ftp.FTPFile;
import org.bson.Document;
import io.minio.MinioClient;
import io.minio.Result;
import io.minio.errors.MinioException;
import io.minio.messages.Item;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/**
 * File manager interface.
 */
public interface FileManager {

    /**
     * Uploads one chunk of a file.
     * @param inputStream file input stream
     * @param fileName file name
     * @param contentType content type
     * @param chunkIndex index of this chunk
     * @param totalChunks total number of chunks
     * @throws Exception on failure
     */
    void uploadFileChunk(InputStream inputStream, String fileName, String contentType, int chunkIndex, int totalChunks) throws Exception;

    /**
     * Merges the uploaded chunks into one file.
     * @param fileName file name
     * @param chunkSize chunk size
     * @param totalChunks total number of chunks
     * @throws Exception on failure
     */
    void mergeFile(String fileName, int chunkSize, int totalChunks) throws Exception;

    /**
     * Uploads a file.
     * @param inputStream file input stream
     * @param fileName file name
     * @param contentType content type
     * @throws Exception on failure
     */
    void uploadFile(InputStream inputStream, String fileName, String contentType) throws Exception;

    /**
     * Downloads a file.
     * @param fileName file name
     * @return file input stream
     * @throws Exception on failure
     */
    InputStream downloadFile(String fileName) throws Exception;

    /**
     * Deletes a file.
     * @param fileName file name
     * @throws Exception on failure
     */
    void deleteFile(String fileName) throws Exception;

    /**
     * Lists all files.
     * @return list of files
     * @throws Exception on failure (some implementations talk to a remote store)
     */
    List<Document> listFiles() throws Exception;
}

public class FileManagerImpl implements FileManager {
    private static final String FILE_DIRECTORY = "C:/fileStorage/";

    @Override
    public void uploadFileChunk(InputStream inputStream, String fileName, String contentType, int chunkIndex, int totalChunks) throws Exception {
        File directory = new File(FILE_DIRECTORY);
        if (!directory.exists()) {
            directory.mkdirs();
        }
        // Each chunk is stored as <fileName>_<chunkIndex>
        try (FileOutputStream outputStream = new FileOutputStream(new File(directory, fileName + "_" + chunkIndex))) {
            IOUtils.copy(inputStream, outputStream);
        } catch (Exception e) {
            throw new Exception("Failed to upload file chunk", e);
        }
    }

    @Override
    public void mergeFile(String fileName, int chunkSize, int totalChunks) throws Exception {
        File directory = new File(FILE_DIRECTORY);
        if (!directory.exists()) {
            directory.mkdirs();
        }
        // Append the chunks in index order to the target file
        try (FileOutputStream outputStream = new FileOutputStream(new File(directory, fileName))) {
            for (int i = 0; i < totalChunks; i++) {
                try (FileInputStream inputStream = new FileInputStream(new File(directory, fileName + "_" + i))) {
                    IOUtils.copy(inputStream, outputStream);
                }
            }
        } catch (Exception e) {
            throw new Exception("Failed to merge file", e);
        }
    }

    @Override
    public void uploadFile(InputStream inputStream, String fileName, String contentType) throws Exception {
        File directory = new File(FILE_DIRECTORY);
        if (!directory.exists()) {
            directory.mkdirs();
        }
        try (FileOutputStream outputStream = new FileOutputStream(new File(directory, fileName))) {
            IOUtils.copy(inputStream, outputStream);
        } catch (Exception e) {
            throw new Exception("Failed to upload file", e);
        }
    }

    @Override
    public InputStream downloadFile(String fileName) throws Exception {
        File file = new File(FILE_DIRECTORY + fileName);
        if (!file.exists()) {
            throw new Exception("File not found");
        }
        return new FileInputStream(file);
    }

    @Override
    public void deleteFile(String fileName) throws Exception {
        File file = new File(FILE_DIRECTORY + fileName);
        if (!file.exists()) {
            throw new Exception("File not found");
        }
        file.delete();
    }

    @Override
    public List<Document> listFiles() {
        File directory = new File(FILE_DIRECTORY);
        File[] files = directory.listFiles();
        List<Document> documents = new ArrayList<>();
        if (files != null) {
            for (File file : files) {
                // org.bson.Document is a map, so fields are set with put()
                Document document = new Document();
                document.put("name", file.getName());
                document.put("size", file.length());
                document.put("storageType", "local");

                documents.add(document);
            }
        }
        return documents;
    }
}

public class MinioFileManagerImpl implements FileManager {
    private static final String BUCKET_NAME = "file-storage";
    private static final String ENDPOINT = "http://localhost:9000";
    private static final String ACCESS_KEY = "minioadmin";
    private static final String SECRET_KEY = "minioadmin";
    private final MinioClient minioClient;

    public MinioFileManagerImpl() throws Exception {
        minioClient = new MinioClient(ENDPOINT, ACCESS_KEY, SECRET_KEY);
        if (!minioClient.bucketExists(BUCKET_NAME)) {
            minioClient.makeBucket(BUCKET_NAME);
        }
    }

    @Override
    public void uploadFileChunk(InputStream inputStream, String fileName, String contentType, int chunkIndex, int totalChunks) throws Exception {
        String objectName = fileName + "_" + chunkIndex;
        minioClient.putObject(BUCKET_NAME, objectName, inputStream, contentType);
    }

    @Override
    public void mergeFile(String fileName, int chunkSize, int totalChunks) throws Exception {
        List<String> objectNames = new ArrayList<>();
        for (int i = 0; i < totalChunks; i++) {
            objectNames.add(fileName + "_" + i);
        }
        String mergedObjectName = fileName;
        // The exact composeObject signature varies across MinIO SDK versions; adjust to the version in use.
        minioClient.composeObject(BUCKET_NAME, objectNames, mergedObjectName);
        // Remove the chunk objects once they have been composed
        for (String objectName : objectNames) {
            minioClient.removeObject(BUCKET_NAME, objectName);
        }
    }

    @Override
    public void uploadFile(InputStream inputStream, String fileName, String contentType) throws Exception {
        minioClient.putObject(BUCKET_NAME, fileName, inputStream, contentType);
    }

    @Override
    public InputStream downloadFile(String fileName) throws Exception {
        return minioClient.getObject(BUCKET_NAME, fileName);
    }

    @Override
    public void deleteFile(String fileName) throws Exception {
        minioClient.removeObject(BUCKET_NAME, fileName);
    }

    @Override
    public List<Document> listFiles() throws Exception {
        List<Document> documents = new ArrayList<>();
        Iterable<Result<Item>> results = minioClient.listObjects(BUCKET_NAME);
        for (Result<Item> result : results) {
            Item item = result.get();
            Document document = new Document();
            document.put("name", item.objectName());
            document.put("size", item.size());
            document.put("storageType", "minio");

            documents.add(document);
        }
        return documents;
    }
}

public class MongoFileManagerImpl implements FileManager {
    private static final String DATABASE_NAME = "fileStorage";
    private static final String COLLECTION_NAME = "files";
    // MongoCollection has no getDatabase(), so the database handle is kept for GridFS
    private final MongoDatabase database;
    private final MongoCollection<Document> collection;

    // public MongoFileManagerImpl() {
    //     MongoClient mongoClient = MongoClients.create();
    //     MongoDatabase database = mongoClient.getDatabase(DATABASE_NAME);
    //     collection = database.getCollection(COLLECTION_NAME);
    // }

    public MongoFileManagerImpl() {
        MongoClient mongoClient = MongoClients.create(
                MongoClientSettings.builder()
                        .applyToClusterSettings(builder ->
                                builder.hosts(Arrays.asList(new ServerAddress("localhost", 27017))))
                        .credential(MongoCredential.createCredential("username", "fileStorage", "password".toCharArray()))
                        .build());
        database = mongoClient.getDatabase(DATABASE_NAME);
        collection = database.getCollection(COLLECTION_NAME);
    }

    @Override
    public void uploadFileChunk(InputStream inputStream, String fileName, String contentType, int chunkIndex, int totalChunks) throws Exception {
        GridFSBucket gridFSBucket = GridFSBuckets.create(database, COLLECTION_NAME);
        GridFSUploadOptions options = new GridFSUploadOptions()
                .chunkSizeBytes(1024 * 1024)
                .metadata(new Document("fileName", fileName));
        try (GridFSUploadStream uploadStream = gridFSBucket.openUploadStream(fileName + "_" + chunkIndex, options)) {
            IOUtils.copy(inputStream, uploadStream);
        } catch (Exception e) {
            throw new Exception("Failed to upload file chunk", e);
        }
    }

    @Override
    public void mergeFile(String fileName, int chunkSize, int totalChunks) throws Exception {
        GridFSBucket gridFSBucket = GridFSBuckets.create(database, COLLECTION_NAME);
        GridFSDownloadByNameOptions options = new GridFSDownloadByNameOptions().revision(0);
        // Note: the merged result is written to a local file, not back into GridFS
        try (FileOutputStream outputStream = new FileOutputStream(new File(fileName))) {
            for (int i = 0; i < totalChunks; i++) {
                try (GridFSDownloadStream downloadStream = gridFSBucket.openDownloadStreamByName(fileName + "_" + i, options)) {
                    IOUtils.copy(downloadStream, outputStream);
                }
            }
        } catch (Exception e) {
            throw new Exception("Failed to merge file", e);
        }
    }

    @Override
    public void uploadFile(InputStream inputStream, String fileName, String contentType) throws Exception {
        GridFSBucket gridFSBucket = GridFSBuckets.create(database, COLLECTION_NAME);
        GridFSUploadOptions options = new GridFSUploadOptions()
                .chunkSizeBytes(1024 * 1024)
                .metadata(new Document("fileName", fileName));
        try (GridFSUploadStream uploadStream = gridFSBucket.openUploadStream(fileName, options)) {
            IOUtils.copy(inputStream, uploadStream);
        } catch (Exception e) {
            throw new Exception("Failed to upload file", e);
        }
    }

    @Override
    public InputStream downloadFile(String fileName) throws Exception {
        GridFSBucket gridFSBucket = GridFSBuckets.create(database, COLLECTION_NAME);
        GridFSDownloadByNameOptions options = new GridFSDownloadByNameOptions().revision(0);
        GridFSDownloadStream downloadStream = gridFSBucket.openDownloadStreamByName(fileName, options);
        if (downloadStream == null) {
            throw new Exception("File not found");
        }
        return downloadStream;
    }

    @Override
    public void deleteFile(String fileName) throws Exception {
        GridFSBucket gridFSBucket = GridFSBuckets.create(database, COLLECTION_NAME);
        // GridFS deletes by ObjectId, so look the file up by name first
        GridFSFile file = gridFSBucket.find(new Document("filename", fileName)).first();
        if (file == null) {
            throw new Exception("File not found");
        }
        gridFSBucket.delete(file.getObjectId());
    }

    @Override
    public List<Document> listFiles() {
        List<Document> documents = new ArrayList<>();
        GridFSBucket gridFSBucket = GridFSBuckets.create(database, COLLECTION_NAME);
        try (MongoCursor<GridFSFile> cursor = gridFSBucket.find().iterator()) {
            while (cursor.hasNext()) {
                GridFSFile file = cursor.next();
                Document document = new Document();
                document.put("name", file.getFilename());
                document.put("size", file.getLength());
                document.put("storageType", "mongo");

                documents.add(document);
            }
        }
        return documents;
    }
}

public class FtpFileManagerImpl implements FileManager {
    private static final String SERVER_ADDRESS = "localhost";
    private static final int SERVER_PORT = 21;
    private static final String USERNAME = "username";
    private static final String PASSWORD = "password";
    private static final String REMOTE_DIRECTORY = "/fileStorage/";
    private final FTPClient ftpClient;

    public FtpFileManagerImpl() throws Exception {
        ftpClient = new FTPClient();
        ftpClient.connect(SERVER_ADDRESS, SERVER_PORT);
        ftpClient.login(USERNAME, PASSWORD);
        ftpClient.enterLocalPassiveMode();
        ftpClient.setFileType(FTP.BINARY_FILE_TYPE);
        ftpClient.changeWorkingDirectory(REMOTE_DIRECTORY);
    }

    @Override
    public void uploadFileChunk(InputStream inputStream, String fileName, String contentType, int chunkIndex, int totalChunks) throws Exception {
        String remoteFileName = fileName + "_" + chunkIndex;
        ftpClient.storeFile(remoteFileName, inputStream);
    }

    @Override
    public void mergeFile(String fileName, int chunkSize, int totalChunks) throws Exception {
        // Note: the merged result is written to a local file, not back to the FTP server
        try (FileOutputStream outputStream = new FileOutputStream(new File(fileName))) {
            for (int i = 0; i < totalChunks; i++) {
                String remoteFileName = fileName + "_" + i;
                ftpClient.retrieveFile(remoteFileName, outputStream);
            }
        } catch (Exception e) {
            throw new Exception("Failed to merge file", e);
        }
    }

    @Override
    public void uploadFile(InputStream inputStream, String fileName, String contentType) throws Exception {
        ftpClient.storeFile(fileName, inputStream);
    }

    @Override
    public InputStream downloadFile(String fileName) throws Exception {
        ByteArrayOutputStream outputStream = new ByteArrayOutputStream();
        boolean success = ftpClient.retrieveFile(fileName, outputStream);
        if (!success) {
            throw new Exception("File not found");
        }
        return new ByteArrayInputStream(outputStream.toByteArray());
    }

    @Override
    public void deleteFile(String fileName) throws Exception {
        boolean success = ftpClient.deleteFile(fileName);
        if (!success) {
            throw new Exception("File not found");
        }
    }

    @Override
    public List<Document> listFiles() throws Exception {
        FTPFile[] files = ftpClient.listFiles();
        List<Document> documents = new ArrayList<>();
        if (files != null) {
            for (FTPFile file : files) {
                Document document = new Document();
                document.put("name", file.getName());
                document.put("size", file.getSize());
                document.put("storageType", "ftp");

                documents.add(document);
            }
        }
        return documents;
    }
}

import org.junit.jupiter.api.Test;
import static org.junit.jupiter.api.Assertions.*;
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.util.List;

public class FileManagerTest {
    private final FileManager fileManager;

    public FileManagerTest() throws Exception {
        fileManager = new MongoFileManagerImpl();
    }

    @Test
    public void testUploadFile() throws Exception {
        String fileName = "test.txt";
        String content = "This is a test file";
        InputStream inputStream = new ByteArrayInputStream(content.getBytes());
        fileManager.uploadFile(inputStream, fileName, "text/plain");
        InputStream downloadedInputStream = fileManager.downloadFile(fileName);
        byte[] downloadedBytes = downloadedInputStream.readAllBytes();
        String downloadedContent = new String(downloadedBytes);
        assertEquals(content, downloadedContent);
        fileManager.deleteFile(fileName);
    }

    @Test
    public void testUploadFileChunk() throws Exception {
        String fileName = "test.txt";
        String content = "This is a test file";
        int chunkSize = 5;
        int totalChunks = (int) Math.ceil((double) content.length() / chunkSize);
        InputStream inputStream = new ByteArrayInputStream(content.getBytes());
        for (int i = 0; i < totalChunks; i++) {
            byte[] chunkBytes = new byte[chunkSize];
            int bytesRead = inputStream.read(chunkBytes);
            InputStream chunkInputStream = new ByteArrayInputStream(chunkBytes, 0, bytesRead);
            fileManager.uploadFileChunk(chunkInputStream, fileName, "text/plain", i, totalChunks);
        }
        fileManager.mergeFile(fileName, chunkSize, totalChunks);
        InputStream downloadedInputStream = fileManager.downloadFile(fileName);
        byte[] downloadedBytes = downloadedInputStream.readAllBytes();
        String downloadedContent = new String(downloadedBytes);
        assertEquals(content, downloadedContent);
        fileManager.deleteFile(fileName);
    }

    @Test
    public void testDeleteFile() throws Exception {
        String fileName = "test.txt";
        String content = "This is a test file";
        InputStream inputStream = new ByteArrayInputStream(content.getBytes());
        fileManager.uploadFile(inputStream, fileName, "text/plain");
        fileManager.deleteFile(fileName);
        boolean fileExists = true;
        try {
            fileManager.downloadFile(fileName);
        } catch (Exception e) {
            fileExists = false;
        }
        assertFalse(fileExists);
    }

    // Download-to-disk and resumable-upload methods, written against the GridFS-based implementation
    @Override
    public void downloadFileToDisk(String fileName, String localFilePath) throws Exception {
        File file = new File(localFilePath);
        try (FileOutputStream outputStream = new FileOutputStream(file)) {
            IOUtils.copy(downloadFile(fileName), outputStream);
        } catch (Exception e) {
            throw new Exception("Failed to download file to disk", e);
        }
    }

    @Override
    public void continueUploadFile(InputStream inputStream, String fileName, String contentType, long position) throws Exception {
        GridFSBucket gridFSBucket = GridFSBuckets.create(collection.getDatabase(), COLLECTION_NAME);
        GridFSUploadOptions options = new GridFSUploadOptions()
                .chunkSizeBytes(1024 * 1024)
                .metadata(new Document("fileName", fileName));
        try (GridFSUploadStream uploadStream = gridFSBucket.openUploadStream(fileName, options)) {
            uploadStream.setPosition(position);
            IOUtils.copy(inputStream, uploadStream);
        } catch (Exception e) {
            throw new Exception("Failed to continue upload file", e);
        }
    }

    @Override
    public void downloadFileToDisk(String fileName, String localFilePath, long position) throws Exception {
        File file = new File(localFilePath);
        // Append to the local file, skipping the bytes that were already downloaded
        try (FileOutputStream outputStream = new FileOutputStream(file, true)) {
            InputStream inputStream = downloadFile(fileName);
            inputStream.skip(position);
            IOUtils.copy(inputStream, outputStream);
        } catch (Exception e) {
            throw new Exception("Failed to download file to disk", e);
        }
    }

    @Test
    public void testListFiles() throws Exception {
        List<Document> documents = fileManager.listFiles();
        assertNotNull(documents);
    }
}

Result 2: example

ChunkUploadController.java

Note: all of the code was generated by AI.

import io.swagger.annotations.Api;
import io.swagger.annotations.ApiOperation;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.CrossOrigin;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.multipart.MultipartFile;
import java.io.IOException;

/**
 * This controller handles chunked file upload and merging.
 */
@CrossOrigin
@Api(tags = "Chunked upload")
@RestController
@RequestMapping("/chunk")
public class ChunkUploadController {

    @Autowired
    private ChunkUploadService chunkUploadService;

    /**
     * Uploads one chunk of a file.
     * @param file the file chunk
     * @param chunkNumber number of the current chunk
     * @param totalChunks total number of chunks
     * @param identifier unique identifier of the file
     * @param filename file name
     * @throws IOException if an I/O error occurs
     */
    @ApiOperation(value = "Upload one chunk of a file", notes = "Upload one chunk of a file so it can be merged later")
    @PostMapping("/upload")
    public ResponseEntity<?> upload(@RequestParam("file") MultipartFile file,
                                    @RequestParam("chunkNumber") Integer chunkNumber,
                                    @RequestParam("totalChunks") Integer totalChunks,
                                    @RequestParam("identifier") String identifier,
                                    @RequestParam("filename") String filename) throws IOException {
        chunkUploadService.upload(file, chunkNumber, totalChunks, identifier, filename);
        return ResponseEntity.ok().build();
    }

    /**
     * Merges the uploaded file chunks.
     * @param identifier unique identifier of the file
     * @param filename file name
     * @param totalChunks total number of chunks
     * @throws IOException if an I/O error occurs
     */
    @ApiOperation(value = "Merge the uploaded file chunks", notes = "Merge all uploaded chunks into a single file")
    @PostMapping("/merge")
    public ResponseEntity<?> merge(@RequestParam("identifier") String identifier,
                                   @RequestParam("filename") String filename,
                                   @RequestParam("totalChunks") Integer totalChunks) throws IOException {
        chunkUploadService.merge(identifier, filename, totalChunks);
        return ResponseEntity.ok().build();
    }
}

ChunkUploadService.java

Note: all of the code was generated by AI.

import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Comparator;
import java.util.stream.Stream;
import org.apache.commons.io.IOUtils;
import org.springframework.web.multipart.MultipartFile;
import org.springframework.stereotype.Service;

/**
 * This class provides methods for uploading and merging file chunks.
 */
@Service
public class ChunkUploadService {

    /**
     * Uploads a file chunk with the given parameters.
     *
     * @param file the chunk to upload
     * @param chunkNumber number of the chunk being uploaded
     * @param totalChunks total number of chunks the file is split into
     * @param identifier identifier of the file being uploaded
     * @param filename name of the file being uploaded
     * @throws IOException if an I/O error occurs
     */
    public void upload(MultipartFile file, Integer chunkNumber, Integer totalChunks, String identifier, String filename) throws IOException {
        // Each chunk is stored as uploads/<identifier>/<chunkNumber>
        Path chunkPath = Paths.get("uploads", identifier, chunkNumber.toString());
        Files.createDirectories(chunkPath.getParent());
        if (!Files.exists(chunkPath)) {
            Files.write(chunkPath, file.getBytes());
        } else {
            // Handle the case where the chunk file already exists
        }
    }

    /**
     * Merges all uploaded chunks for the given identifier into a single file with the given name.
     * Returns without merging if not all chunks have been uploaded.
     * @param identifier identifier of the file being uploaded
     * @param filename name of the file being uploaded
     * @param totalChunks total number of chunks the file is split into
     * @throws IOException if an I/O error occurs
     */
    public void merge(String identifier, String filename, Integer totalChunks) throws IOException {
        if (!isUploadComplete(identifier, totalChunks)) {
            // Handle the case where not all chunks have been uploaded
            return;
        }
        Path dirPath = Paths.get("uploads", identifier);
        Path filePath = Paths.get("uploads", filename);
        // Files.list holds a directory handle, so it is closed together with the output stream
        try (OutputStream out = Files.newOutputStream(filePath);
             Stream<Path> chunks = Files.list(dirPath)) {
            chunks.filter(path -> !Files.isDirectory(path))
                    // Sort by numeric chunk number so that chunk 10 comes after chunk 2
                    .sorted(Comparator.comparingInt(path -> Integer.parseInt(path.getFileName().toString())))
                    .forEachOrdered(path -> {
                        try (InputStream in = Files.newInputStream(path)) {
                            IOUtils.copy(in, out);
                        } catch (IOException e) {
                            throw new UncheckedIOException(e);
                        }
                    });
        }
    }

    /**
     * Checks whether all chunks for the given identifier have been uploaded.
     * @param identifier identifier of the file being uploaded
     * @param totalChunks total number of chunks the file is split into
     * @return true if all chunks have been uploaded, false otherwise
     * @throws IOException if an I/O error occurs
     */
    public boolean isUploadComplete(String identifier, Integer totalChunks) throws IOException {
        Path dirPath = Paths.get("uploads", identifier);
        try (Stream<Path> chunks = Files.list(dirPath)) {
            long count = chunks.filter(path -> !Files.isDirectory(path)).count();
            return count == totalChunks;
        }
    }
}
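The merge step sorts chunk files by the numeric value of their names rather than as plain strings, which matters as soon as there are ten or more chunks. A small sketch of the difference (the class and method names here are illustrative, not part of the generated service):

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

public class ChunkOrderSketch {

    /** Sorts chunk names as strings, so "10" sorts before "2". */
    static List<String> lexicographic(List<String> names) {
        List<String> sorted = new ArrayList<>(names);
        sorted.sort(Comparator.naturalOrder());
        return sorted;
    }

    /** Sorts chunk names by numeric value, the order needed for merging. */
    static List<String> numeric(List<String> names) {
        List<String> sorted = new ArrayList<>(names);
        sorted.sort(Comparator.comparingInt((String s) -> Integer.parseInt(s)));
        return sorted;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("0", "1", "2", "10", "11");
        System.out.println(lexicographic(names)); // [0, 1, 10, 11, 2]
        System.out.println(numeric(names));       // [0, 1, 2, 10, 11]
    }
}
```

Merging in the first order would silently produce a corrupted file, so this comparator is worth checking whenever Cursor regenerates the merge code.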

ChunkUpload-1.html

Chunked upload

Note: all of the code was generated by AI.

<!DOCTYPE html>
<html>
<head>
  <meta charset="UTF-8">
  <title>Chunk Upload</title>
</head>
<body>
  <input type="file" id="file">
  <button onclick="upload()">Upload</button>
  <script src="https://cdn.jsdelivr.net/npm/axios/dist/axios.min.js"></script>
  <script>
    /**
     * Upload a file
     */
    async function upload() {
      const file = document.getElementById('file').files[0]; // get the file
      const chunkSize = 1024 * 1024 * 3; // 3MB
      const totalSize = file.size; // total file size
      const totalChunks = Math.ceil(totalSize / chunkSize); // total number of chunks
      const identifier = file.name + '-' + totalSize + '-' + Date.now(); // file identifier
      const filename = file.name; // file name

      for (let currentChunk = 0; currentChunk < totalChunks; currentChunk++) { // upload each chunk in turn
        const chunk = file.slice(currentChunk * chunkSize, (currentChunk + 1) * chunkSize); // get the current chunk
        const formData = new FormData(); // create the form data
        formData.append('file', chunk); // add the file chunk
        formData.append('chunkNumber', currentChunk); // add the current chunk number
        formData.append('totalChunks', totalChunks); // add the total number of chunks
        formData.append('identifier', identifier); // add the file identifier
        formData.append('filename', filename); // add the file name
        try {
          const response = await axios.post('http://localhost:19000/chunk/upload', formData); // send the upload request
          if (response.status !== 200) { // if the upload failed
            throw new Error('Upload failed'); // throw an error
          }
        } catch (error) {
          console.error(error); // log the error
          throw new Error('Upload failed'); // throw an error
        }
      }

      const formData = new FormData(); // create the form data
      formData.append('identifier', identifier); // add the file identifier
      formData.append('filename', filename); // add the file name
      formData.append('totalChunks', totalChunks); // add the total number of chunks
      try {
        const response = await axios.post('http://localhost:19000/chunk/merge', formData); // send the merge request
        if (response.status !== 200) { // if the merge failed
          throw new Error('Merge failed'); // throw an error
        }
      } catch (error) {
        console.error(error); // log the error
        throw new Error('Merge failed'); // throw an error
      }
    }
  </script>
</body>
</html>
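The chunk arithmetic in this page is the part most worth checking by hand: the chunk count is the file size divided by the chunk size, rounded up, and the last chunk is usually shorter than the rest. A sketch of the same calculation in Java (the class name, method names, and the 10 MB file size are illustrative assumptions):

```java
public class ChunkRangeSketch {

    /** Number of chunks needed for totalSize bytes: ceil(totalSize / chunkSize). */
    static long totalChunks(long totalSize, long chunkSize) {
        return (totalSize + chunkSize - 1) / chunkSize;
    }

    /** Returns {start, endExclusive} for the given chunk, clamped to the file size. */
    static long[] range(long totalSize, long chunkSize, long index) {
        long start = index * chunkSize;
        long end = Math.min(start + chunkSize, totalSize);
        return new long[] {start, end};
    }

    public static void main(String[] args) {
        long chunkSize = 3L * 1024 * 1024;  // 3 MB, the chunk size used by the page
        long totalSize = 10L * 1024 * 1024; // a hypothetical 10 MB file
        long n = totalChunks(totalSize, chunkSize);
        for (long i = 0; i < n; i++) {
            long[] r = range(totalSize, chunkSize, i);
            System.out.println("chunk " + i + ": bytes " + r[0] + " to " + (r[1] - 1));
        }
    }
}
```

In the browser, `file.slice` clamps the end offset to the file size on its own, which is why the JavaScript does not need the explicit `Math.min`.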

ChunkUpload-2.html

Chunked upload with progress bars (a per-chunk progress bar and an overall progress bar)

Note: all of the code was generated by AI.

<!DOCTYPE html>
<html>
<head>
  <meta charset="UTF-8">
  <title>Chunk Upload (with progress bars)</title>
</head>
<body>
  <input type="file" id="file">
  <button onclick="upload()">Upload</button>
  <progress id="progressBar" value="0" max="100"></progress>
  <progress id="totalProgressBar" value="0" max="100"></progress>
  <script src="https://cdn.jsdelivr.net/npm/axios/dist/axios.min.js"></script>
  <script>
    /**
     * Upload a file
     */
    async function upload() {
      const file = document.getElementById('file').files[0]; // get the file
      const chunkSize = 1024 * 1024 * 3; // 3MB
      const totalSize = file.size; // total file size
      const totalChunks = Math.ceil(totalSize / chunkSize); // total number of chunks
      const identifier = file.name + '-' + totalSize + '-' + Date.now(); // file identifier
      const filename = file.name; // file name
      let totalPercentCompleted = 0;

      for (let currentChunk = 0; currentChunk < totalChunks; currentChunk++) { // upload each chunk in turn
        const chunk = file.slice(currentChunk * chunkSize, (currentChunk + 1) * chunkSize); // get the current chunk
        const formData = new FormData(); // create the form data
        formData.append('file', chunk); // add the file chunk
        formData.append('chunkNumber', currentChunk); // add the current chunk number
        formData.append('totalChunks', totalChunks); // add the total number of chunks
        formData.append('identifier', identifier); // add the file identifier
        formData.append('filename', filename); // add the file name
        try {
          const response = await axios.post('http://localhost:19000/chunk/upload', formData, {
            onUploadProgress: function (progressEvent) {
              // progress of the current chunk
              var percentCompleted = Math.round((progressEvent.loaded * 100) / progressEvent.total);
              document.getElementById('progressBar').value = percentCompleted;
              // overall progress, counted in whole chunks
              totalPercentCompleted = Math.round(((currentChunk + 1) * 100) / totalChunks);
              document.getElementById('totalProgressBar').value = totalPercentCompleted;
            }
          }); // send the upload request
          if (response.status !== 200) { // if the upload failed
            throw new Error('Upload failed'); // throw an error
          }
        } catch (error) {
          console.error(error); // log the error
          throw new Error('Upload failed'); // throw an error
        }
      }

      const formData = new FormData(); // create the form data
      formData.append('identifier', identifier); // add the file identifier
      formData.append('filename', filename); // add the file name
      formData.append('totalChunks', totalChunks); // add the total number of chunks
      try {
        const response = await axios.post('http://localhost:19000/chunk/merge', formData); // send the merge request
        if (response.status !== 200) { // if the merge failed
          throw new Error('Merge failed'); // throw an error
        }
      } catch (error) {
        console.error(error); // log the error
        throw new Error('Merge failed'); // throw an error
      }
    }
  </script>
</body>
</html>
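For the overall bar, one way to get smoother progress than counting whole chunks is to add the fraction of the current chunk that has already been sent. A sketch of that formula in Java (illustrative names, not part of the generated page; it treats all chunks as equal in size, which is only approximately true for the last one):

```java
public class UploadProgressSketch {

    /**
     * Overall percentage, given the zero-based index of the chunk in flight
     * and how many of its bytes have been sent so far.
     */
    static int overallPercent(int currentChunk, int totalChunks, long loaded, long chunkTotal) {
        if (totalChunks <= 0) {
            return 0;
        }
        // Fraction of the current chunk already sent, between 0.0 and 1.0
        double fraction = chunkTotal > 0 ? (double) loaded / chunkTotal : 0.0;
        return (int) Math.round((currentChunk + fraction) * 100.0 / totalChunks);
    }

    public static void main(String[] args) {
        System.out.println(overallPercent(0, 4, 0, 100));   // 0
        System.out.println(overallPercent(1, 4, 50, 100));  // 38
        System.out.println(overallPercent(3, 4, 100, 100)); // 100
    }
}
```

With four chunks, a bar driven by this value moves steadily through each chunk instead of jumping in 25-point steps.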

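Cursor common problems and solutions

Output is incomplete: Cursor stops after writing part of the code. How do you make it continue?

When using Ctrl + K or Ctrl + L, the output is often incomplete and stops halfway. To make it keep writing:

# For both commands, enter "continue" or "继续" to resume the output

Ctrl + K, then enter: continue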

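Impressions after using Cursor

- Results are nondeterministic: the output for a given prompt can vary widely, and entering the same prompt again does not necessarily produce the same result.

- It cannot write a complete program in one go. The code may be incomplete and need several rounds of debugging, so even occasional use can be costly.

- It occasionally reports that too many people are using it and asks you to try again later.

- The generated code is often not general-purpose and may not drop straight into a project; most of it needs manual tweaking by a developer. In the ideal case it still reduces development time.

- Cursor does not support running and debugging code directly; you need to copy it into your own IDE to debug.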

End of article

Author: 宇宙小神特别萌

- Personal blog: www.zhengjiaao.cn

- CSDN blog: https://blog.csdn.net/qq_41772028?type=lately

- Juejin blog: https://juejin.cn/user/3227821871211390/posts

- Jianshu blog: https://www.jianshu.com/u/70d69269bd09

Code repositories:

- Gitee: https://gitee.com/zhengjiaao

- GitHub: https://github.com/zhengjiaao


Copyright belongs to the original author, 宇宙小神特别萌. If there is any infringement, please contact us for removal.