The name mdadm is short for "multiple devices admin". The command manages software RAID arrays on Linux and covers the whole lifecycle of an array: creating it, modifying it, monitoring it, and removing it.

Syntax: mdadm [options] device

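Common options

-C Create a new RAID array

-D Show detailed information about a RAID device

-A Assemble a previously created RAID array

-l Set the RAID level

-n Set the number of active devices in the array

-f Mark a member as faulty so that it can be removed

-r Remove a member from the RAID device

-a Add a member to the RAID device

-S Stop the RAID device and release all of its resources

-x Set the number of spare devices in the initial array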
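To show how these options fit together, here is a minimal dry-run sketch. The function name `build_create_cmd` is hypothetical and not part of mdadm; it only prints the command line and never touches a disk. It assumes that the device list must contain exactly as many entries as active plus spare devices, which matches the create commands used in this post.

```shell
# Dry-run helper: prints an mdadm create command instead of running it.
# build_create_cmd is a hypothetical name used only for illustration.
build_create_cmd() {
  local array=$1 level=$2 active=$3 spares=$4
  shift 4
  # Assumption: the number of listed devices equals active + spare devices.
  if [ "$#" -ne $(($active + $spares)) ]; then
    echo "expected $(($active + $spares)) devices, got $#" >&2
    return 1
  fi
  echo "mdadm -C $array -l $level -n $active -x $spares $*"
}

# Three active members plus one spare, RAID level 5.
build_create_cmd /dev/md7 5 3 1 /dev/sde1 /dev/sde2 /dev/sde3 /dev/sde4
```

Reviewing the printed command before running it as root is a cheap safeguard, because creating an array overwrites whatever was on the member partitions.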

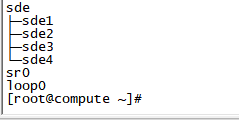

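First, create four partitions on the disk, then build a RAID 5 array from them with three active members and one spare: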

[root@compute ~]# mdadm -C /dev/md7 -n 3 -l 5 -x 1 /dev/sde{1,2,3,4}

mdadm: /dev/sde1 appears to be part of a raid array:

level=raid5 devices=3 ctime=Tue Mar 7 17:24:53 2023

mdadm: /dev/sde2 appears to be part of a raid array:

level=raid5 devices=3 ctime=Tue Mar 7 17:24:53 2023

mdadm: /dev/sde3 appears to be part of a raid array:

level=raid5 devices=3 ctime=Tue Mar 7 17:24:53 2023

mdadm: /dev/sde4 appears to be part of a raid array:

level=raid5 devices=3 ctime=Tue Mar 7 17:24:53 2023

mdadm: largest drive (/dev/sde4) exceeds size (2094080K) by more than 1%

Continue creating array? y

mdadm: Fail create md7 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md7 started.

sde 8:64 0 10G 0 disk

├─sde1 8:65 0 2G 0 part

│ └─md7 9:7 0 4G 0 raid5

├─sde2 8:66 0 2G 0 part

│ └─md7 9:7 0 4G 0 raid5

├─sde3 8:67 0 2G 0 part

│ └─md7 9:7 0 4G 0 raid5

└─sde4 8:68 0 4G 0 part

└─md7 9:7 0 4G 0 raid5

[root@compute ~]# mdadm -D /dev/md7

/dev/md7:

Version : 1.2

Creation Time : Tue Mar 7 17:29:25 2023

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Mar 7 17:29:36 2023

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : compute:7 (local to host compute)

UUID : dcdfacfb:9b2f17cd:ce176b58:9e7c56a4

Events : 18

Number Major Minor RaidDevice State

0 8 65 0 active sync /dev/sde1

1 8 66 1 active sync /dev/sde2

4 8 67 2 active sync /dev/sde3

3 8 68 - spare /dev/sde4
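The Array Size in this output follows from RAID 5 arithmetic: one member's worth of space holds parity, so the usable capacity is (active members - 1) × Used Dev Size. The spare does not count, and the 4G partition contributes only 2094080K, which is why mdadm warned that the largest drive exceeds that size by more than 1%. A small sketch of the calculation, with the hypothetical helper name `raid5_size_kib`:

```shell
# Usable RAID 5 capacity in KiB: (active members - 1) * per-member size.
# raid5_size_kib is a hypothetical helper name used only for illustration.
raid5_size_kib() {
  local members=$1 dev_kib=$2
  echo $(( ($members - 1) * $dev_kib ))
}

# Values taken from the mdadm -D output: 3 active members of 2094080 KiB each.
raid5_size_kib 3 2094080   # prints 4188160, the reported Array Size
```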

mdadm -S /dev/md7 // stop the whole array, with all of its member disks, in one step

[root@compute ~]# mdadm -C /dev/md7 -x 1 -n 3 -l 5 /dev/sdd{1..4}

mdadm: /dev/sdd1 appears to be part of a raid array:

level=raid5 devices=3 ctime=Tue Mar 7 17:25:51 2023

mdadm: /dev/sdd2 appears to be part of a raid array:

level=raid5 devices=3 ctime=Tue Mar 7 17:25:51 2023

mdadm: largest drive (/dev/sdd4) exceeds size (2094080K) by more than 1%

Continue creating array? yes

mdadm: Fail create md7 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md7 started.

└─cinder--volumes-cinder--volumes--pool 253:5 0 69G 0 lvm

sdd 8:48 0 10G 0 disk

├─sdd1 8:49 0 2G 0 part

│ └─md7 9:7 0 4G 0 raid5

├─sdd2 8:50 0 2G 0 part

│ └─md7 9:7 0 4G 0 raid5

├─sdd3 8:51 0 2G 0 part

│ └─md7 9:7 0 4G 0 raid5

└─sdd4 8:52 0 4G 0 part

└─md7 9:7 0 4G 0 raid5

sde 8:64 0 10G 0 disk

[root@compute ~]# mdadm -D /dev/md7

/dev/md7:

Version : 1.2

Creation Time : Wed Mar 8 14:52:00 2023

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Mar 8 14:52:11 2023

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : compute:7 (local to host compute)

UUID : 700d9a77:0babc26d:3b5a6768:223d47a8

Events : 18

Number Major Minor RaidDevice State

0 8 49 0 active sync /dev/sdd1

1 8 50 1 active sync /dev/sdd2

4 8 51 2 active sync /dev/sdd3

3 8 52 - spare /dev/sdd4

[root@compute ~]# mdadm /dev/md7 -f /dev/sdd1

mdadm: set /dev/sdd1 faulty in /dev/md7

[root@compute ~]# mdadm -D /dev/md7

/dev/md7:

Version : 1.2

Creation Time : Wed Mar 8 14:52:00 2023

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Mar 8 14:59:00 2023

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 66% complete

Name : compute:7 (local to host compute)

UUID : 700d9a77:0babc26d:3b5a6768:223d47a8

Events : 30

Number Major Minor RaidDevice State

3 8 52 0 spare rebuilding /dev/sdd4

1 8 50 1 active sync /dev/sdd2

4 8 51 2 active sync /dev/sdd3

0 8 49 - faulty /dev/sdd1
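After a member is marked faulty, the spare takes over and the array rebuilds, so the two lines worth watching are State and Rebuild Status. The sketch below pulls them out of `mdadm -D` text with awk. The function name `md_state` is hypothetical, and the sample input is copied from the output above rather than read from a live array.

```shell
# Reads `mdadm -D` output on stdin and prints the State line and, when the
# array is rebuilding, the Rebuild Status line.
# md_state is a hypothetical helper name used only for illustration.
md_state() {
  awk -F' : ' '
    $1 ~ /^ *State$/          { print "state=" $2 }
    $1 ~ /^ *Rebuild Status$/ { print "rebuild=" $2 }
  '
}

# Sample lines from the output above. On a live system one would instead run:
#   mdadm -D /dev/md7 | md_state
md_state <<'EOF'
State : clean, degraded, recovering
Rebuild Status : 66% complete
EOF
```

Running it repeatedly (for example in a loop with `sleep`) gives a rough progress view until the state returns to `clean`.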

[root@compute ~]# mdadm /dev/md7 -r /dev/sdd1

mdadm: hot removed /dev/sdd1 from /dev/md7

[root@compute ~]# mdadm -D /dev/md7

/dev/md7:

Version : 1.2

Creation Time : Wed Mar 8 14:52:00 2023

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Wed Mar 8 15:00:22 2023

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : compute:7 (local to host compute)

UUID : 700d9a77:0babc26d:3b5a6768:223d47a8

Events : 38

Number Major Minor RaidDevice State

3 8 52 0 active sync /dev/sdd4

1 8 50 1 active sync /dev/sdd2

4 8 51 2 active sync /dev/sdd3
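The device table at the end of each `mdadm -D` listing is the quickest way to confirm which role every member holds after a step such as the removal above. As a rough sketch, the hypothetical helper `md_members` reduces that table to one "device role" pair per line. It assumes the row layout shown in this post: numeric first column, device path in the last column.

```shell
# Reads `mdadm -D` output on stdin and prints "<device> <role>" for every
# row of the member table.
# md_members is a hypothetical helper name used only for illustration.
md_members() {
  awk '$NF ~ /^\/dev\// && $1 ~ /^[0-9]+$/ {
    role = $5                                  # first word of the role
    for (i = 6; i < NF; i++) role = role " " $i
    print $NF, role
  }'
}

# Rows copied from the listing above. On a live system one would instead run:
#   mdadm -D /dev/md7 | md_members
md_members <<'EOF'
Number Major Minor RaidDevice State
3 8 52 0 active sync /dev/sdd4
1 8 50 1 active sync /dev/sdd2
4 8 51 2 active sync /dev/sdd3
EOF
```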

[root@compute ~]# mdadm /dev/md7 -a /dev/sdd1

mdadm: added /dev/sdd1

[root@compute ~]# mdadm -D /dev/md7

/dev/md7:

Version : 1.2

Creation Time : Wed Mar 8 14:52:00 2023

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Mar 8 15:01:29 2023

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : compute:7 (local to host compute)

UUID : 700d9a77:0babc26d:3b5a6768:223d47a8

Events : 39

Number Major Minor RaidDevice State

3 8 52 0 active sync /dev/sdd4

1 8 50 1 active sync /dev/sdd2

4 8 51 2 active sync /dev/sdd3

5 8 49 - spare /dev/sdd1
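The session above walks through a full member swap: mark a member faulty, hot-remove it, then add a device back, which joins as a spare because the array already has three active members. The steps can be summarised with a dry-run sketch. The function name `swap_member_cmds` is hypothetical, and it only prints the commands so they can be reviewed before being run as root.

```shell
# Dry-run helper: prints the fail / remove / add sequence for one member.
# swap_member_cmds is a hypothetical name; nothing is executed.
swap_member_cmds() {
  local array=$1 old=$2 new=$3
  echo "mdadm $array -f $old"   # mark the old member faulty
  echo "mdadm $array -r $old"   # hot-remove it from the array
  echo "mdadm $array -a $new"   # add a device to the array
}

# Same devices as in the session above: sdd1 is failed, removed, and re-added.
swap_member_cmds /dev/md7 /dev/sdd1 /dev/sdd1
```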

Copyright belongs to the original author, 邵小. If this post infringes any rights, please contact us and it will be removed.