I'll skip the earlier packet-capture analysis and Java-layer location here. First, add the dependencies:

    <dependencies>
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka-clients</artifactId>
            <version>2.6.0</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.curator/curator-client -->
        <dependency>
            <groupId>org.apache.curator</groupId>
            <artifactId>curator-framework</artifactId>
            <version>4.2.0</version>
        </dependency>
        <dependency>
            <groupId>com.fasterxml.jackson.core</groupId>
            <artifactId>jackson-databind</artifactId>
            <version>2.9.5</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/com.google.protobuf/protobuf-java -->
        <dependency>
            <groupId>com.google.protobuf</groupId>
            <artifactId>protobuf-java</artifactId>
            <version>3.11.4</version>
        </dependency>
    </dependencies>


The following class can be used to check whether the broker IP is usable and has not been blocked:

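    import java.util.Properties;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.Producer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    public class SimpleProducer {
        public static void main(String[] args) {
            Properties props = new Properties();
            //props.put("bootstrap.servers", "192.168.44.161:9093,192.168.44.161:9094,192.168.44.161:9095");
            props.put("bootstrap.servers", "192.168.3.128:9092");
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            // acks: 0 = don't wait for any acknowledgement | 1 = leader has written the record to its log | all (-1) = all in-sync replicas have it
            props.put("acks", "1");
            // Number of automatic retries on transient send errors
            props.put("retries", 3);
            // Maximum batch size per partition in BYTES (not a record count); default 16384 (16 KB)
            props.put("batch.size", 16384);
            // How long (ms) to wait for more records before sending a batch that is not yet full
            props.put("linger.ms", 5);
            // Total memory for buffering unsent records, default 32 MB; when it is full, send() blocks for up to max.block.ms
            props.put("buffer.memory", 33554432);
            // Max time (ms) send() may block, e.g. while fetching metadata; after that a TimeoutException is reported
            props.put("max.block.ms", 3000);

            // Creating the producer also starts its background Sender (I/O) thread
            Producer<String, String> producer = new KafkaProducer<>(props);
            for (int i = 0; i < 10; i++) {
                final int n = i;
                // send() is asynchronous; the callback reports whether the broker actually accepted the record
                producer.send(new ProducerRecord<>("mytopic", Integer.toString(i), Integer.toString(i)),
                        (metadata, e) -> {
                            if (e != null) {
                                System.out.println("Send failed for " + n + ": " + e);
                            } else {
                                System.out.println("Sent: " + n + " (partition " + metadata.partition()
                                        + ", offset " + metadata.offset() + ")");
                            }
                        });
            }
            //producer.send(new ProducerRecord<>("mytopic", "1", "1"));
            //producer.send(new ProducerRecord<>("mytopic", "2", "2"));
            // close() flushes buffered records and waits for outstanding sends to complete
            producer.close();
        }
    }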
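Per the Kafka producer documentation, `batch.size` is an upper bound in bytes for one partition's batch, and `linger.ms` is how long the producer waits for more records before sending a batch that is not yet full. The sketch below is a deliberately simplified model of that "size or time, whichever comes first" rule; the class `BatchModel` and its methods are hypothetical illustrations, not Kafka's internal `RecordAccumulator`, and real batches also count per-record overhead and compression.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of per-partition producer batching:
// a batch is sent when it reaches batchSize bytes OR when lingerMs has
// elapsed since its first record. Not Kafka's actual implementation.
class BatchModel {
    private final int batchSize;  // bytes, analogous to batch.size
    private final long lingerMs;  // analogous to linger.ms
    private final List<List<String>> sent = new ArrayList<>();
    private List<String> current = new ArrayList<>();
    private int currentBytes = 0;
    private long firstRecordAt = -1;

    BatchModel(int batchSize, long lingerMs) {
        this.batchSize = batchSize;
        this.lingerMs = lingerMs;
    }

    // Adds a record at time nowMs and sends the batch if it is now full.
    void append(String value, long nowMs) {
        tick(nowMs); // an expired batch goes out before the new record joins
        if (current.isEmpty()) {
            firstRecordAt = nowMs;
        }
        current.add(value);
        currentBytes += value.getBytes(StandardCharsets.UTF_8).length;
        if (currentBytes >= batchSize) {
            send();
        }
    }

    // Advances time; sends a non-empty batch once lingerMs has elapsed.
    void tick(long nowMs) {
        if (!current.isEmpty() && nowMs - firstRecordAt >= lingerMs) {
            send();
        }
    }

    private void send() {
        sent.add(current);
        current = new ArrayList<>();
        currentBytes = 0;
        firstRecordAt = -1;
    }

    List<List<String>> sentBatches() {
        return sent;
    }

    public static void main(String[] args) {
        BatchModel m = new BatchModel(4, 5);
        m.append("ab", 0); // 2 bytes buffered
        m.append("cd", 1); // 4 bytes: sent because the batch is full
        m.append("e", 2);  // 1 byte buffered
        m.tick(7);         // 5 ms after "e" arrived: sent because linger elapsed
        System.out.println(m.sentBatches()); // prints [[ab, cd], [e]]
    }
}
```

With the producer settings above (16384 bytes, 5 ms), ten tiny records are far below the size limit, so in this model they would leave because the 5 ms linger expired rather than because a batch filled up.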


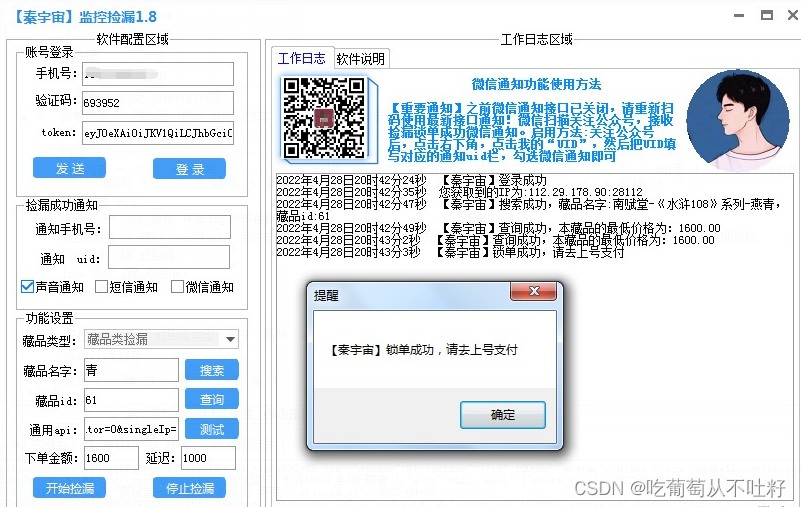

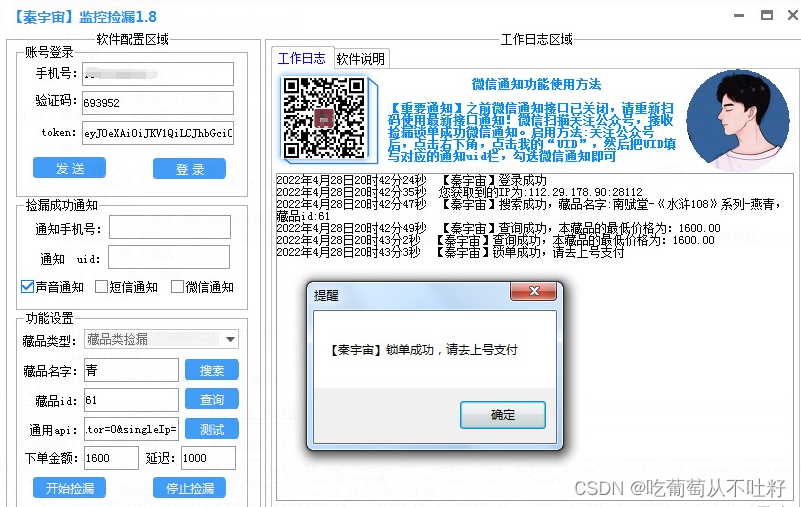
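The producer can only reveal an unreachable or blocked broker indirectly, by timing out after `max.block.ms`. A quicker pre-check, sketched below under the assumption that a plain TCP connect is enough to tell "reachable" from "blocked", is to open a socket to the `bootstrap.servers` address with a timeout. `BrokerPing.isReachable` is a hypothetical helper, not part of the Kafka client; a successful connect does not prove the broker will accept Kafka requests (advertised listeners or authentication can still fail).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether a TCP connection to host:port can be
// opened within timeoutMs. It tests only the network path, not the Kafka protocol.
class BrokerPing {
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, unknown host, etc.
            return false;
        }
    }

    public static void main(String[] args) {
        // Address taken from the bootstrap.servers setting used above
        System.out.println(isReachable("192.168.3.128", 9092, 3000));
    }
}
```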

The concrete order-locking method:

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Properties;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    public class SimpleConsumer {
        public static void main(String[] args) {
            Properties props = new Properties();
            //props.put("bootstrap.servers", "192.168.44.161:9093,192.168.44.161:9094,192.168.44.161:9095");
            props.put("bootstrap.servers", "192.168.3.128:9092");
            props.put("group.id", "gp-test-group");
            // Commit offsets automatically; the group's committed offset only advances after a commit
            props.put("enable.auto.commit", "true");
            // Auto-commit interval in ms (what the author calls the order-locking interval)
            props.put("auto.commit.interval.ms", "1000");
            // Where to start when the group has no committed offset: earliest | latest | none
            props.put("auto.offset.reset", "earliest");
            props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

            KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
            // Subscribe to the topic
            consumer.subscribe(Arrays.asList("mytopic"));
            try {
                while (true) {
                    // Wait up to 1 s for new records
                    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
                    for (ConsumerRecord<String, String> record : records) {
                        System.out.printf("offset = %d, key = %s, value = %s, partition = %d%n",
                                record.offset(), record.key(), record.value(), record.partition());
                    }
                }
            } finally {
                consumer.close();
            }
        }
    }
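The settings `enable.auto.commit`, `auto.commit.interval.ms` and `auto.offset.reset` together decide where a consumer group reads from. The sketch below is a toy, in-memory model of those rules for a single partition; `OffsetModel` and its methods are hypothetical, not the Kafka client API. It assumes auto-commit happens every N records, whereas the real client commits on a time interval during `poll()`. Two points it illustrates: `auto.offset.reset` applies only when the group has no committed offset (with `none`, the real client throws an exception), and a consumer that stops between commits re-reads the uncommitted records on restart (at-least-once delivery).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.OptionalLong;

// Toy single-partition model of consumer-group offsets (hypothetical, not the Kafka API).
class OffsetModel {
    enum Reset { EARLIEST, LATEST, NONE }

    // Start position: the committed offset if one exists; otherwise the
    // auto.offset.reset policy decides.
    static long startOffset(OptionalLong committed, Reset policy, long logStart, long logEnd) {
        if (committed.isPresent()) {
            return committed.getAsLong();
        }
        switch (policy) {
            case EARLIEST:
                return logStart;
            case LATEST:
                return logEnd;
            default:
                throw new IllegalStateException("no committed offset and auto.offset.reset=none");
        }
    }

    // Consumes offsets [start, stopAt), auto-committing after every commitEvery
    // records (simplification of the time-based interval). Returns the last
    // committed offset, i.e. the next offset to read, or empty if none was committed.
    static OptionalLong consumeUntil(long start, long stopAt, int commitEvery, List<Long> consumed) {
        OptionalLong committed = OptionalLong.empty();
        for (long offset = start; offset < stopAt; offset++) {
            consumed.add(offset);
            long next = offset + 1;
            if ((next - start) % commitEvery == 0) {
                committed = OptionalLong.of(next);
            }
        }
        return committed;
    }

    public static void main(String[] args) {
        List<Long> consumed = new ArrayList<>();
        long start = startOffset(OptionalLong.empty(), Reset.EARLIEST, 0, 10); // no commit yet: 0
        OptionalLong committed = consumeUntil(start, 7, 3, consumed);          // reads 0..6, commits 3 and 6
        long resume = startOffset(committed, Reset.EARLIEST, 0, 10);           // restart resumes at 6
        System.out.println("consumed " + consumed + ", resume at " + resume);
        // prints: consumed [0, 1, 2, 3, 4, 5, 6], resume at 6  (offset 6 is delivered twice)
    }
}
```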


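With these classes the flow has been fully analyzed; next comes the actual operation.

For the rest of the framework, feel free to ask me; the core methods are provided above.

For learning, consultation and reference only; feel free to get in touch with study questions.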

Copyright belongs to the original author 吃葡萄从不吐籽. In case of infringement, please contact us for removal.