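Apache Kafka article series

1. Kafka (2.12-3.0.0): introduction, deployment and verification, benchmarking

2. Calling the Kafka API from Java

3. Key Kafka concepts with examples

4. Kafka partitions and replicas with examples

5. The Kafka monitoring tool Kafka-Eagle: introduction and usage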


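This article covers what Kafka is for, how to deploy and verify it, basic shell operations, and benchmarking.

It assumes that ZooKeeper is already deployed and that passwordless SSH login is configured. If not, see the author's ZooKeeper column.

The article has four parts: an introduction to Kafka, environment setup, basic shell operations, and benchmarking.

I. Introduction to Kafka

1. What is Kafka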

https://kafka.apache.org/

https://kafka.apache.org/documentation/#quickstart_startserver

Kafka is an open-source streaming platform developed by the Apache Software Foundation and written in Scala and Java.

Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

Kafka combines three key capabilities so you can implement your use cases for event streaming end-to-end with a single battle-tested solution:

- To publish (write) and subscribe to (read) streams of events, including continuous import/export of your data from other systems.

- To store streams of events durably and reliably for as long as you want.

- To process streams of events as they occur or retrospectively.

And all this functionality is provided in a distributed, highly scalable, elastic, fault-tolerant, and secure manner. Kafka can be deployed on bare-metal hardware, virtual machines, and containers, and on-premises as well as in the cloud. You can choose between self-managing your Kafka environments and using fully managed services offered by a variety of vendors.

The three key capabilities are:

- Publish and subscribe

- Store

- Process

2、Kafka的应用场景

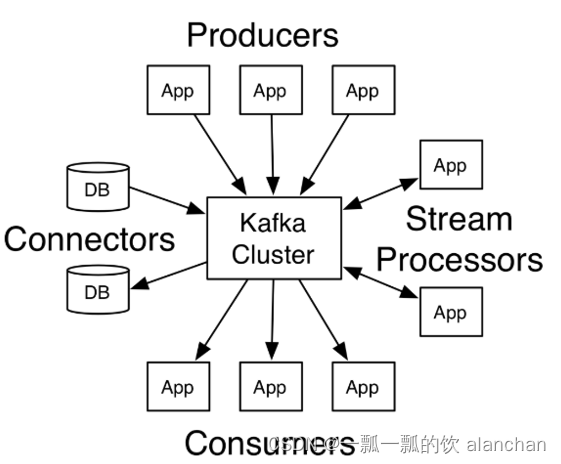

通常Apache Kafka用在两类程序:

- 建立实时数据管道,以可靠地在系统或应用程序之间获取数据

- 构建实时流应用程序,以转换或响应数据流

可以发现:

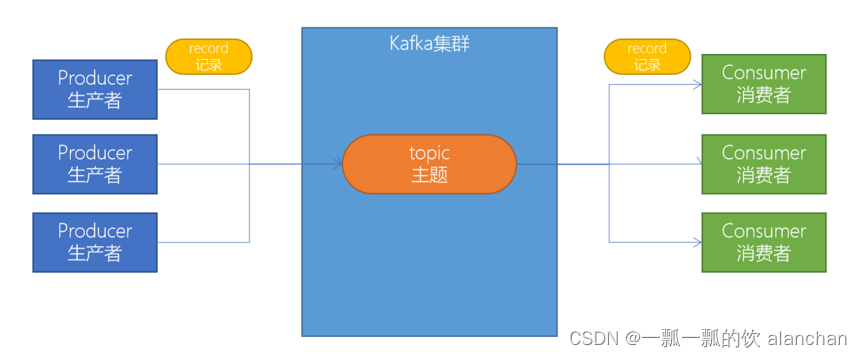

可以发现: - Producers:可以有很多的应用程序,将消息数据放入到Kafka集群中

- Consumers:可以有很多的应用程序,将消息数据从Kafka集群中拉取出来

- Connectors:Kafka的连接器可以将数据库中的数据导入到Kafka,也可以将Kafka的数据导出到数据库中

- Stream Processors:流处理器可以Kafka中拉取数据,也可以将数据写入到Kafka中

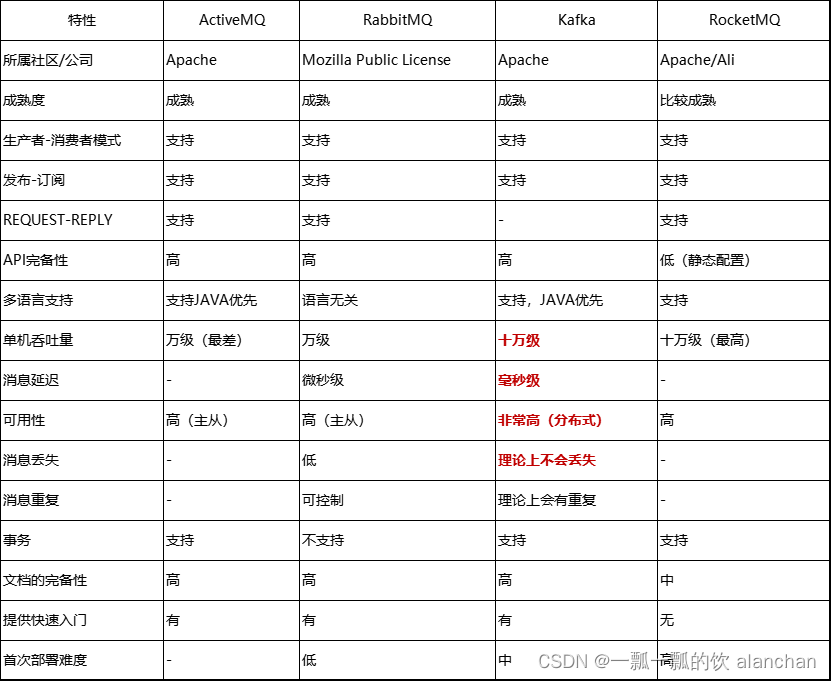

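3. Advantages of Kafka

There are many message-queue middleware products, so why choose Kafka?

In the big-data field, many important components and frameworks support Apache Kafka. In terms of maturity, community, performance, and reliability, Kafka is generally the first choice.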

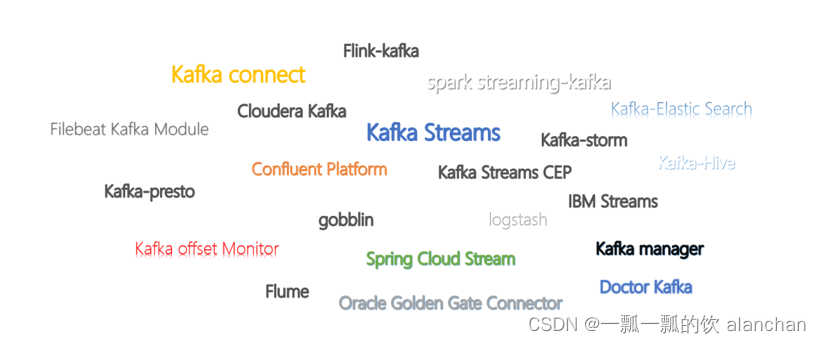

4. The Kafka ecosystem

Kafka ecosystem page: https://cwiki.apache.org/confluence/display/KAFKA/Ecosystem

5. Kafka version

This example uses kafka_2.12-3.0.0. Kafka is written mainly in Scala, so the package name carries two version numbers: 2.12 is the Scala version and 3.0.0 is the version of Kafka itself.

The release date of each version is listed at http://kafka.apache.org/downloads.
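The naming scheme can be taken apart with plain shell parameter expansion. The following is only a small sketch: `split_kafka_version` is a helper name made up for this example and is not part of Kafka.

```shell
#!/bin/sh
# Split a Kafka package name such as kafka_2.12-3.0.0 into its two versions.
# split_kafka_version is a hypothetical helper, not a Kafka tool.
split_kafka_version() {
    pkg=$1                          # e.g. kafka_2.12-3.0.0
    versions=${pkg#kafka_}          # strip the "kafka_" prefix -> 2.12-3.0.0
    scala_version=${versions%%-*}   # text before the first "-" -> 2.12
    kafka_version=${versions#*-}    # text after the first "-"  -> 3.0.0
    echo "scala=${scala_version} kafka=${kafka_version}"
}

split_kafka_version kafka_2.12-3.0.0   # prints: scala=2.12 kafka=3.0.0
```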

II. Environment setup

1. Setting up a Kafka cluster

1) Upload the Kafka package to the virtual machine and extract it

cd /usr/local/tools

tar -xvzf kafka_2.12-3.0.0.tgz -C /usr/local/bigdata/

cd /usr/local/bigdata/kafka_2.12-3.0.0

2) Edit server.properties

cd /usr/local/bigdata/kafka_2.12-3.0.0/config

vim server.properties

# ID of this broker

broker.id=0

# Directory where Kafka stores its data

log.dirs=/usr/local/bigdata/kafka_2.12-3.0.0/data

# The three ZooKeeper nodes

zookeeper.connect=server1:2118,server2:2118,server3:2118

3) Copy the configured Kafka directory to the other two servers

cd /usr/local/bigdata

scp -r kafka_2.12-3.0.0/ server2:$PWD

scp -r kafka_2.12-3.0.0/ server3:$PWD

Then change broker.id on the other two nodes to 1 and 2 respectively.

---------server2--------------

cd /usr/local/bigdata/kafka_2.12-3.0.0/config

vim server.properties

broker.id=1

--------server3--------------

cd /usr/local/bigdata/kafka_2.12-3.0.0/config

vim server.properties

broker.id=2
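Because only broker.id differs between the three nodes, the per-node settings can also be generated rather than edited by hand. The sketch below assumes the paths, host names, and ports used in this article; `render_broker_config` is a hypothetical helper, and it only prints the three settings discussed here rather than a complete server.properties.

```shell
#!/bin/sh
# Sketch: print the per-broker settings for each node.
# render_broker_config is a hypothetical helper; paths and ports are the
# ones used in this article.
render_broker_config() {
    broker_id=$1
    cat <<EOF
broker.id=${broker_id}
log.dirs=/usr/local/bigdata/kafka_2.12-3.0.0/data
zookeeper.connect=server1:2118,server2:2118,server3:2118
EOF
}

# server1 -> broker.id=0, server2 -> broker.id=1, server3 -> broker.id=2
id=0
for host in server1 server2 server3; do
    echo "# ${host}"
    render_broker_config "${id}"
    id=$((id + 1))
done
```

The printed values would still have to be merged into config/server.properties on each host.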

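4) Configure the KAFKA_HOME environment variable

vim /etc/profile

export KAFKA_HOME=/usr/local/bigdata/kafka_2.12-3.0.0

export PATH=$PATH:${KAFKA_HOME}/bin

# Distribute the file to the other nodes

scp /etc/profile server2:/etc/profile

scp /etc/profile server3:/etc/profile

# Load the environment variables on every node

source /etc/profile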

5) Start the servers

# Start ZooKeeper

nohup bin/zookeeper-server-start.sh config/zookeeper.properties &

# Start Kafka; this must be done on every node in the cluster

cd /usr/local/bigdata/kafka_2.12-3.0.0/bin

nohup /usr/local/bigdata/kafka_2.12-3.0.0/bin/kafka-server-start.sh /usr/local/bigdata/kafka_2.12-3.0.0/config/server.properties &

# Check that the Kafka cluster started successfully; jps can also be used

kafka-topics.sh --bootstrap-server server1:9092 --list

kafka-topics.sh --bootstrap-server server2:9092 --list

kafka-topics.sh --bootstrap-server server3:9092 --list

[alanchan@server1 onekeystart]$ jps

813 Kafka

# Delete a topic (and the data in it)

kafka-topics.sh --delete --topic test --bootstrap-server server1:9092

[alanchan@server3 bin]$ kafka-topics.sh --delete --topic test --bootstrap-server server1:9092

[alanchan@server3 bin]$

# Right after the first successful start there should be no topics yet

[alanchan@server2 bin]$ kafka-topics.sh --bootstrap-server server3:9092 --list

__consumer_offsets

metrics

test

# Stop the service

kafka-server-stop.sh

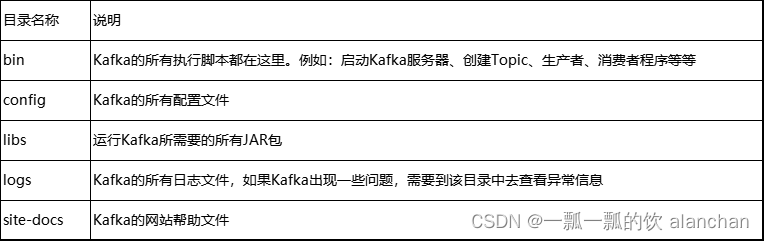

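2. Directory structure

3. One-command start/stop script for Kafka

To make it easy to start and stop the whole Kafka cluster, we can write a shell script.

Running the script once starts or stops Kafka on every node.

1. On server1, create the /usr/local/bigdata/kafka_2.12-3.0.0/onekeystart directory

mkdir -p /usr/local/bigdata/kafka_2.12-3.0.0/onekeystart

cd /usr/local/bigdata/kafka_2.12-3.0.0/onekeystart

2. Write the kafkaCluster.sh script

vim kafkaCluster.sh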

#!/bin/sh
case $1 in
"start"){
  for host in server1 server2 server3
  do
    ssh $host "source /etc/profile; nohup ${KAFKA_HOME}/bin/kafka-server-start.sh ${KAFKA_HOME}/config/server.properties > /dev/null 2>&1 &"
    echo "$host kafka is running..."
    sleep 1.5s
  done
};;
"stop"){
  for host in server1 server2 server3
  do
    ssh $host "source /etc/profile; nohup ${KAFKA_HOME}/bin/kafka-server-stop.sh > /dev/null 2>&1 &"
    echo "$host kafka is stopping..."
    sleep 1.5s
  done
};;
esac

3. Make kafkaCluster.sh executable

chmod u+x kafkaCluster.sh

# For a non-root user, also give that user ownership of the directory

chown -R alanchan:root /usr/local/bigdata/kafka_2.12-3.0.0/onekeystart

4. Verify one-command start and stop

cd /usr/local/bigdata/kafka_2.12-3.0.0/onekeystart

./kafkaCluster.sh start

./kafkaCluster.sh stop

[alanchan@server1 onekeystart]$ jps

813 Kafka

III. Basic shell operations

1. Create a topic

All messages in Kafka are stored in topics, so a topic must exist before messages can be produced to Kafka.

# Create a topic named test with 1 partition and 1 replica

kafka-topics.sh --create --bootstrap-server server1:9092 --topic test --partitions 1 --replication-factor 1

[alanchan@server1 bin]$ kafka-topics.sh --create --bootstrap-server server1:9092 --topic test --partitions 1 --replication-factor 1

Created topic test.

# List the topics currently in Kafka

kafka-topics.sh --list --bootstrap-server server1:9092

[alanchan@server2 bin]$ kafka-topics.sh --bootstrap-server server1:9092 --list

test

2. Produce messages to Kafka

Use Kafka's built-in console producer to send a few messages to the test topic.

kafka-console-producer.sh --broker-list server1:9092 --topic test

[alanchan@server1 bin]$ kafka-console-producer.sh --broker-list server1:9092 --topic test

>i am testing

>;

>quit

3. Consume messages from Kafka

Use the following command to consume the messages in the test topic.

kafka-console-consumer.sh --bootstrap-server server1:9092 --topic test --from-beginning

[alanchan@server2 bin]$ kafka-console-consumer.sh --bootstrap-server server1:9092 --topic test --from-beginning

i am testing

;

quit

4、使用Kafka Tools操作Kafka

下載地址:https://www.kafkatool.com/download.html

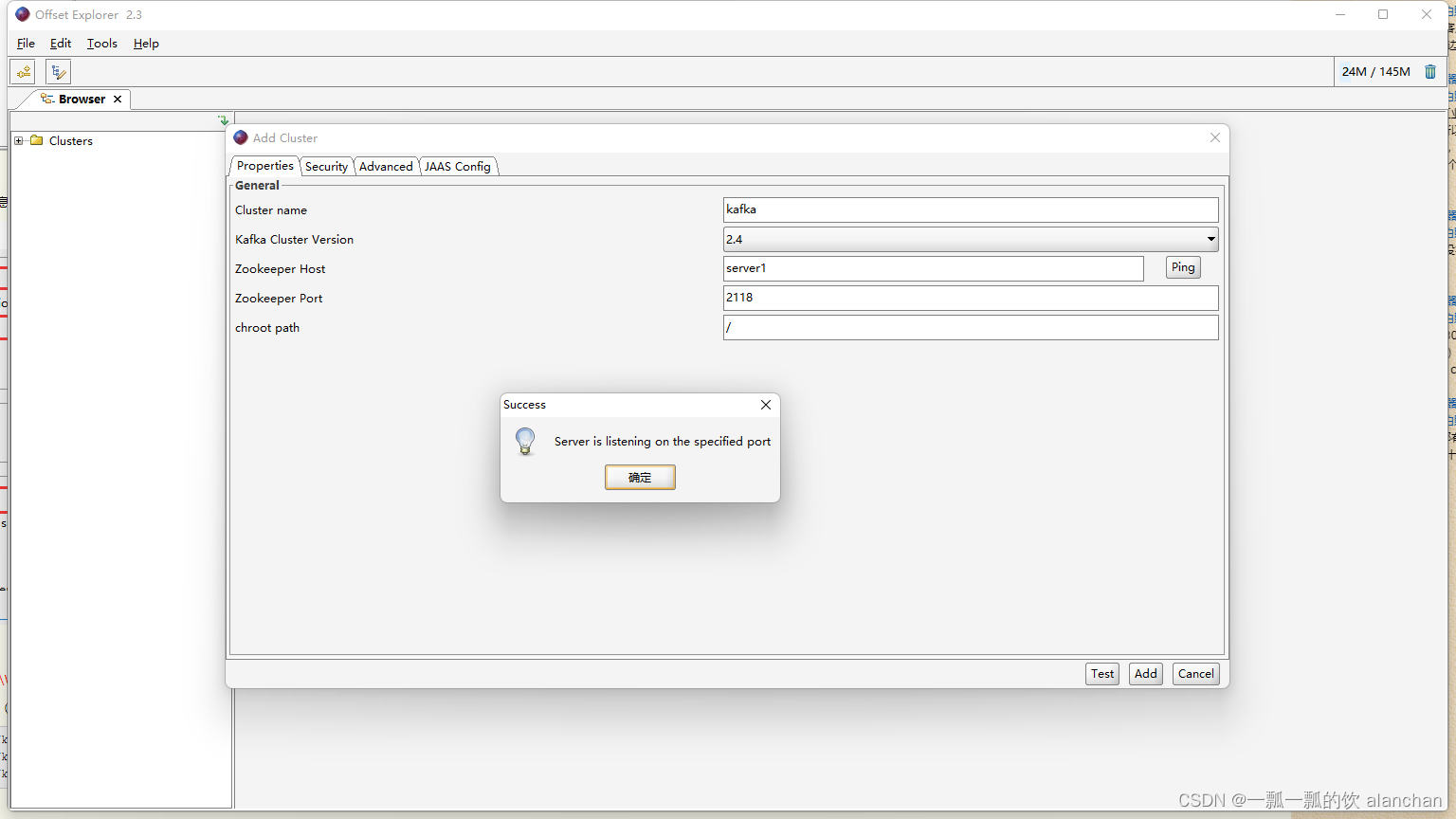

1)、连接Kafka集群

安装Kafka Tools后启动Kafka

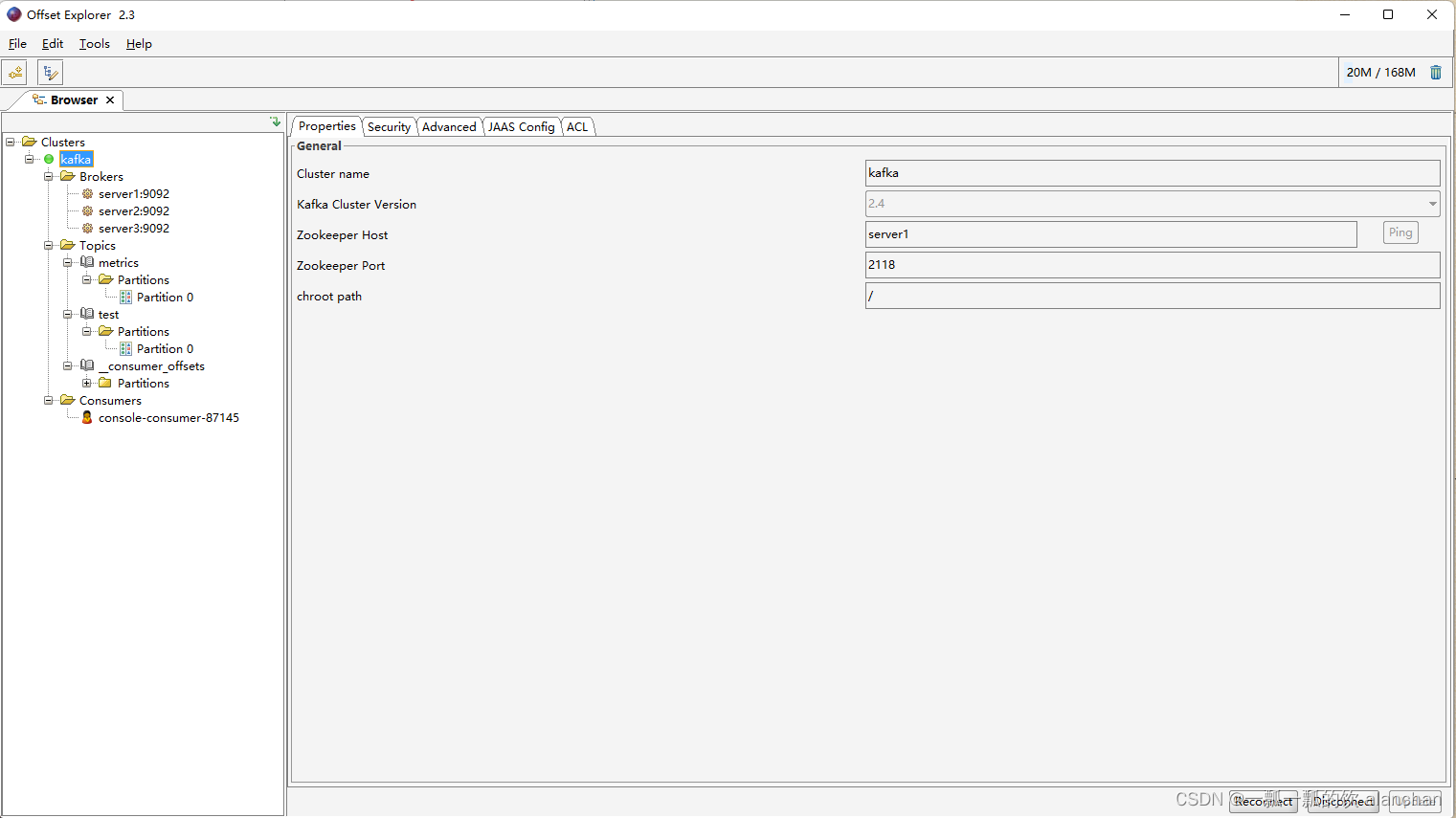

2)、鏈接成功

具體使用參考:https://www.cnblogs.com/miracle-luna/p/11299345.html

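IV. Kafka benchmarking

1. Benchmarking

Benchmark testing measures and evaluates the performance of software. It shows the performance level of the software and hardware under test, mainly the execution time, transfer rate, throughput, and resource usage of a workload.

1) Benchmark with 1 partition and 1 replica

Test steps:

- Start the Kafka cluster

- Create a topic named benchmark with 1 partition and 1 replica

- Run the producer and consumer benchmark programs

- Observe the results

1. Create the topic

cd /usr/local/bigdata/kafka_2.12-3.0.0/bin

kafka-topics.sh --create --bootstrap-server server1:9092 --topic benchmark --partitions 1 --replication-factor 1

[alanchan@server3 bin]$ kafka-topics.sh --create --bootstrap-server server1:9092 --topic benchmark --partitions 1 --replication-factor 1

Created topic benchmark.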

2. Producer benchmark

The amount of test data depends on the environment; this example uses 5 million records.

cd /usr/local/bigdata/kafka_2.12-3.0.0/bin

kafka-producer-perf-test.sh --topic benchmark --num-records 5000000 --throughput -1 --record-size 1000 --producer-props bootstrap.servers=server1:9092,server2:9092,server3:9092 acks=1

Parameters of kafka-producer-perf-test.sh:

--topic: name of the topic

--num-records: total number of records to produce (5,000,000 in this example)

--throughput: throughput limit in records/sec, used for throttling (-1 means no limit)

--record-size: size of each record in bytes

--producer-props bootstrap.servers=server1:9092,server2:9092,server3:9092 acks=1: the Kafka cluster addresses and the ACK mode
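To show how these options fit together, the sketch below assembles the same command from variables, so the record count or record size can be changed in one place. The variable and function names are chosen for this example only, and the command is printed rather than executed.

```shell
#!/bin/sh
# Sketch: build the producer benchmark command from variables.
# Names below are illustrative; the command is only printed, not run.
TOPIC=benchmark
NUM_RECORDS=5000000     # --num-records: total number of records to send
THROUGHPUT=-1           # --throughput: -1 disables throttling
RECORD_SIZE=1000        # --record-size: bytes per record
BROKERS=server1:9092,server2:9092,server3:9092

build_producer_perf_cmd() {
    echo "kafka-producer-perf-test.sh --topic ${TOPIC}" \
         "--num-records ${NUM_RECORDS} --throughput ${THROUGHPUT}" \
         "--record-size ${RECORD_SIZE}" \
         "--producer-props bootstrap.servers=${BROKERS} acks=1"
}

build_producer_perf_cmd
```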

[alanchan@server1 bin]$ kafka-producer-perf-test.sh --topic benchmark --num-records 5000000 --throughput -1 --record-size 1000 --producer-props bootstrap.servers=server1:9092,server2:9092,server3:9092 acks=1

245671 records sent, 49134.2 records/sec (46.86 MB/sec), 33.9 ms avg latency, 454.0 ms max latency.

325562 records sent, 65112.4 records/sec (62.10 MB/sec), 0.6 ms avg latency, 10.0 ms max latency.

323099 records sent, 64619.8 records/sec (61.63 MB/sec), 0.6 ms avg latency, 14.0 ms max latency.

322463 records sent, 64492.6 records/sec (61.50 MB/sec), 0.9 ms avg latency, 30.0 ms max latency.

318373 records sent, 63674.6 records/sec (60.72 MB/sec), 0.6 ms avg latency, 15.0 ms max latency.

320421 records sent, 64084.2 records/sec (61.12 MB/sec), 4.5 ms avg latency, 143.0 ms max latency.

325321 records sent, 65064.2 records/sec (62.05 MB/sec), 0.9 ms avg latency, 47.0 ms max latency.

326565 records sent, 65313.0 records/sec (62.29 MB/sec), 0.6 ms avg latency, 15.0 ms max latency.

325938 records sent, 65187.6 records/sec (62.17 MB/sec), 0.6 ms avg latency, 16.0 ms max latency.

322950 records sent, 64590.0 records/sec (61.60 MB/sec), 0.6 ms avg latency, 13.0 ms max latency.

[2023-01-11 08:23:49,368] WARN [Producer clientId=producer-1] Got error produce response with correlation id 467082 on topic-partition benchmark-0, retrying (2147483646 attempts left). Error: NETWORK_EXCEPTION. Error Message: Disconnected from node 0 (org.apache.kafka.clients.producer.internals.Sender)

[2023-01-11 08:23:49,368] WARN [Producer clientId=producer-1] Received invalid metadata error in produce request on partition benchmark-0 due to org.apache.kafka.common.errors.NetworkException: Disconnected from node 0. Going to request metadata update now (org.apache.kafka.clients.producer.internals.Sender)

(The same pair of warnings repeats at 08:23:49,369 for correlation ids 467083 to 467086.)

7879 records sent, 129.6 records/sec (0.12 MB/sec), 8.2 ms avg latency, 60000.0 ms max latency.

org.apache.kafka.clients.producer.BufferExhaustedException: Failed to allocate memory within the configured max blocking time 60000 ms.

[2023-01-11 08:24:19,502] WARN [Producer clientId=producer-1] Got error produce response with correlation id 467090 on topic-partition benchmark-0, retrying (2147483645 attempts left). Error: NETWORK_EXCEPTION. Error Message: Disconnected from node 0 (org.apache.kafka.clients.producer.internals.Sender)

[2023-01-11 08:24:19,502] WARN [Producer clientId=producer-1] Received invalid metadata error in produce request on partition benchmark-0 due to org.apache.kafka.common.errors.NetworkException: Disconnected from node 0. Going to request metadata update now (org.apache.kafka.clients.producer.internals.Sender)

(The same pair of warnings repeats at 08:24:19,502 and 08:24:19,503 for correlation ids 467091 to 467094.)

1 records sent, 0.1 records/sec (0.00 MB/sec), 79514.0 ms avg latency, 79514.0 ms max latency.

332549 records sent, 66509.8 records/sec (63.43 MB/sec), 7846.8 ms avg latency, 79530.0 ms max latency.

322133 records sent, 64426.6 records/sec (61.44 MB/sec), 1.2 ms avg latency, 70.0 ms max latency.

326821 records sent, 65364.2 records/sec (62.34 MB/sec), 0.6 ms avg latency, 11.0 ms max latency.

325363 records sent, 65072.6 records/sec (62.06 MB/sec), 0.8 ms avg latency, 36.0 ms max latency.

306613 records sent, 61322.6 records/sec (58.48 MB/sec), 65.0 ms avg latency, 742.0 ms max latency.

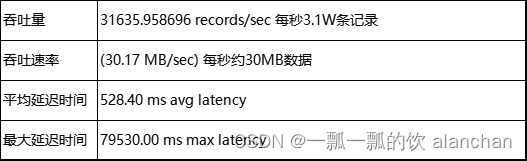

5000000 records sent, 31635.958696 records/sec (30.17 MB/sec), 528.40 ms avg latency, 79530.00 ms max latency, 1 ms 50th, 6 ms 95th, 514 ms 99th, 79492 ms 99.9th.

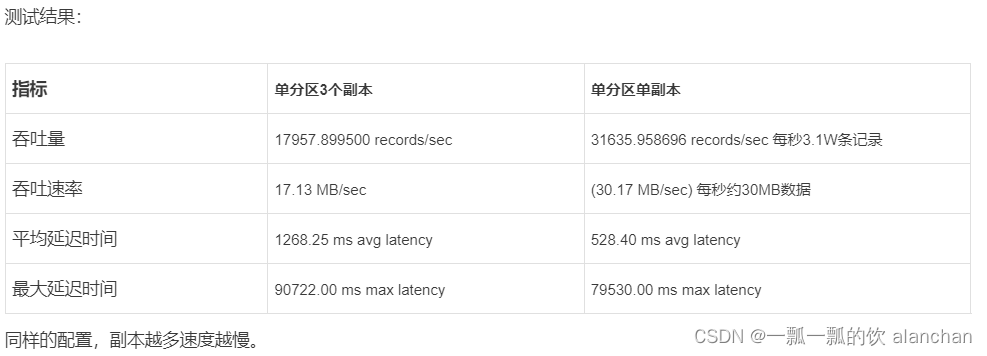

Test results:

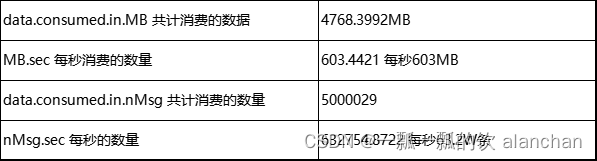
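The MB/sec figure in the final summary line follows directly from records/sec and the record size. As a rough cross-check, the sketch below extracts records/sec from that line and recomputes MB/sec, assuming the 1000-byte --record-size used above and 1 MB = 1048576 bytes; `mb_per_sec` is a helper name invented for this example.

```shell
#!/bin/sh
# Sketch: recompute MB/sec from the records/sec value in the summary line.
# Assumes 1000-byte records and 1 MB = 1048576 bytes.
summary='5000000 records sent, 31635.958696 records/sec (30.17 MB/sec), 528.40 ms avg latency, 79530.00 ms max latency, 1 ms 50th, 6 ms 95th, 514 ms 99th, 79492 ms 99.9th.'

mb_per_sec() {
    # $1 = summary line, $2 = record size in bytes
    # Field 4 of the summary line is the records/sec value.
    echo "$1" | awk -v size="$2" '{ printf "%.2f\n", $4 * size / 1048576 }'
}

mb_per_sec "$summary" 1000   # prints: 30.17
```

The result matches the 30.17 MB/sec reported by the tool.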

3. Consumer benchmark

cd /usr/local/bigdata/kafka_2.12-3.0.0/bin

kafka-consumer-perf-test.sh --broker-list server1:9092,server2:9092,server3:9092 --topic benchmark --fetch-size 1048576 --messages 5000000

Parameters of kafka-consumer-perf-test.sh:

--broker-list: the Kafka cluster addresses

--topic: name of the topic

--fetch-size: amount of data to fetch per request

--messages: total number of messages to consume

[alanchan@server1 bin]$ kafka-consumer-perf-test.sh --broker-list server1:9092,server2:9092,server3:9092 --topic benchmark --fetch-size 1048576 --messages 5000000

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2023-01-11 08:29:49:764, 2023-01-11 08:29:57:666, 4768.3992, 603.4421, 5000029, 632754.8722, 464, 7438, 641.0862, 672227.6150

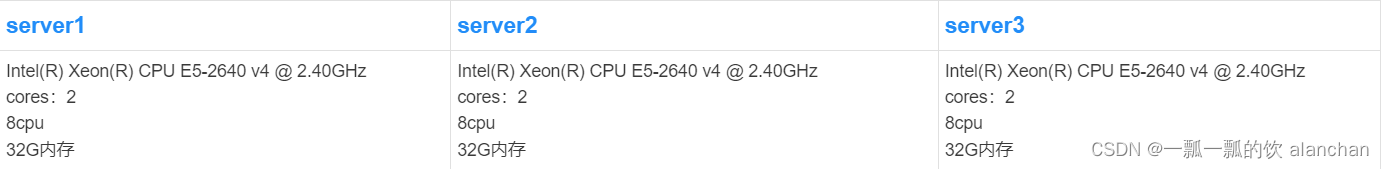
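The MB.sec and nMsg.sec columns of the consumer report are simply the consumed volume divided by the elapsed time between start.time and end.time. The sketch below recomputes both from the row shown above; it assumes both timestamps fall on the same day, and `consumer_rates` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: recompute MB.sec and nMsg.sec from the consumer report row.
# Assumes start.time and end.time are on the same day.
row='2023-01-11 08:29:49:764, 2023-01-11 08:29:57:666, 4768.3992, 603.4421, 5000029, 632754.8722, 464, 7438, 641.0862, 672227.6150'

consumer_rates() {
    echo "$1" | awk -F', ' '
        # Convert a timestamp of the form date, space, HH:MM:SS:mmm
        # into seconds since midnight.
        function secs(ts,    p, t) {
            split(ts, p, " "); split(p[2], t, ":")
            return t[1] * 3600 + t[2] * 60 + t[3] + t[4] / 1000
        }
        {
            elapsed = secs($2) - secs($1)
            printf "elapsed=%.3fs MB.sec=%.4f nMsg.sec=%.4f\n", elapsed, $3 / elapsed, $5 / elapsed
        }'
}

consumer_rates "$row"
# prints: elapsed=7.902s MB.sec=603.4421 nMsg.sec=632754.8722
```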

2) Benchmark with 3 partitions and 1 replica

Virtual machines under test:

1. Create the topic

kafka-topics.sh --create --bootstrap-server server1:9092 --topic benchmark2 --partitions 3 --replication-factor 1

[alanchan@server2 bin]$ kafka-topics.sh --create --bootstrap-server server1:9092 --topic benchmark2 --partitions 3 --replication-factor 1

Created topic benchmark2.

2. Producer benchmark

kafka-producer-perf-test.sh --topic benchmark2 --num-records 5000000 --throughput -1 --record-size 1000 --producer-props bootstrap.servers=server1:9092,server2:9092,server3:9092 acks=1

[alanchan@server2 bin]$ kafka-producer-perf-test.sh --topic benchmark2 --num-records 5000000 --throughput -1 --record-size 1000 --producer-props bootstrap.servers=server1:9092,server2:9092,server3:9092 acks=1

257548 records sent, 51509.6 records/sec (49.12 MB/sec), 8.7 ms avg latency, 458.0 ms max latency.

300945 records sent, 60189.0 records/sec (57.40 MB/sec), 0.6 ms avg latency, 24.0 ms max latency.

283994 records sent, 56798.8 records/sec (54.17 MB/sec), 1.2 ms avg latency, 73.0 ms max latency.

284728 records sent, 56945.6 records/sec (54.31 MB/sec), 0.8 ms avg latency, 35.0 ms max latency.

284064 records sent, 56812.8 records/sec (54.18 MB/sec), 0.9 ms avg latency, 49.0 ms max latency.

292346 records sent, 58469.2 records/sec (55.76 MB/sec), 0.6 ms avg latency, 15.0 ms max latency.

277664 records sent, 55532.8 records/sec (52.96 MB/sec), 9.5 ms avg latency, 241.0 ms max latency.

153448 records sent, 15032.1 records/sec (14.34 MB/sec), 73.2 ms avg latency, 7609.0 ms max latency.

324281 records sent, 64856.2 records/sec (61.85 MB/sec), 748.0 ms avg latency, 7609.0 ms max latency.

285627 records sent, 57125.4 records/sec (54.48 MB/sec), 16.0 ms avg latency, 336.0 ms max latency.

280778 records sent, 56155.6 records/sec (53.55 MB/sec), 57.4 ms avg latency, 715.0 ms max latency.

68746 records sent, 4559.4 records/sec (4.35 MB/sec), 0.8 ms avg latency, 14076.0 ms max latency.

307633 records sent, 61526.6 records/sec (58.68 MB/sec), 1495.1 ms avg latency, 14084.0 ms max latency.

286165 records sent, 57233.0 records/sec (54.58 MB/sec), 1.7 ms avg latency, 99.0 ms max latency.

290480 records sent, 58096.0 records/sec (55.40 MB/sec), 0.8 ms avg latency, 45.0 ms max latency.

300415 records sent, 60083.0 records/sec (57.30 MB/sec), 0.7 ms avg latency, 36.0 ms max latency.

287411 records sent, 57482.2 records/sec (54.82 MB/sec), 0.6 ms avg latency, 20.0 ms max latency.

285555 records sent, 57111.0 records/sec (54.47 MB/sec), 0.6 ms avg latency, 21.0 ms max latency.

5000000 records sent, 46334.049967 records/sec (44.19 MB/sec), 148.40 ms avg latency, 14084.00 ms max latency, 1 ms 50th, 46 ms 95th, 7316 ms 99th, 14022 ms 99.9th.

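In this run the 3-partition topic reached a higher overall producer throughput than the single-partition topic (about 46,334 versus 31,636 records/sec). Both runs contain long stalls, including the NETWORK_EXCEPTION retries in the single-partition run, so the comparison is indicative rather than conclusive.

3. Consumer benchmark

kafka-consumer-perf-test.sh --broker-list server1:9092,server2:9092,server3:9092 --topic benchmark2 --fetch-size 1048576 --messages 5000000

[alanchan@server2 bin]$ kafka-consumer-perf-test.sh --broker-list server1:9092,server2:9092,server3:9092 --topic benchmark2 --fetch-size 1048576 --messages 5000000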

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2023-01-11 08:54:15:600, 2023-01-11 08:54:25:683, 4768.3716, 472.9120, 5000000, 495884.1615, 472, 9611, 496.1369, 520237.2282

3) Benchmark with 1 partition and 3 replicas

1. Create the topic

kafka-topics.sh --create --bootstrap-server server1:9092 --topic benchmark3 --partitions 1 --replication-factor 3

[alanchan@server3 bin]$ kafka-topics.sh --create --bootstrap-server server1:9092 --topic benchmark3 --partitions 1 --replication-factor 3

Created topic benchmark3.

2. Producer benchmark

kafka-producer-perf-test.sh --topic benchmark3 --num-records 5000000 --throughput -1 --record-size 1000 --producer-props bootstrap.servers=server1:9092,server2:9092,server3:9092 acks=1

5000000 records sent, 17957.899500 records/sec (17.13 MB/sec), 1268.25 ms avg latency, 90722.00 ms max latency, 1 ms 50th, 416 ms 95th, 89903 ms 99th, 90696 ms 99.9th.
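To put the three producer runs side by side, the sketch below relates each overall records/sec figure to the 1-partition, 1-replica baseline. The numbers are copied from the summary lines in this article; `compare_runs` is a name made up for this example. These are single runs that include stalls, so the ratios are only a rough indication.

```shell
#!/bin/sh
# Sketch: compare overall producer throughput against the first run.
# The records/sec values are copied from the summary lines in this article.
compare_runs() {
    awk '
        NR == 1 { base = $2 }   # first row is the baseline
        { printf "%-10s %12.2f records/sec  x%.2f\n", $1, $2, $2 / base }
    ' <<EOF
benchmark  31635.958696
benchmark2 46334.049967
benchmark3 17957.899500
EOF
}

compare_runs
```

In these runs the 3-partition topic comes out at about 1.46 times the baseline, and the 3-replica topic at about 0.57 times.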

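3. Consumer benchmark

This completes the introduction, deployment, verification, and benchmarking of Kafka.

Copyright belongs to the original author, 一瓢一瓢的饮 alanchan. In case of infringement, please contact us for removal.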