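Recently, Spring Cloud Gateway in our test environment suddenly started throwing an exception. I'm writing it down here, so let's get straight to the point.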

1. Background

Spring Cloud Gateway in the test environment hit the following exception:

DataBufferLimitException: Exceeded limit on max bytes to buffer : 262144 (the maximum number of bytes allowed in the buffer was exceeded)

At first glance the problem looked simple: just enlarge the buffer through configuration. So I added the following to application.yml:

```yaml
# Set the in-memory buffer limit to 2 MB
spring:
  codec:
    max-in-memory-size: 2MB
```

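But the problem persisted. Debugging showed that the configured max-in-memory-size was indeed applied while the application initialized at startup. However, when a business request came in, the value received for this parameter was null, and maxInMemorySize still fell back to the default (256 KB).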

So what now? The only way forward was to dig into the source code.

2. Analyzing the source code

The exception log points to where the exception is thrown.

Further investigation showed that the problem lay in how our custom filter read the request body. The code is as follows:

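Judging from the explanation that follows, the filter most likely built its `ServerRequest` from `HandlerStrategies.withDefaults()`. The sketch below is a hedged reconstruction of that pattern, not necessarily the author's exact code; the class `DefaultReadersBodyReader` is hypothetical and exists only for illustration.

```java
import java.util.List;

import org.springframework.http.codec.HttpMessageReader;
import org.springframework.web.reactive.function.server.HandlerStrategies;
import org.springframework.web.reactive.function.server.ServerRequest;
import org.springframework.web.server.ServerWebExchange;
import reactor.core.publisher.Mono;

// Hypothetical reconstruction of the problematic pattern (not the author's exact code).
public class DefaultReadersBodyReader {

    static Mono<String> readBody(ServerWebExchange exchange) {
        // HandlerStrategies.withDefaults() builds a brand-new strategies object with
        // default codecs. It is not the Spring-managed ServerCodecConfigurer, so
        // spring.codec.max-in-memory-size is never applied to these readers.
        List<HttpMessageReader<?>> readers = HandlerStrategies.withDefaults().messageReaders();
        ServerRequest serverRequest = ServerRequest.create(exchange, readers);
        // With these readers, bodies above the default 256 KB limit can fail
        // with DataBufferLimitException.
        return serverRequest.bodyToMono(String.class);
    }
}
```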

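Because HandlerStrategies.withDefaults() creates a new object on every call instead of using the object injected by Spring, it always carries the default settings, so our configuration never takes effect.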
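To see the difference directly, a small sketch can compare the limit carried by the `String` reader from `HandlerStrategies.withDefaults()` with the one from a `ServerCodecConfigurer` that has been given a 2 MB limit, which roughly mimics what `spring.codec.max-in-memory-size: 2MB` does to the Spring-managed bean. It assumes Spring Framework 5.2 or later (where `DecoderHttpMessageReader.getDecoder()` and `AbstractDataBufferDecoder.getMaxInMemorySize()` are available); the class `CodecLimitCheck` and its methods are hypothetical helpers.

```java
import java.util.List;

import org.springframework.core.codec.Decoder;
import org.springframework.core.codec.StringDecoder;
import org.springframework.http.codec.DecoderHttpMessageReader;
import org.springframework.http.codec.HttpMessageReader;
import org.springframework.http.codec.ServerCodecConfigurer;
import org.springframework.web.reactive.function.server.HandlerStrategies;

// Hypothetical helper: reports the buffer limit of the first String decoder in a reader list.
public class CodecLimitCheck {

    static int stringReaderLimit(List<HttpMessageReader<?>> readers) {
        for (HttpMessageReader<?> reader : readers) {
            if (reader instanceof DecoderHttpMessageReader) {
                Decoder<?> decoder = ((DecoderHttpMessageReader<?>) reader).getDecoder();
                if (decoder instanceof StringDecoder) {
                    return ((StringDecoder) decoder).getMaxInMemorySize();
                }
            }
        }
        throw new IllegalStateException("no StringDecoder found");
    }

    // Readers from a freshly built HandlerStrategies: no custom limit is ever applied.
    static int defaultLimit() {
        return stringReaderLimit(HandlerStrategies.withDefaults().messageReaders());
    }

    // Readers from a configurer with an explicit limit, similar in spirit to what
    // Spring Boot applies to the injected ServerCodecConfigurer from the property.
    static int configuredLimit(int maxInMemorySize) {
        ServerCodecConfigurer configurer = ServerCodecConfigurer.create();
        configurer.defaultCodecs().maxInMemorySize(maxInMemorySize);
        return stringReaderLimit(configurer.getReaders());
    }

    public static void main(String[] args) {
        System.out.println("withDefaults(): " + defaultLimit());
        System.out.println("configured:     " + configuredLimit(2 * 1024 * 1024));
    }
}
```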

3. Solution

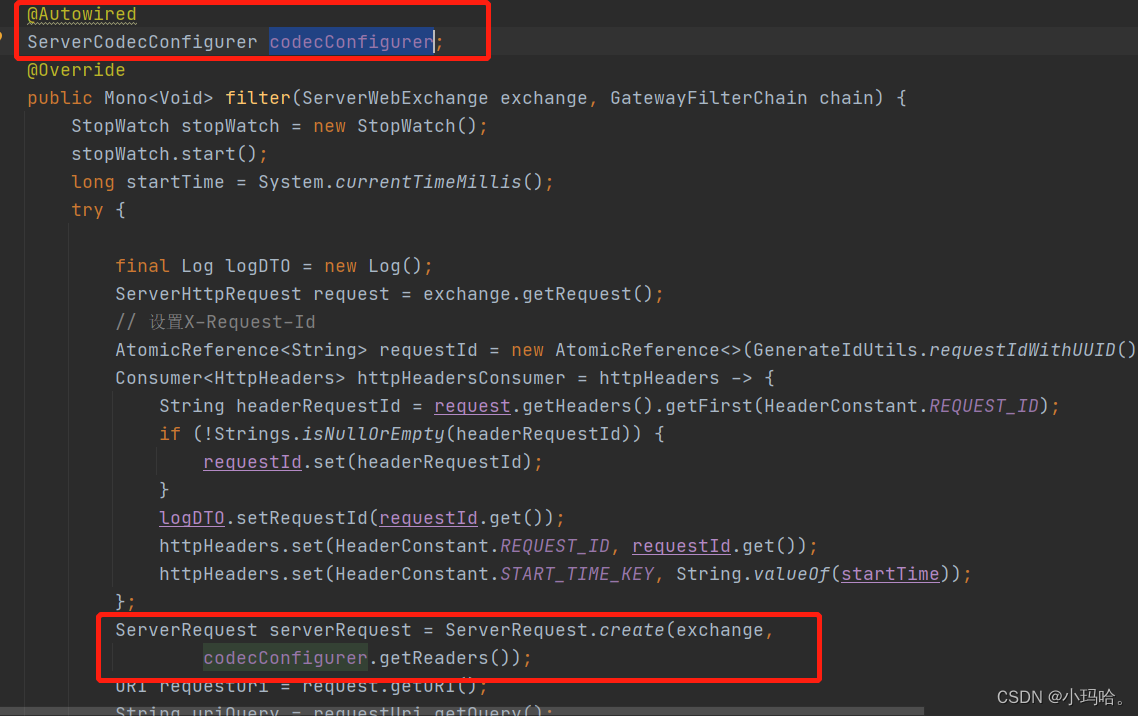

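Inject ServerCodecConfigurer into our custom filter and obtain the message readers from it. The readers obtained this way carry the byte limit for the buffer that we configured in application.yml.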
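Stripped of the logging logic, the core of the fix is small. A minimal sketch, assuming Spring WebFlux is on the classpath (as it is with Spring Cloud Gateway); `ConfiguredBodyReader` is a hypothetical class, and it uses constructor injection instead of field injection, which makes the dependency explicit and easy to supply in tests.

```java
import org.springframework.http.codec.ServerCodecConfigurer;
import org.springframework.stereotype.Component;
import org.springframework.web.reactive.function.server.ServerRequest;
import org.springframework.web.server.ServerWebExchange;
import reactor.core.publisher.Mono;

// Hypothetical helper: reads the request body with the readers of the
// Spring-managed ServerCodecConfigurer, so spring.codec.max-in-memory-size applies.
@Component
public class ConfiguredBodyReader {

    private final ServerCodecConfigurer codecConfigurer;

    // Spring injects the same ServerCodecConfigurer bean that Spring Boot configured.
    public ConfiguredBodyReader(ServerCodecConfigurer codecConfigurer) {
        this.codecConfigurer = codecConfigurer;
    }

    public Mono<String> readBody(ServerWebExchange exchange) {
        ServerRequest serverRequest = ServerRequest.create(exchange, codecConfigurer.getReaders());
        return serverRequest.bodyToMono(String.class);
    }
}
```

Reading the body this way consumes it, so a real filter still has to hand downstream filters a request whose body is replayed, for example through a `ServerHttpRequestDecorator`.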

The full code:


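```java
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Consumer;

import com.google.common.base.Strings;
import io.netty.buffer.UnpooledByteBufAllocator;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.cloud.gateway.filter.GatewayFilterChain;
import org.springframework.cloud.gateway.filter.GlobalFilter;
import org.springframework.core.Ordered;
import org.springframework.core.io.buffer.DataBuffer;
import org.springframework.core.io.buffer.NettyDataBufferFactory;
import org.springframework.http.HttpHeaders;
import org.springframework.http.MediaType;
import org.springframework.http.codec.ServerCodecConfigurer;
import org.springframework.http.server.reactive.ServerHttpRequest;
import org.springframework.http.server.reactive.ServerHttpRequestDecorator;
import org.springframework.stereotype.Component;
import org.springframework.util.StopWatch;
import org.springframework.web.reactive.function.server.ServerRequest;
import org.springframework.web.server.ServerWebExchange;
import reactor.core.publisher.Flux;
import reactor.core.publisher.Mono;

// Log, LogHelper, OrderedConstant, HeaderConstant, GenerateIdUtils and IpUtils are
// project-specific classes; their imports are omitted here.
@Component
@Slf4j
public class RequestFilter implements GlobalFilter, Ordered {

    // Inject the Spring-managed ServerCodecConfigurer, which carries the
    // spring.codec.max-in-memory-size setting from application.yml
    @Autowired
    private ServerCodecConfigurer codecConfigurer;

    @Override
    public int getOrder() {
        return OrderedConstant.HIGHEST_PRECEDENCE;
    }

    @Override
    public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {
        StopWatch stopWatch = new StopWatch();
        stopWatch.start();
        long startTime = System.currentTimeMillis();
        try {
            final Log logDTO = new Log();
            ServerHttpRequest request = exchange.getRequest();

            // Set X-Request-Id: reuse the incoming header if present, otherwise generate one
            AtomicReference<String> requestId = new AtomicReference<>(GenerateIdUtils.requestIdWithUUID());
            Consumer<HttpHeaders> httpHeadersConsumer = httpHeaders -> {
                String headerRequestId = request.getHeaders().getFirst(HeaderConstant.REQUEST_ID);
                if (!Strings.isNullOrEmpty(headerRequestId)) {
                    requestId.set(headerRequestId);
                }
                logDTO.setRequestId(requestId.get());
                httpHeaders.set(HeaderConstant.REQUEST_ID, requestId.get());
                httpHeaders.set(HeaderConstant.START_TIME_KEY, String.valueOf(startTime));
            };

            // codecConfigurer.getReaders() returns readers that honor the buffer
            // byte limit configured in application.yml
            ServerRequest serverRequest = ServerRequest.create(exchange, codecConfigurer.getReaders());

            URI requestUri = request.getURI();
            String uriQuery = requestUri.getQuery();
            String url = requestUri.getPath() + (!Strings.isNullOrEmpty(uriQuery) ? "?" + uriQuery : "");
            HttpHeaders headers = request.getHeaders();
            MediaType mediaType = headers.getContentType();
            String method = request.getMethodValue().toUpperCase();

            // Original request body
            final AtomicReference<String> requestBody = new AtomicReference<>();
            final AtomicBoolean newBody = new AtomicBoolean(false);
            if (mediaType != null && LogHelper.isUploadFile(mediaType)) {
                requestBody.set("file upload");
            } else if ("GET".equals(method)) {
                if (!Strings.isNullOrEmpty(uriQuery)) {
                    requestBody.set(uriQuery);
                }
            } else {
                newBody.set(true);
            }

            logDTO.setLevel(Log.LEVEL.INFO);
            logDTO.setRequestUrl(url);
            logDTO.setRequestBody(requestBody.get());
            logDTO.setRequestMethod(method);
            logDTO.setIp(IpUtils.getClientIp(request));

            // Build an exchange whose request carries the request-id and start-time headers
            ServerHttpRequest serverHttpRequest = request.mutate().headers(httpHeadersConsumer).build();
            ServerWebExchange build = exchange.mutate().request(serverHttpRequest).build();

            return build.getSession().flatMap(webSession -> {
                logDTO.setSessionId(webSession.getId());
                if (newBody.get() && headers.getContentLength() > 0) {
                    Mono<String> bodyToMono = serverRequest.bodyToMono(String.class);
                    return bodyToMono.flatMap(reqBody -> {
                        logDTO.setRequestBody(reqBody);
                        // The body has been consumed, so wrap the mutated request
                        // and replay the cached body to downstream filters
                        ServerHttpRequestDecorator requestDecorator = new ServerHttpRequestDecorator(build.getRequest()) {
                            @Override
                            public Flux<DataBuffer> getBody() {
                                NettyDataBufferFactory nettyDataBufferFactory =
                                        new NettyDataBufferFactory(new UnpooledByteBufAllocator(false));
                                DataBuffer bodyDataBuffer =
                                        nettyDataBufferFactory.wrap(reqBody.getBytes(StandardCharsets.UTF_8));
                                return Flux.just(bodyDataBuffer);
                            }
                        };
                        return chain.filter(build.mutate().request(requestDecorator).build())
                                .then(LogHelper.doRecord(logDTO));
                    });
                }
                // Pass the mutated exchange so the added headers reach downstream services
                return chain.filter(build).then(LogHelper.doRecord(logDTO));
            });
        } catch (Exception e) {
            log.error("Failed to log the request", e);
            return chain.filter(exchange);
        }
    }
}
```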

Copyright belongs to the original author, 小徐很努力. In case of infringement, please contact us and it will be removed.