I. Objective

Become familiar with the Java API commonly used for HBase database operations.

II. Environment

- Operating system: CentOS 8

- Hadoop version: 3.2.3

- HBase version: 2.4.12

- JDK version: 1.8

- Java IDE: Eclipse

III. Procedure

1. Create tables

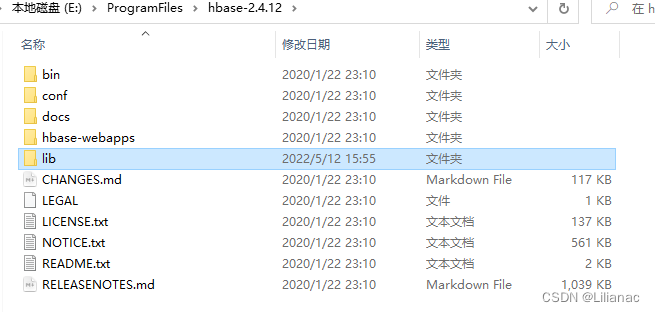

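- Create the project: create a project in Eclipse. First extract the previously downloaded archive "hbase-2.4.12-bin.tar.gz", then copy all JAR files from its lib directory into the project's lib directory and add them to the build path (Build Path > Add to Build Path). After that you can start writing code.

- Create the tables: in HBase, create the tables dept and emp, each with a single column family named data.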

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptor;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptorBuilder;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.TableDescriptorBuilder;
import org.apache.hadoop.hbase.util.Bytes;

public class Task {

    public void createTable() throws Exception {
        // Get the Configuration object
        Configuration config = HBaseConfiguration.create();
        // Connect to HBase and get the Admin object;
        // try-with-resources closes both when the block exits
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            // Define the table names
            TableName tableName1 = TableName.valueOf("dept");
            TableName tableName2 = TableName.valueOf("emp");
            // Define the table descriptors
            // (TableDescriptorBuilder replaces the deprecated HTableDescriptor)
            TableDescriptorBuilder tableDescriptor1 = TableDescriptorBuilder.newBuilder(tableName1);
            TableDescriptorBuilder tableDescriptor2 = TableDescriptorBuilder.newBuilder(tableName2);
            // Define the column family
            // (ColumnFamilyDescriptorBuilder replaces the deprecated HColumnDescriptor)
            ColumnFamilyDescriptor family =
                    ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes("data")).build();
            // Add the column family to both table descriptors
            tableDescriptor1.setColumnFamily(family);
            tableDescriptor2.setColumnFamily(family);
            // Create the tables
            admin.createTable(tableDescriptor1.build());
            admin.createTable(tableDescriptor2.build());
        }
    }
}
```

2. Add data

Create the table tb_step2 in HBase with the column family data, then add the following data:

- Row keys: row1, row2;

- Column names (qualifiers): 1, 2;

- Values: 张三丰, 张无忌.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptor;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptorBuilder;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.client.TableDescriptorBuilder;
import org.apache.hadoop.hbase.util.Bytes;

public class Task {

    public void insertInfo() throws Exception {
        // Get the Configuration object
        Configuration config = HBaseConfiguration.create();
        // Connect to HBase and get the Admin object;
        // try-with-resources closes both when the block exits
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            // Define the table name
            TableName tableName = TableName.valueOf("tb_step2");
            // Define the table descriptor
            TableDescriptorBuilder tableDescriptor = TableDescriptorBuilder.newBuilder(tableName);
            // Define the column family
            ColumnFamilyDescriptor family =
                    ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes("data")).build();
            // Add the column family to the table descriptor
            tableDescriptor.setColumnFamily(family);
            // Create the table
            admin.createTable(tableDescriptor.build());

            // Get the Table object; it is released when the block exits
            try (Table table = connection.getTable(tableName)) {
                Put put1 = new Put(Bytes.toBytes("row1"));
                put1.addColumn(Bytes.toBytes("data"), Bytes.toBytes(String.valueOf(1)),
                        Bytes.toBytes("张三丰"));
                Put put2 = new Put(Bytes.toBytes("row2"));
                put2.addColumn(Bytes.toBytes("data"), Bytes.toBytes(String.valueOf(2)),
                        Bytes.toBytes("张无忌"));
                // Write the data to the table
                table.put(put1);
                table.put(put2);
            }
        }
    }
}
```
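To make the row / column family / qualifier / value layout used by the two `Put` objects easier to picture, here is a minimal in-memory sketch using only the Java standard library. `ToyTable` and its methods are hypothetical names invented for this illustration; it is not the HBase client API, and it ignores timestamps, versions, and regions. It assumes ASCII row keys, for which `TreeMap`'s string ordering matches the byte-wise ordering HBase applies to row keys.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.TreeMap;

// Toy in-memory model of HBase's logical layout; hypothetical, NOT the HBase client API.
class ToyTable {
    // row key -> ("family:qualifier" -> value bytes); both levels are kept sorted
    private final Map<String, Map<String, byte[]>> rows = new TreeMap<>();

    // Stores one cell, encoding the value as UTF-8 bytes
    void put(String row, String family, String qualifier, String value) {
        rows.computeIfAbsent(row, k -> new TreeMap<>())
            .put(family + ":" + qualifier, value.getBytes(StandardCharsets.UTF_8));
    }

    // Returns the cell value decoded as UTF-8, or null if the row or column is absent
    String get(String row, String family, String qualifier) {
        Map<String, byte[]> cells = rows.get(row);
        if (cells == null) {
            return null;
        }
        byte[] value = cells.get(family + ":" + qualifier);
        return value == null ? null : new String(value, StandardCharsets.UTF_8);
    }

    // Row keys in sorted order, similar to what a full scan would visit
    Iterable<String> rowKeys() {
        return rows.keySet();
    }

    public static void main(String[] args) {
        ToyTable table = new ToyTable();
        // Inserted out of order on purpose; the map keeps rows sorted by key
        table.put("row2", "data", "2", "张无忌");
        table.put("row1", "data", "1", "张三丰");
        System.out.println(table.get("row1", "data", "1"));
        for (String rowKey : table.rowKeys()) {
            System.out.println("rowName:" + rowKey);
        }
    }
}
```

The point of the model is that a cell is addressed by row key plus `family:qualifier`, and that everything stored is a byte array, which is why the lab code wraps every argument in `Bytes.toBytes(...)`.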

3. Read data

Print the value stored in table t_step3 at row row1, column data:1 (decoded as UTF-8), then print the row keys of all rows in table table_step3.

```java
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class Task {

    public void queryTableInfo() throws Exception {
        // Get the Configuration object
        Configuration config = HBaseConfiguration.create();
        // Connect to HBase; the connection is closed when the block exits
        try (Connection connection = ConnectionFactory.createConnection(config)) {
            // Get the Table object for t_step3
            TableName tableName3 = TableName.valueOf("t_step3");
            try (Table table3 = connection.getTable(tableName3)) {
                // Build a Get for row1 and fetch it through the Table object
                Get get = new Get(Bytes.toBytes("row1"));
                Result result = table3.get(get);
                // The value comes back as a byte array
                byte[] valueBytes = result.getValue(Bytes.toBytes("data"), Bytes.toBytes("1"));
                // Convert the bytes to a string
                String valueStr = new String(valueBytes, StandardCharsets.UTF_8);
                System.out.println("value:" + valueStr);
            }

            // Get the Table object for table_step3 and scan all of its rows
            TableName tableName3_1 = TableName.valueOf("table_step3");
            try (Table table3_1 = connection.getTable(tableName3_1);
                 ResultScanner scanner = table3_1.getScanner(new Scan())) {
                for (Result scannerResult : scanner) {
                    byte[] row = scannerResult.getRow();
                    System.out.println("rowName:" + new String(row, StandardCharsets.UTF_8));
                }
            }
        }
    }
}
```
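One detail worth noting about the read path: `Result.getValue` returns `null` when the requested row or column does not exist, and passing `null` to the `String` constructor throws a `NullPointerException`. The sketch below shows one way to guard the decoding step, using only the standard library so it runs without a cluster. `CellText`, `decode`, and the `"<missing>"` fallback are hypothetical names chosen for this example, not part of HBase.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper for decoding cell values; not part of the HBase API.
class CellText {
    // Decodes a cell value as UTF-8, or returns the fallback when the cell is absent (null)
    static String decode(byte[] value, String fallback) {
        return value == null ? fallback : new String(value, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Stand-in for the bytes a successful getValue call would return
        byte[] stored = "张三丰".getBytes(StandardCharsets.UTF_8);
        System.out.println("value:" + decode(stored, "<missing>"));
        // Stand-in for a getValue call on a column that does not exist
        System.out.println("value:" + decode(null, "<missing>"));
    }
}
```

In the lab code this would amount to calling something like `CellText.decode(valueBytes, "<missing>")` instead of constructing the `String` directly. Whether a fallback string or an explicit error is more appropriate depends on the exercise's expected output.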

4. Delete a table

Delete the table t_step4.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;

public class Task {

    public void deleteTable() throws Exception {
        // Get the Configuration object
        Configuration config = HBaseConfiguration.create();
        // Connect to HBase and get the Admin object;
        // try-with-resources closes both when the block exits
        try (Connection connection = ConnectionFactory.createConnection(config);
             Admin admin = connection.getAdmin()) {
            TableName tableName = TableName.valueOf("t_step4");
            // A table must be disabled before it can be deleted
            admin.disableTable(tableName);
            admin.deleteTable(tableName);
        }
    }
}
```
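The order of the two calls matters: HBase refuses to delete a table that is still enabled (the client reports a `TableNotDisabledException`). The toy state model below illustrates that rule with plain Java. `ToyAdmin` is a hypothetical class written for this explanation; it only mimics the method names of `Admin`, uses `IllegalStateException` in place of the real HBase exceptions, and simplifies other behavior (for instance, it allows disabling a table twice).

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of table lifecycle states; hypothetical, NOT the HBase Admin API.
class ToyAdmin {
    // table name -> true if enabled, false if disabled
    private final Map<String, Boolean> enabledByTable = new HashMap<>();

    void createTable(String name) {
        if (enabledByTable.containsKey(name)) {
            throw new IllegalStateException("table already exists: " + name);
        }
        enabledByTable.put(name, true); // newly created tables start out enabled
    }

    boolean tableExists(String name) {
        return enabledByTable.containsKey(name);
    }

    void disableTable(String name) {
        requireExists(name);
        enabledByTable.put(name, false);
    }

    void deleteTable(String name) {
        requireExists(name);
        if (enabledByTable.get(name)) {
            // Mirrors the rule that an enabled table cannot be deleted
            throw new IllegalStateException("table not disabled: " + name);
        }
        enabledByTable.remove(name);
    }

    private void requireExists(String name) {
        if (!enabledByTable.containsKey(name)) {
            throw new IllegalStateException("table not found: " + name);
        }
    }

    public static void main(String[] args) {
        ToyAdmin admin = new ToyAdmin();
        admin.createTable("t_step4");
        admin.disableTable("t_step4"); // skipping this step makes deleteTable throw
        admin.deleteTable("t_step4");
        System.out.println("exists after delete: " + admin.tableExists("t_step4"));
    }
}
```

Against a real cluster, a more defensive version of the lab code might first check `admin.tableExists(tableName)` before disabling and deleting, so that rerunning the exercise does not fail on a missing table.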

Reposted from: https://blog.csdn.net/Lilianach/article/details/124732533

Copyright belongs to the original author, Lilianac. In case of infringement, please contact us for removal.
