1. Version considerations

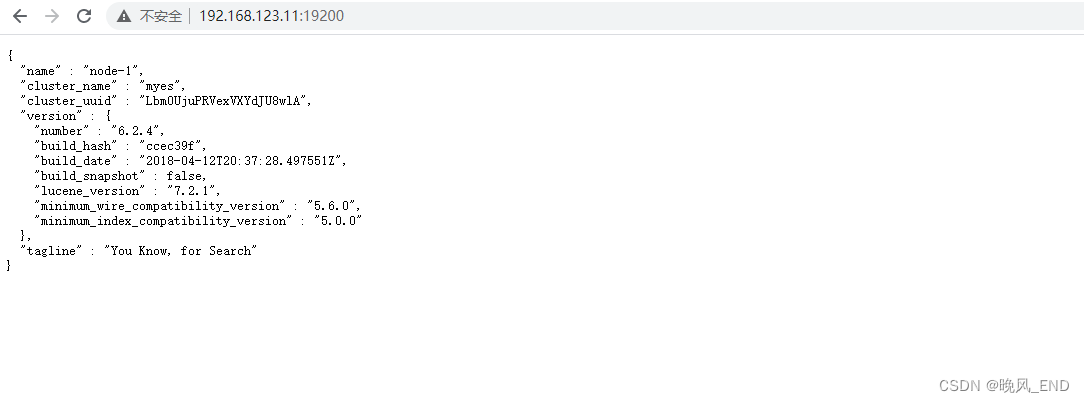

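Elasticsearch releases are commonly split into "low" and "high" versions at 6.3: releases before 6.3 are low versions, and 6.3 and later are high versions. The split mainly reflects security, that is, how the X-Pack plugin is deployed. Low versions need the security plugin installed manually, while high versions ship with it. High versions also tend to have fewer known vulnerabilities. This example uses 6.2.4, the last of the low versions.

The Java environment is OpenJDK 1.8.0_392-b08. The Elasticsearch deployment below was tested with this version: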

[es@node1 bin]$ java -version

openjdk version "1.8.0_392"

OpenJDK Runtime Environment (Temurin)(build 1.8.0_392-b08)

OpenJDK 64-Bit Server VM (Temurin)(build 25.392-b08, mixed mode)

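2. Environment

This example uses four VMware virtual machines, all running CentOS 7, with the IP addresses 192.168.123.11, 192.168.123.12, 192.168.123.13, and 192.168.123.14. Each server has 8 GB of memory.

Elasticsearch is a Java application and is memory-hungry, so at least 8 GB of memory is recommended. Four CPU cores are enough; the CPU requirement is modest.

Another key part of the environment is a time server. It is mandatory: whether the cluster is experimental or in production, do not skip time synchronization. The machines in this example can reach the internet, so they use Alibaba Cloud's NTP server.

Time server entry in the NTP service configuration: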

server ntp.aliyun.com iburst

Verification after the time server has been configured correctly:

[root@node1 es]# ntpstat

synchronised to NTP server (203.107.6.88) at stratum 3

time correct to within 74 ms

polling server every 1024 s
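
The check above can also be scripted so that it is repeatable on all four servers. The following is a minimal sketch that assumes the `ntpstat` output format shown above; the function names `ntp_offset_ms` and `ntp_within` and any threshold passed to them are illustrative choices, not part of the original setup.

```shell
# Extract the "time correct to within N ms" value from ntpstat output on stdin.
ntp_offset_ms() {
  sed -n 's/.*time correct to within \([0-9][0-9]*\) ms.*/\1/p' | head -n 1
}

# Succeed only if the output on stdin reports synchronisation and an
# offset no larger than the given limit (in milliseconds).
ntp_within() {
  limit_ms="$1"
  report=$(cat)
  printf '%s\n' "$report" | grep -q '^synchronised to ' || return 1
  offset=$(printf '%s\n' "$report" | ntp_offset_ms)
  [ -n "$offset" ] && [ "$offset" -le "$limit_ms" ]
}
```

A possible use is `ntpstat | ntp_within 100 && echo "clock OK"`, where 100 ms is an arbitrary example limit.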

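There are also some routine settings, such as disabling SELinux, which are not repeated here.

Installing the JDK:

Run vim /etc/profile and append the following to the end of the file: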

export JAVA_HOME=/usr/local/jdk

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

tar xvf OpenJDK8U-jdk_x64_linux_hotspot_8u392b08.tar.gz

mv jdk8u392-b08 /usr/local/jdk

## Load the variables

source /etc/profile

### Check that the JDK was installed successfully

[root@node1 ~]# java -version

openjdk version "1.8.0_392"

OpenJDK Runtime Environment (Temurin)(build 1.8.0_392-b08)

OpenJDK 64-Bit Server VM (Temurin)(build 25.392-b08, mixed mode)
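
When the same check has to run on several servers, the version string can be extracted and compared automatically. This is a small sketch that assumes the `java -version` output format shown above; `java_version_from` and `is_java8` are hypothetical helper names introduced here for illustration.

```shell
# Read `java -version` output on stdin and print the quoted version string.
java_version_from() {
  sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed if the version string belongs to the Java 8 line (1.8.x).
is_java8() {
  case "$1" in
    1.8.*) return 0 ;;
    *)     return 1 ;;
  esac
}
```

Since `java -version` writes to standard error, a call would look like `is_java8 "$(java -version 2>&1 | java_version_from)"`.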

3. Downloading the software

Elasticsearch download:

Elasticsearch 6.2.4 | Elastic

X-Pack plugin download:

https://artifacts.elastic.co/downloads/packs/x-pack/x-pack-6.2.4.zip

OpenJDK download:

Index of /Adoptium/8/jdk/x64/linux/ | Tsinghua Open Source Mirror

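4. Deploying the Elasticsearch cluster

Create the /data directory on every server. Then, on node1, unpack the Elasticsearch archive downloaded above, rename it, place it under /data, and copy it to the other three servers: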

mkdir /data

unzip elasticsearch-6.2.4.zip

mv elasticsearch-6.2.4 /data/es

scp -r /data/es 192.168.123.12:/data/

scp -r /data/es 192.168.123.13:/data/

scp -r /data/es 192.168.123.14:/data/

Create the user es on every server. The user does not need a password; later it is only reached with the su command.

useradd es

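Installing the X-Pack plugin offline:

Installation asks for confirmation twice; enter y both times. X-Pack has to be installed on every node in the cluster, so repeat this step on each server.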

[root@node1 bin]# /data/es/bin/elasticsearch-plugin install file:///data/es/bin/x-pack-6.2.4.zip

-> Downloading file:///data/es/bin/x-pack-6.2.4.zip

[=================================================] 100%

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: plugin requires additional permissions @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

* java.io.FilePermission \\.\pipe\* read,write

* java.lang.RuntimePermission accessClassInPackage.com.sun.activation.registries

* java.lang.RuntimePermission getClassLoader

* java.lang.RuntimePermission setContextClassLoader

* java.lang.RuntimePermission setFactory

* java.net.SocketPermission * connect,accept,resolve

* java.security.SecurityPermission createPolicy.JavaPolicy

* java.security.SecurityPermission getPolicy

* java.security.SecurityPermission putProviderProperty.BC

* java.security.SecurityPermission setPolicy

* java.util.PropertyPermission * read,write

See http://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html

for descriptions of what these permissions allow and the associated risks.

Continue with installation? [y/N]y

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: plugin forks a native controller @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

This plugin launches a native controller that is not subject to the Java

security manager nor to system call filters.

Continue with installation? [y/N]y

Elasticsearch keystore is required by plugin [x-pack-security], creating...

-> Installed x-pack with: x-pack-core,x-pack-deprecation,x-pack-graph,x-pack-logstash,x-pack-ml,x-pack-monitoring,x-pack-security,x-pack-upgrade,x-pack-watcher

[root@node1 bin]# echo $?

0

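After installation, x-pack entries appear under the bin, config, and other directories.

Edit the main Elasticsearch configuration file:

Set the following two values according to the server you are on. For example, on 192.168.123.12 they are node-2 and 192.168.123.12.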

node.name: node-1

network.host: 192.168.123.11

cat >/data/es/config/elasticsearch.yml <<EOF

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

cluster.name: myes

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: node-1

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

path.data: /data/es/data

#

# Path to log files:

#

path.logs: /var/log/es/

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: 192.168.123.11

#

# Set a custom port for HTTP:

#

http.port: 19200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

discovery.zen.ping.unicast.hosts: ["node-1", "node-2"]

#

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#

#discovery.zen.minimum_master_nodes:

#

# For more information, consult the zen discovery module documentation.

#

# ---------------------------------- Gateway -----------------------------------

#

# Block initial recovery after a full cluster restart until N nodes are started:

#

#gateway.recover_after_nodes: 3

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#

#action.destructive_requires_name: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: false

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-methods : OPTIONS, HEAD, GET, POST, PUT, DELETE

http.cors.allow-headers : X-Requested-With,X-Auth-Token,Content-Type,Content-Length

EOF
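
Because only `node.name` and `network.host` differ between servers, the two values can be patched after the file is written. The sketch below assumes the addressing used in this example, where node-N has the address 192.168.123.(10+N); the helper names `node_name_for_ip`, `set_node_identity`, and `quorum` are hypothetical. The last helper only evaluates the majority formula quoted in the template comment for `discovery.zen.minimum_master_nodes`, which the file above leaves commented out.

```shell
# Assumption: node-N lives at 192.168.123.(10+N), as in this example.
node_name_for_ip() {
  last_octet="${1##*.}"
  echo "node-$(( last_octet - 10 ))"
}

# Rewrite node.name and network.host in an elasticsearch.yml for the given IP.
# Uses GNU sed's in-place option, which is available on CentOS 7.
set_node_identity() {
  ip="$1"
  conf="$2"
  name=$(node_name_for_ip "$ip")
  sed -i \
    -e "s/^node\.name:.*/node.name: ${name}/" \
    -e "s/^network\.host:.*/network.host: ${ip}/" \
    "$conf"
}

# Majority of master-eligible nodes: total / 2 + 1 (integer division).
quorum() {
  echo $(( $1 / 2 + 1 ))
}
```

On 192.168.123.12, for example, the call would be `set_node_identity 192.168.123.12 /data/es/config/elasticsearch.yml`. With four master-eligible nodes, `quorum 4` gives the value that the template's formula suggests.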

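As the configuration file specifies, create the log directory, then recursively give ownership of /data/es and the log directory to the es user and group: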

mkdir /var/log/es

chown -Rf es. /data/es/

chown -Rf es. /var/log/es/

Create the startup script:

cat >/etc/init.d/es<<'EOF'

#!/bin/bash

#chkconfig:2345 60 12

#description: elasticsearch

es_path=/data/es

es_pid=`ps aux|grep elasticsearch | grep -v 'grep elasticsearch' | awk '{print $2}'`

case "$1" in

start)

su - es -c "$es_path/bin/elasticsearch -d"

echo "elasticsearch startup"

;;

stop)

kill -9 $es_pid

echo "elasticsearch stopped"

;;

restart)

kill -9 $es_pid

su - es -c "$es_path/bin/elasticsearch -d"

echo "elasticsearch startup"

;;

*)

echo "error choice ! please input start or stop or restart"

;;

esac

exit $?

EOF
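
The script above stops Elasticsearch with `kill -9`, which gives the process no chance to shut down cleanly. One possible alternative, sketched here under the assumption that a plain SIGTERM is enough for a normal shutdown, is to send SIGTERM first and fall back to SIGKILL only after a timeout. `stop_gracefully` is a hypothetical helper and the default timeout of 30 seconds is an arbitrary choice.

```shell
# Send SIGTERM to a process, wait up to <timeout> seconds for it to exit,
# and send SIGKILL only if it is still running after that.
# Returns 0 on a clean stop, 1 if SIGKILL was needed.
stop_gracefully() {
  pid="$1"
  timeout="${2:-30}"
  kill -TERM "$pid" 2>/dev/null || return 0   # nothing to stop
  i=0
  while kill -0 "$pid" 2>/dev/null; do
    if [ "$i" -ge "$timeout" ]; then
      kill -KILL "$pid" 2>/dev/null
      return 1
    fi
    sleep 1
    i=$(( i + 1 ))
  done
  return 0
}
```

Inside the init script, the `stop)` branch could then call `stop_gracefully "$es_pid"` in place of `kill -9 $es_pid`.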

Set the ownership of the startup script and make it executable:

chown -Rf es. /etc/init.d/es

chmod +x /etc/init.d/es

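After starting Elasticsearch, check the log. It shows the servers at 192.168.123.13 and 192.168.123.14 joining the cluster, although these logs are not easy to read: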

[root@node1 bin]# tail -f /var/log/es/myes.log

[2023-12-10T00:52:34,441][INFO ][o.e.c.m.MetaDataUpdateSettingsService] [node-1] [.monitoring-es-6-2023.12.09/4GLFZLlsRH6nj4ZKIUsxvw] auto expanded replicas to [1]

[2023-12-10T00:52:34,441][INFO ][o.e.c.m.MetaDataUpdateSettingsService] [node-1] [.watcher-history-7-2023.12.09/_WVuCnwrSlGtYaLbSAfbLg] auto expanded replicas to [1]

[2023-12-10T00:52:35,028][INFO ][o.e.x.w.WatcherService ] [node-1] paused watch execution, reason [no local watcher shards found], cancelled [0] queued tasks

[2023-12-10T00:52:35,305][INFO ][o.e.x.w.WatcherService ] [node-1] paused watch execution, reason [new local watcher shard allocation ids], cancelled [0] queued tasks

[2023-12-10T00:52:35,982][INFO ][o.e.c.r.a.AllocationService] [node-1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[.monitoring-es-6-2023.12.09][0]] ...]).

[2023-12-10T00:52:40,831][INFO ][o.e.c.s.MasterService ] [node-1] zen-disco-node-join[{node-3}{kZxWJkP1Tjqo1DkDLcKg0w}{uZU4sePKSkuDZaxFl8_J_A}{192.168.123.13}{192.168.123.13:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true}], reason: added {{node-3}{kZxWJkP1Tjqo1DkDLcKg0w}{uZU4sePKSkuDZaxFl8_J_A}{192.168.123.13}{192.168.123.13:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true},}

[2023-12-10T00:52:41,494][INFO ][o.e.c.s.ClusterApplierService] [node-1] added {{node-3}{kZxWJkP1Tjqo1DkDLcKg0w}{uZU4sePKSkuDZaxFl8_J_A}{192.168.123.13}{192.168.123.13:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true},}, reason: apply cluster state (from master [master {node-1}{Ihs-2_jwTte3q7zd82z9cg}{XX2DgvdSR_yGZ886Ao2n4w}{192.168.123.11}{192.168.123.11:9300}{ml.machine_memory=8975544320, ml.max_open_jobs=20, ml.enabled=true} committed version [34] source [zen-disco-node-join[{node-3}{kZxWJkP1Tjqo1DkDLcKg0w}{uZU4sePKSkuDZaxFl8_J_A}{192.168.123.13}{192.168.123.13:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true}]]])

[2023-12-10T00:52:46,046][INFO ][o.e.c.s.MasterService ] [node-1] zen-disco-node-join[{node-4}{xBsEmrIhSQWgLauziJ-YTg}{YUChKHGFTPWJQy8m0HANQA}{192.168.123.14}{192.168.123.14:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true}], reason: added {{node-4}{xBsEmrIhSQWgLauziJ-YTg}{YUChKHGFTPWJQy8m0HANQA}{192.168.123.14}{192.168.123.14:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true},}

[2023-12-10T00:52:46,691][INFO ][o.e.c.s.ClusterApplierService] [node-1] added {{node-4}{xBsEmrIhSQWgLauziJ-YTg}{YUChKHGFTPWJQy8m0HANQA}{192.168.123.14}{192.168.123.14:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true},}, reason: apply cluster state (from master [master {node-1}{Ihs-2_jwTte3q7zd82z9cg}{XX2DgvdSR_yGZ886Ao2n4w}{192.168.123.11}{192.168.123.11:9300}{ml.machine_memory=8975544320, ml.max_open_jobs=20, ml.enabled=true} committed version [36] source [zen-disco-node-join[{node-4}{xBsEmrIhSQWgLauziJ-YTg}{YUChKHGFTPWJQy8m0HANQA}{192.168.123.14}{192.168.123.14:9300}{ml.machine_memory=8370089984, ml.max_open_jobs=20, ml.enabled=true}]]])

[2023-12-10T00:52:46,747][INFO ][o.e.x.w.WatcherService ] [node-1] paused watch execution, reason [no local watcher shards found], cancelled [0] queued tasks

OK, the passwords can now be set:

[root@node1 bin]# /data/es/bin/x-pack/setup-passwords interactive

Initiating the setup of passwords for reserved users elastic,kibana,logstash_system.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]:

Reenter password for [elastic]:

Enter password for [kibana]:

Reenter password for [kibana]:

Enter password for [logstash_system]:

Reenter password for [logstash_system]:

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [elastic]

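Now for a more readable check:

The cluster is in the green (healthy) state. Because passwords are now set, the password chosen in the previous step has to be entered, so access is protected.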

[root@node1 bin]# curl -XGET http://192.168.123.11:19200/_cat/health -uelastic

Enter host password for user 'elastic':

1702141169 00:59:29 myes green 4 4 12 5 0 0 0 0 - 100.0%
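
The health line is easy to check from a script as well. The sketch below assumes the default `_cat/health` column order seen in the output above, where the fourth column is the status and the fifth is the total node count; the function names are hypothetical.

```shell
# Print the cluster status (green, yellow, or red) from a _cat/health line on stdin.
cluster_status() {
  awk '{ print $4 }'
}

# Print the total number of nodes from a _cat/health line on stdin.
cluster_nodes() {
  awk '{ print $5 }'
}

# Succeed only if the line on stdin reports a green cluster.
is_green() {
  [ "$(cluster_status)" = "green" ]
}
```

The helpers read the `curl` output from standard input, for example `curl -s -u elastic http://192.168.123.11:19200/_cat/health | is_green`.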

The cluster has four nodes, and node-1 is the master (marked with *). The cluster is up.

[root@node1 bin]# curl -XGET http://192.168.123.11:19200/_cat/nodes -uelastic

Enter host password for user 'elastic':

192.168.123.11 32 58 2 0.24 0.26 0.26 mdi * node-1

192.168.123.12 39 73 1 0.32 0.24 0.25 mdi - node-2

192.168.123.13 31 59 1 0.37 0.34 0.32 mdi - node-3

192.168.123.14 34 84 2 1.21 0.65 0.36 mdi - node-4
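
The same listing can be reduced to the two facts of interest, the elected master and the node count. This is a minimal sketch that assumes the default `_cat/nodes` columns shown above, with the master marker in the ninth column and the node name in the tenth; `master_node` and `node_count` are hypothetical names.

```shell
# Print the name of the elected master from _cat/nodes output on stdin.
# The elected master is the row whose 9th column is "*".
master_node() {
  awk '$9 == "*" { print $10 }'
}

# Count the non-empty rows, i.e. the nodes currently in the cluster.
node_count() {
  awk 'NF > 0 { n++ } END { print n + 0 }'
}
```

Both helpers read from standard input, so they can be placed after the `curl` command above in a pipeline.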

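Open IP:port in a browser and enter the username and password to see the node status:

5. Changing and resetting passwords

Changing a password through the API:

In low versions of Elasticsearch, passwords can only be changed through the API; high versions provide a dedicated tool, elasticsearch-reset-password. The following shows how to change the password of the elastic user through the API.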

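The built-in users have the following permissions:

elastic: has the superuser role and is the built-in superuser.

kibana: has the kibana_system role. Kibana uses this user to connect to and communicate with Elasticsearch. The Kibana server submits requests as this user to access the cluster monitoring APIs and the .kibana index. It cannot access other indices.

logstash_system: has the logstash_system role. Logstash uses this user when storing monitoring information in Elasticsearch.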

[root@node1 bin]# curl -H "Content-Type:application/json" -XPOST -u elastic 'http://192.168.123.11:19200/_xpack/security/user/elastic/_password' -d '{ "password" : "123456" }'

Enter host password for user 'elastic':

{}

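Changing the password requires authenticating with the current password. On success the API returns an empty object, as shown above, and the password is now 123456.

Resetting passwords

Delete the security index, and passwords can be generated again with the command /data/es/bin/x-pack/setup-passwords interactive. The index can be deleted through es-head, for example.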
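
A password that contains a double quote or a backslash would break the hand-written JSON body in the command above. The following sketch builds the body with those two characters escaped; `password_payload` is a hypothetical helper, and it deliberately handles only quotes and backslashes, not control characters.

```shell
# Build the JSON body for the password API, escaping backslashes and
# double quotes in the new password.
password_payload() {
  escaped=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{ "password" : "%s" }' "$escaped"
}
```

It can be combined with the same endpoint used above, for example by passing `-d "$(password_payload "$new_password")"` to `curl`, where `new_password` is a shell variable holding the new password.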

6. Deploying and using es-head

Download address:

https://github.com/liufengji/es-head

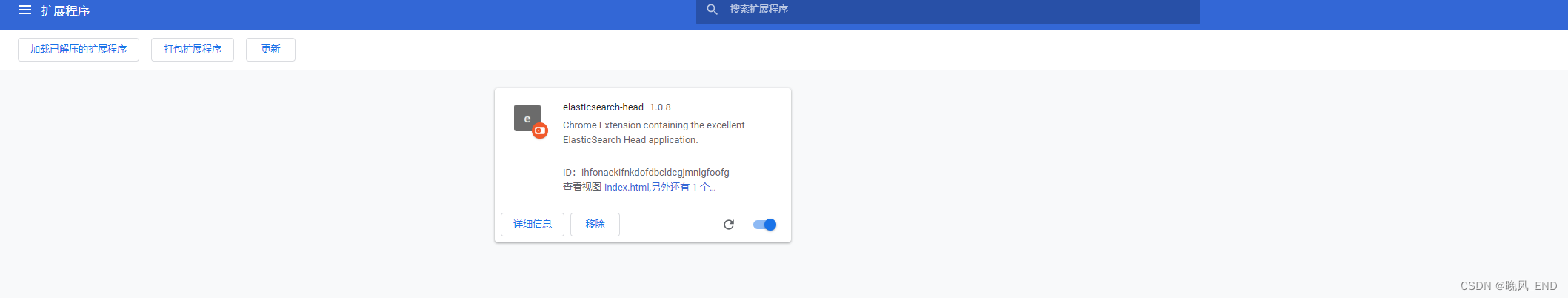

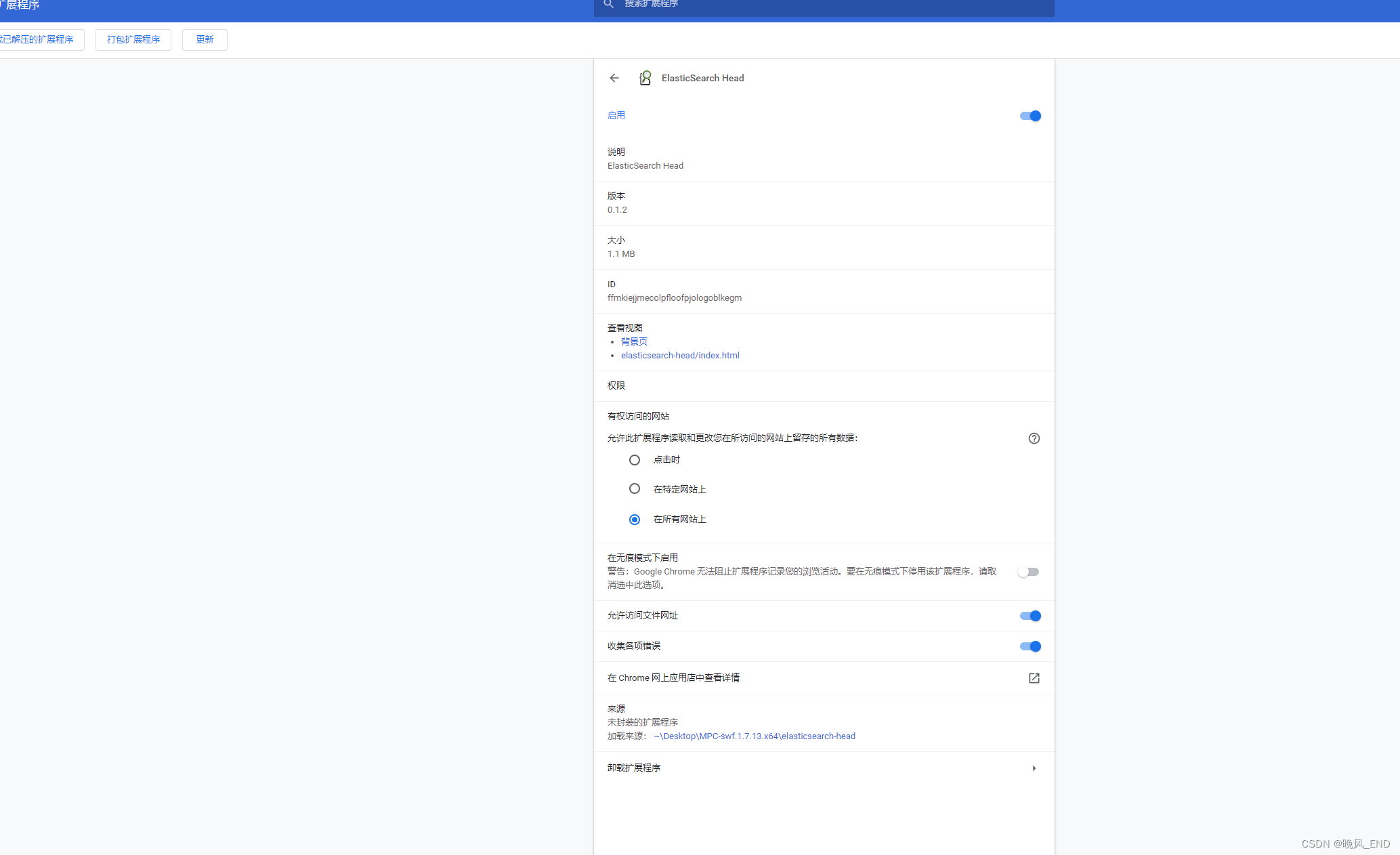

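This example uses versions 1.0.8 and 0.1.2.

Installation steps:

Open Chrome's extension management page, or enter chrome://extensions/ directly in the address bar.

Unpack the downloaded elasticsearch-head-master.zip. If it contains a file with the .crx suffix, change the suffix to .rar and unpack that file as well.

Click "Load unpacked" in the upper-left corner and select the unpacked directory.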

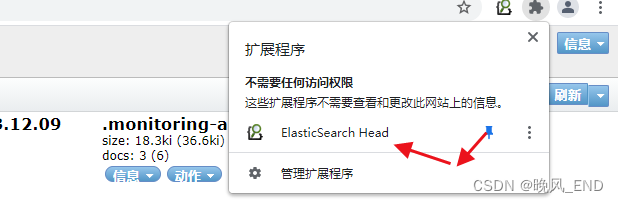

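Getting started:

Click Connect. A window prompts for the username and password; after entering them you reach the head interface.

That completes the installation of a low-version Elasticsearch cluster, its security hardening (password setup), and the es-head plugin.

Copyright belongs to the original author, 晚风_END. If there is any infringement, please contact us for removal.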