参考

https://blog.csdn.net/m53931422/article/details/103633452

https://www.cnblogs.com/jasonlam/p/7928179.html

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+UDF

https://medium.com/@jackgoettle23/building-a-hive-user-defined-function-f6abe92f6e56

https://blog.csdn.net/qq_73339471/article/details/140763754

https://blog.csdn.net/cuichunchi/article/details/109772461

https://blog.csdn.net/weixin_35852328/article/details/86509506

https://cn.vuejs.org/

https://element.eleme.cn/#/zh-CN

https://echarts.apache.org/zh/index.html

https://spring.io/

https://baomidou.com/

本文的视频版本:https://www.bilibili.com/video/BV1itYLe1E8y,讲解更详细

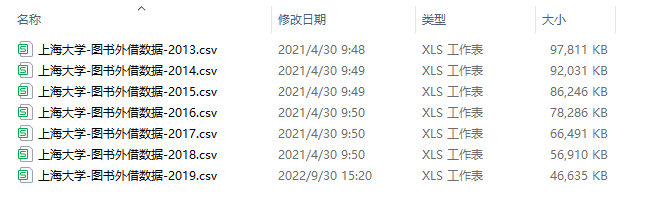

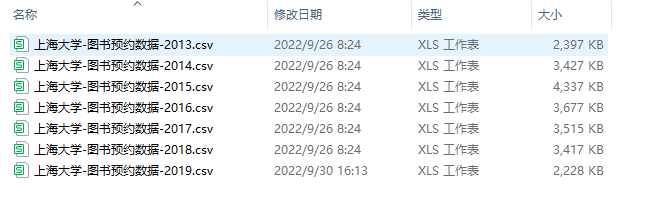

数据

图书外借数据图书预约数据读者入馆数据字段说明备注字段说明备注字段说明****备注UNIVERSITY_ID学校代码10280UNIVERSITY_ID学校代码UNIVERSITY_ID学校代码10280PATRON_ID读者IDPATRON_ID读者IDPATRON_ID读者IDSTUDENT_GRADE学生年级STUDENT_GRADE学生年级STUDENT_GRADE学生年级PATRON_DEPT读者所在院系PATRON_DEPT读者所在院系PATRON_DEPT读者所在院系PATRON_TYPE读者类型PATRON_TYPE读者类型PATRON_TYPE读者类型LOAN_DATE外借日期20130422OPEN_DATE预约日期20131231VISIT_TIME入馆时间2013-03-2520:54:42LOAN_HOUR外借时间1506OPEN_HOUR预约时间1015VISIT_SUBLIBRARY入馆地点01、02、03、04 - 宝山校区05、06 - 延长校区07、08、09 - 嘉定校区DUE_DATE到期日期20130522REQUEST_DATE预约兴趣期开始日期20131231VISIT_TYPE出馆/入馆无该字段DUE_HOUR到期时间2400END_REQUEST_DATE预约兴趣期结束日期20140228RETURNED_DATE归还日期20130427HOLD_DATE预约满足日期20140102RETURNED_HOUR归还时间1512END_HOLD_DATE预约保留日期20140109RETURNED_LOCATION归还地点无该字段RECALL_STATUS预约催还状态无该字段RENEWAL_NO续借次数RECALL_DATE催还日期无该字段LASTRENEW_DATE最后续借日期PROCESSING_DAYS满足时间长度无该字段RECALL_DATE催还日期EVENT_TYPE预约类型无该字段RECALL_DUE_DATE催还后应还日期FULFILLED预约需求是否满足Y - 等待中N - 已满足HOLD_DAYS外借天数无该字段PICKUP_LOCATION取书点详见附录1OVERDUE_DAYS逾期天数无该字段SUBLIBRARY图书所在分馆/馆藏地详见附录1SUBLIBRARY图书所在分馆/馆藏地详见附录1ITEM_ID单册唯一记录号ITEM_ID单册唯一记录号ITEM_STATUS单册状态详见附录2ITEM_STATUS单册状态详见附录2ITEM_CALLNO单册索书号ITEM_CALLNO单册索书号PUBLISH_YEAR图书出版年PUBLISH_YEAR图书出版年AUTHOR图书作者AUTHOR图书作者TITLE图书题名TITLE图书题名PRESS图书出版社PRESS图书出版社ISBN图书ISBN号ISBNISBN号ID自动生成序号,主键ID自动生成序号,主键

共 1.25G

共 512MB

共 22.4 MB

乱码和字段乱序缺失

2019 年的外借数据的字符集为非 UTF-8,

在 Linux 上:

[jxd@102 lend_out]$ file-i lend_out_2019.csv

lend_out_2019.csv: text/plain;charset=unknown-8bit

vim 文件后,:set fileencoding=utf-8 设置文件的编码

[jxd@102 lend_out]$ file-i lend_out_2019.csv

lend_out_2019.csv: text/plain;charset=utf-8

发现没有解决,不是 Linux 上的乱码,经过排查,其它所有文件在 windows 环境下都为 utf-8 格式,这个文件为 GB2312 编码,使用 notepad++ 打开,设置编码为 utf-8,拷贝全文,新建一个 csv 文件,粘贴,新的 csv 文件的格式就为 utf-8 了。

2019 年外借数据存在缺失 id 字段、字段乱序的情况,导入到 MySQL 中,添加 id 字段,并置为 null (id 字段没有用),以新的字段顺序查询并将依据结果创建新表,再将数据导出为 csv 即可。

2019 年预约数据存在字段乱序情况,用上面的方法处理即可。

csv 的表头问题

每一个 csv 文件的表头都是字段名,导入到 Hive 中会被当作数据,需要删除,在 Linux 中,使用如下命令删除文件的第一行:

sed-i'1d'<fileName>

建表以及加载数据

createdatabase shanghai_edu location '/warehouse/shanghai_edu';// 创建时间维度表CREATE EXTERNAL TABLE`dim_date`(`date_key` STRING COMMENT'id',`date_ymd` STRING COMMENT'日期 yy-MM-dd',`date_ch` STRING COMMENT'中文日期',`dim_year` STRING COMMENT'年',`quarter_id`TINYINTCOMMENT'季度数字',`quarter_ch` STRING COMMENT'中文季度',`year_month` STRING comment'年月',`dim_month` STRING comment'月份',`dim_day` STRING COMMENT'一年中的第几天',`dim_month_day` STRING COMMENT'一个月中的第几天',`dim_week` STRING COMMENT'一年中的第几周 星期一是星期的第一天',`year_week` STRING COMMENT'年周',`dim_week1` STRING COMMENT'一年中的第几周 星期天是星期的第一天',`dim_year1` STRING COMMENT'对应 dim_week1 的年份',`dim_weekday` STRING COMMENT'一周的中的第几天',`weekday_ch` STRING COMMENT'星期几',`holiday_name` STRING COMMENT'节假日名称',`is_day_off`TINYINTCOMMENT'是否放假 1表示放假,0表示不放假 2表示调班')COMMENT'时间维度表'ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'

location '/warehouse/shanghai_edu/dim/dim_date';loaddatalocal inpath '/opt/project/shanghai_edu/data/dim/dim_date.csv'intotable shanghai_edu.dim_date;// 创建分场馆维度表CREATE EXTERNAL TABLEifnotexists shanghai_edu.dim_sub_library

(`sub_library_or_pickup_location` STRING COMMENT'sub_library 或 pickup_location',`sub_library_location_name` STRING COMMENT'分场馆所在地址')COMMENT'分场馆维度表'

STORED AS PARQUET

LOCATION '/warehouse/shanghai_edu/dim/dim_sub_library';// 创建一张存储格式为 csv 的临时表CREATETABLE shanghai_edu.csv_table

(

sub_library_or_pickup_location STRING,

sub_library_location_name STRING

)ROW FORMAT DELIMITED

FIELDSTERMINATEDBY','LINESTERMINATEDBY'\n'

STORED AS TEXTFILE;// 将数据导入到临时表loaddatalocal inpath '/opt/project/shanghai_edu/data/dim/dim_sublibrary.csv'intotable shanghai_edu.csv_table;INSERT overwrite TABLE shanghai_edu.dim_sub_library

SELECT*FROM shanghai_edu.csv_table;droptable shanghai_edu.csv_table;// 创建入馆表CREATE external table`ods_enter`(`university_id`varchar(100)comment'学校代码 10280',`patron_id`varchar(100)comment'读者ID',`student_grade`varchar(100)comment'学生年级',`patron_dept`varchar(100)comment'读者所在院系',`patron_type`varchar(100)comment'读者类型',`visit_time`varchar(100)comment'入馆时间',`visit_sub_library`varchar(100)comment'入馆地点')COMMENT'入馆表'

partitioned by(`year` string)ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'WITH SERDEPROPERTIES ("separatorChar"=",","quoteChar"="\"","escapeChar"="\\")

location '/warehouse/shanghai_edu/ods/ods_enter';loaddatalocal inpath '/opt/project/shanghai_edu/data/enter/enter_2013.csv'intotable shanghai_edu.ods_enter partition(year='2013');loaddatalocal inpath '/opt/project/shanghai_edu/data/enter/enter_2014.csv'intotable shanghai_edu.ods_enter partition(year='2014');loaddatalocal inpath '/opt/project/shanghai_edu/data/enter/enter_2015.csv'intotable shanghai_edu.ods_enter partition(year='2015');loaddatalocal inpath '/opt/project/shanghai_edu/data/enter/enter_2016.csv'intotable shanghai_edu.ods_enter partition(year='2016');loaddatalocal inpath '/opt/project/shanghai_edu/data/enter/enter_2017.csv'intotable shanghai_edu.ods_enter partition(year='2017');loaddatalocal inpath '/opt/project/shanghai_edu/data/enter/enter_2018.csv'intotable shanghai_edu.ods_enter partition(year='2018');loaddatalocal inpath '/opt/project/shanghai_edu/data/enter/enter_2019.csv'intotable shanghai_edu.ods_enter partition(year='2019');// 创建外借表CREATE external TABLE`ods_lend_out`(`university_id`varchar(100)comment'学校代码 10280',`item_id`varchar(100)comment'单册唯一记录号',`loan_date`varchar(100)comment'外借日期',`sub_library`varchar(100)comment'图书所在分馆/馆藏地',`due_date`varchar(100)comment'到期日期',`loan_hour`varchar(100)comment'外借时间',`due_hour`varchar(100)comment'到期时间',`returned_date`varchar(100)comment'归还日期',`item_status`varchar(100)comment'单册状态',`returned_hour`varchar(100)comment'归还时间',`last_renew_date`varchar(100)comment'最后续借日期',`renewal_no`varchar(100)comment'续借次数',`recall_date`varchar(100)comment'催还日期',`item_call_no`varchar(100)comment'单册索书号',`recall_due_date`varchar(100)comment'催还后应还日期',`author`varchar(100)comment'图书作者',`publish_year`varchar(100)comment'图书出版年',`press`varchar(100)comment'图书出版社',`title`varchar(100)comment'图书题名',`ISBN`varchar(100)comment'isbn',`patron_id`varchar(100)comment'读者ID',`patron_type`varchar(100)comment'读者类型',`student_grade`varchar(100)comment'学生年级',`id`varchar(100)comment'id',`patron_dept`varchar(100)comment'读者所在院系')COMMENT'外借表'

partitioned by(`year` string)ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'WITH SERDEPROPERTIES ("separatorChar"=",","quoteChar"="\"","escapeChar"="\\")

location '/warehouse/shanghai_edu/ods/ods_lend_out';loaddatalocal inpath '/opt/project/shanghai_edu/data/lend_out/lend_out_2013.csv'intotable shanghai_edu.ods_lend_out partition(year='2013');loaddatalocal inpath '/opt/project/shanghai_edu/data/lend_out/lend_out_2014.csv'intotable shanghai_edu.ods_lend_out partition(year='2014');loaddatalocal inpath '/opt/project/shanghai_edu/data/lend_out/lend_out_2015.csv'intotable shanghai_edu.ods_lend_out partition(year='2015');loaddatalocal inpath '/opt/project/shanghai_edu/data/lend_out/lend_out_2016.csv'intotable shanghai_edu.ods_lend_out partition(year='2016');loaddatalocal inpath '/opt/project/shanghai_edu/data/lend_out/lend_out_2017.csv'intotable shanghai_edu.ods_lend_out partition(year='2017');loaddatalocal inpath '/opt/project/shanghai_edu/data/lend_out/lend_out_2018.csv'intotable shanghai_edu.ods_lend_out partition(year='2018');loaddatalocal inpath '/opt/project/shanghai_edu/data/lend_out/lend_out_2019.csv'intotable shanghai_edu.ods_lend_out partition(year='2019');// 创建预约表CREATE external table ods_subscribe

(`university_id`varchar(100)comment'学校代码 10280',`open_date`varchar(100)comment'预约日期',`open_hour`varchar(100)comment'预约时间',`end_request_date`varchar(100)comment'预约兴趣期结束日期',`request_date`varchar(100)comment'预约兴趣期开始日期',`end_hold_date`varchar(100)comment'预约保留日期',`hold_date`varchar(100)comment'预约满足日期',`pickup_location`varchar(100)comment'取书点',`item_status`varchar(100)comment'单册状态',`sub_library`varchar(100)comment'图书所在分馆/馆藏地',`fulfilled`varchar(100)comment'预约需求是否满足',`item_call_no`varchar(100)comment'单册索书号',`item_id`varchar(100)comment'单册唯一记录号',`author`varchar(100)comment'图书作者',`publish_year`varchar(100)comment'图书出版年',`press`varchar(100)comment'图书出版社',`title`varchar(100)comment'图书题名',`ISBN`varchar(100)comment'图书 ISBN 号',`patron_id`varchar(100)comment'读者 ID',`patron_type`varchar(100)comment'读者类型',`student_grade`varchar(100)comment'学生年级',`id`varchar(100)comment'id',`patron_dept`varchar(100)comment'读者所在院系')COMMENT'预约表'

partitioned by(`year` string)ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'WITH SERDEPROPERTIES ("separatorChar"=",","quoteChar"="\"","escapeChar"="\\")

location '/warehouse/shanghai_edu/ods/ods_subscribe';loaddatalocal inpath '/opt/project/shanghai_edu/data/subscribe/subscribe_2013.csv'intotable shanghai_edu.ods_subscribe partition(year='2013');loaddatalocal inpath '/opt/project/shanghai_edu/data/subscribe/subscribe_2014.csv'intotable shanghai_edu.ods_subscribe partition(year='2014');loaddatalocal inpath '/opt/project/shanghai_edu/data/subscribe/subscribe_2015.csv'intotable shanghai_edu.ods_subscribe partition(year='2015');loaddatalocal inpath '/opt/project/shanghai_edu/data/subscribe/subscribe_2016.csv'intotable shanghai_edu.ods_subscribe partition(year='2016');loaddatalocal inpath '/opt/project/shanghai_edu/data/subscribe/subscribe_2017.csv'intotable shanghai_edu.ods_subscribe partition(year='2017');loaddatalocal inpath '/opt/project/shanghai_edu/data/subscribe/subscribe_2018.csv'intotable shanghai_edu.ods_subscribe partition(year='2018');loaddatalocal inpath '/opt/project/shanghai_edu/data/subscribe/subscribe_2019.csv'intotable shanghai_edu.ods_subscribe partition(year='2019');

使用 SQL 分析数据

外借次数、外借时间和用户类型的关系

CREATE EXTERNAL TABLEifnotexists shanghai_edu.adm_lo_cnt_time_with_type_dd

(`patron_type` STRING COMMENT'读者类型',`lend_out_cnt`bigintCOMMENT'借出数',`avg_lend_out_cnt`doublecomment'平均借出数',`min_duration`doublecomment'最小借出时间',`avg_duration`doublecomment'平均借出时间',`max_duration`doublecomment'最大借出时间')COMMENT'外借次数、外借时间和用户类型的关系'

STORED AS PARQUET

LOCATION '/warehouse/shanghai_edu/adm/adm_lo_cnt_time_with_type_dd';insert overwrite table shanghai_edu.adm_lo_cnt_time_with_type_dd

select patron_type

,count(*) lend_out_cnt

,round(count(*)/count(DISTINCT(patron_id)),2) avg_lend_out_cnt

,round(min((unix_timestamp(returned_date,'yyyyMMdd')- unix_timestamp(loan_date,'yyyyMMdd'))/(60*60*24)),2) min_duration -- 单位为天,round(AVG((unix_timestamp(returned_date,'yyyyMMdd')- unix_timestamp(loan_date,'yyyyMMdd'))/(60*60*24)),2) avg_duration

,round(MAX((unix_timestamp(returned_date,'yyyyMMdd')- unix_timestamp(loan_date,'yyyyMMdd'))/(60*60*24)),2) max_duration

from shanghai_edu.ods_lend_out

groupby patron_type;

入馆次数、预约次数和读者类型的关系

CREATE EXTERNAL TABLEifnotexists shanghai_edu.adm_ent_subscribe_cnt_with_type

(`patron_type` STRING COMMENT'读者类型',`enter_cnt`bigintCOMMENT'总入馆次数/预约次数',`avg_lend_out_cnt`doublecomment'平均入馆次数/平均预约次数')COMMENT'入馆次数、预约次数和读者类型的关系'

partitioned by(stat_type STRING COMMENT'统计类型(enter/subscribe)')

STORED AS PARQUET

LOCATION '/warehouse/shanghai_edu/adm/adm_ent_subscribe_cnt_with_type';set hive.exec.dynamic.partition.mode=nonstrict;insert overwrite table shanghai_edu.adm_ent_subscribe_cnt_with_type partition(stat_type)select patron_type,count(*) enter_cnt,round(count(*)/count(DISTINCT(patron_id)),2) avg_enter_cnt,'enter' stat_type

from shanghai_edu.ods_enter

groupby patron_type

unionallselect patron_type,count(*) sub_cnt,round(count(*)/count(DISTINCT(patron_id)),2) avg_sub_cnt,'subscribe' stat_type

from shanghai_edu.ods_subscribe

groupby patron_type

;

各分馆各年份的预约不满足率

CREATE EXTERNAL TABLEifnotexists shanghai_edu.adm_subscribe_no_satisfy_lib_year_stat

(`no_fulfilled_ratio`doubleCOMMENT'预约不满足率',`sub_library_location_name` string COMMENT'分馆位置',`subscribe_year` string comment'预约年份')COMMENT'各分馆各年份的预约不满足率统计表'

STORED AS PARQUET

LOCATION '/warehouse/shanghai_edu/adm/adm_subscribe_no_satisfy_lib_year_stat';insert overwrite table shanghai_edu.adm_subscribe_no_satisfy_lib_year_stat

selectround(sum(if(fulfilled ='不满足',1,0))/count(1),4) no_fulfilled_ratio

, sub_library_location_name

, subscribe_year

from(// @formatter:offselectCASEWHEN fulfilled ='N'THEN'不满足'WHEN fulfilled ='Y'THEN'满足'ELSE'不知道'ENDAS fulfilled

,coalesce(b.sub_library_location_name,'-') sub_library_location_name

,coalesce(substr(open_date,1,4),'-') subscribe_year

from shanghai_edu.ods_subscribe a

leftjoin shanghai_edu.dim_sub_library b

on a.sub_library = b.sub_library_or_pickup_location

// @formatter:on) tb_tmp

GROUPBY sub_library_location_name, subscribe_year

with cube;

预约不到的 top10 书籍

CREATE EXTERNAL TABLEifnotexists shanghai_edu.adm_subscribe_no_satisfy_top

(`ISBN` string COMMENT'isbn',`cnt`bigintCOMMENT'预约不到次数')COMMENT'预约不到的 top10 书籍'

STORED AS PARQUET

LOCATION '/warehouse/shanghai_edu/adm/adm_subscribe_no_satisfy_top';insert overwrite table shanghai_edu.adm_subscribe_no_satisfy_top

select

ISBN

,count(1) cnt

from shanghai_edu.ods_subscribe

where fulfilled ='N'and ISBN !=''groupby ISBN

orderby cnt desclimit10;

入馆时间分布

CREATEFUNCTION parseHour AS'com.jxd.udf.ParseHour'USING JAR 'hdfs:///udf/yelp-udf-1.0-SNAPSHOT.jar';

reload function;show functions;dropfunctionifexists formatVisitTime;DESCRIBEFUNCTION formatVisitTime;CREATEFUNCTION formatVisitTime AS'com.jxd.udf.FormatVisitTime'USING JAR 'hdfs:///udf/yelp-udf-1.0-SNAPSHOT.jar';CREATE EXTERNAL TABLEifnotexists shanghai_edu.adm_enter_time_stat

(`holiday_name` string COMMENT'节日名称',`visit_hour` string COMMENT'入馆时间(小时)',`cnt`bigintcomment'入馆数量')COMMENT'入馆时间分布统计表'

STORED AS PARQUET

LOCATION '/warehouse/shanghai_edu/adm/adm_enter_time_stat';// @formatter:offinsert overwrite table shanghai_edu.adm_enter_time_stat

select holiday_name

, visit_hour

,count(1) cnt

from(selectif(b.holiday_name isnotnulland b.holiday_name !='', b.holiday_name,'非节假日') holiday_name

, parseHour(a.visit_time) visit_hour

from shanghai_edu.ods_enter a

leftjoin shanghai_edu.dim_date b on formatVisitTime(a.visit_time)= b.date_key

) tb_tmp

GROUPBY holiday_name, visit_hour

with cube;;

hive udf

使用 java 编写一个 hive 的 udf,打包。

将 jar 包上传到 hdfs 上:

hadoop fs -mkdir /udf

hadoop fs -put yelp-udf-1.0-SNAPSHOT.jar /udf/

进入 hive 终端中,使用如下命令创建永久函数:

CREATE FUNCTION upper_case_udf AS 'com.jxd.udf.UpperCaseUDF' USING JAR 'hdfs:///udf/yelp-udf-1.0-SNAPSHOT.jar';

hive 终端当前所处的数据库就是这个函数的默认存放数据库,例如:default,如果是在 default 数据库中创建的 udf,在 default 数据库中使用 udf 就可以不携带数据库前缀,如果是在其他数据库中使用这个函数,就需要使用指定这个函数所在的数据库前缀来使用这个函数,比如:select default.upper_case_udf(‘aaa’);

upper_case_udf 是函数名,com.jxd.udf.UpperCaseUDF 是这个 udf 的类全限定名,最后是 jar 包对应的 hdfs 地址。

可以使用如下命令刷新函数:

RELOAD FUNCTION;

使用如下命令查询某个 udf 的描述:

DESCRIBEFUNCTION<function_name>;

使用如下命令删除函数:

DROPFUNCTION upper_case_udf;

如何在 datagrip 中更新函数:

- 执行删除函数的命令:

DROPFUNCTION upper_case_udf;

- 关闭项目,File -> Close Project

- 重新创建函数

CREATEFUNCTION upper_case_udf AS'com.jxd.udf.UpperCaseUDF'USING JAR 'hdfs:///udf/yelp-udf-1.0-SNAPSHOT.jar';

导出 hive 数据到 mysql

本次使用 sqoop 将数据导出到 mysql:

bin/sqoop export\--connect"jdbc:mysql://node112:3306/shanghai_edu?useUnicode=true&characterEncoding=utf-8"\--username root \--password123456\--table adm_lo_cnt_time_with_type_dd \

--num-mappers 1\

--hcatalog-database shanghai_edu \

--hcatalog-table adm_lo_cnt_time_with_type_dd

--hive-partition-key <partition_key>\

--warehouse-dir <hdfs_path_to_hive_table>

导出分区表的所有数据:

bin/sqoop export\--connect"jdbc:mysql://node112:3306/shanghai_edu?useUnicode=true&characterEncoding=utf-8"\--username root \--password123456\--table adm_ent_subscribe_cnt_with_type \

--num-mappers 1\

--hcatalog-database shanghai_edu \

--hcatalog-table adm_ent_subscribe_cnt_with_type

--hive-partition-key stat_type \

--warehouse-dir /warehouse/shanghai_edu/adm/adm_ent_subscribe_cnt_with_type

问题

windows 上的中文文件名的文件上传到 centos 后变成乱码

原因在于,Windows 的文件名中文编码默认为 GBK,压缩或者上传后,文件名还会是 GBK 编码,而 Linux 中默认文件名编码为 UTF8,由于编码不一致所以导致了文件名乱码的问题,解决这个问题需要对文件名进行转码。

安装 convmv:

yum install convmv

使用 convmv 将当前文件夹下的所有文件名转为 udf-8 编码:

convmv -f gbk -t utf-8 --notest-r ./

版权归原作者 叶 落 所有, 如有侵权,请联系我们删除。