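Problem description

The cluster has 2 NameNodes (NN), 3 JournalNodes (JN) and 40 DataNodes (DN), and runs Hadoop 3.3.1. It uses two-way replication (replication factor 2) and no erasure coding.

The problem looks like this: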

bin/hdfs fsck -list-corruptfileblocks /

The list of corrupt files under path '/' are:

blk_1073779849 /warehouse/hive/customer_demographics/data-m-00488

blk_1073783404 /warehouse/hive/store_sales/data-m-00680

blk_1073786196 /warehouse/hive/catalog_sales/data-m-00015

blk_1073789561 /warehouse/hive/catalog_sales/data-m-00433

blk_1073798994 /warehouse/hive/web_sales/data-m-00214

blk_1073799110 /warehouse/hive/store_sales/data-m-00366

blk_1073800126 /warehouse/hive/web_sales/data-m-00336

blk_1073801710 /warehouse/hive/store_sales/data-m-00974

blk_1073807710 /warehouse/hive/inventory/data-m-01083

blk_1073809488 /warehouse/hive/store_sales/data-m-01035

blk_1073810929 /warehouse/hive/catalog_sales/data-m-01522

blk_1073811947 /warehouse/hive/customer_address/data-m-01304

blk_1073816024 /warehouse/hive/catalog_sales/data-m-01955

blk_1073819563 /warehouse/hive/web_sales/data-m-01265

blk_1073821438 /warehouse/hive/store_sales/data-m-01662

blk_1073822433 /warehouse/hive/web_sales/data-m-01696

blk_1073827763 /warehouse/hive/web_sales/data-m-01880

......
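Everything that follows starts from this list of block IDs and files. With hundreds of entries, it helps to turn the output into a block → file mapping. Below is a minimal Python sketch of such a parser. It assumes the one-entry-per-line `blk_<id> <path>` format shown above. The function name `parse_corrupt_blocks` is hypothetical.

```python
import re

# One corrupt-block entry: "blk_<id>", whitespace, then the file path.
CORRUPT_LINE = re.compile(r"^(blk_\d+)\s+(\S.*)$")


def parse_corrupt_blocks(fsck_output: str) -> dict[str, str]:
    """Map block name -> file path from `hdfs fsck -list-corruptfileblocks` output."""
    blocks: dict[str, str] = {}
    for line in fsck_output.splitlines():
        match = CORRUPT_LINE.match(line.strip())
        if match:  # header lines, blank lines and "......" are skipped
            blocks[match.group(1)] = match.group(2).strip()
    return blocks


if __name__ == "__main__":
    sample = """The list of corrupt files under path '/' are:

blk_1073779849 /warehouse/hive/customer_demographics/data-m-00488

blk_1073807710 /warehouse/hive/inventory/data-m-01083
"""
    for block, path in parse_corrupt_blocks(sample).items():
        print(block, path)
```

In practice you would save the command output first, for example `bin/hdfs fsck -list-corruptfileblocks / > corrupt.txt`, and pass the file contents to the function.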

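So many blocks were missing that the fraction of reported blocks stayed below the NameNode's safe-mode threshold. As a result, the NameNode stayed in safe mode after startup.

NameNode startup log: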

2023-02-23 18:50:38,336 INFO org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream: Fast-forwarding stream 'http://journalnode-2:8480/getJournal?jid=cdp1&segmentTxId=225840498&storageInfo=-66%3A2104128967%3A1665026133759%3Acdp&inProgressOk=true' to transaction ID 225840498

2023-02-23 18:50:38,338 INFO org.apache.hadoop.hdfs.StateChange: STATE* Safe mode ON.

The reported blocks 3102206 needs additional 729 blocks to reach the threshold 0.9990 of total blocks 3106041.

The minimum number of live datanodes is not required. Safe mode will be turned off automatically once the thresholds have been reached.

2023-02-23 18:50:38,456 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Loaded 1 edits file(s) (the last named http://journalnode-2:8480/getJournal?jid=cdp1&segmentTxId=225840498&storageInfo=-66%3A2104128967%3A1665026133759%3Acdp&inProgressOk=true, http://journalnode-1:8480/getJournal?jid=cdp1&segmentTxId=225840498&storageInfo=-66%3A2104128967%3A1665026133759%3Acdp&inProgressOk=true) of total size 1122.0, total edits 8.0, total load time 119.0 ms

2023-02-23 18:51:31,403 WARN org.apache.hadoop.hdfs.server.namenode.NameNode: Allowing manual HA control from 172.16.0.216 even though automatic HA is enabled, because the user specified the force flag
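The numbers in the safe-mode message can be checked by hand. 3106041 − 3102206 = 3835 blocks were not reported at all. The NameNode needs 729 of them back before the reported fraction reaches 0.999, which is the default value of `dfs.namenode.safemode.threshold-pct` and matches the log.

The sketch below reproduces the 729 under one assumption: the NameNode computes the threshold as `(long)(total * threshold)`, with the threshold held as a Java `float`. With plain double arithmetic the same formula gives 728 instead.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (a C double) to the nearest IEEE-754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def safe_mode_gap(reported: int, total: int, threshold_pct: float = 0.999) -> int:
    """Blocks still needed before the safe-mode block threshold is reached.

    Assumption: threshold = (long)(total * threshold_pct), evaluated in Java float
    arithmetic because the configured percentage is held as a float.
    """
    threshold = to_float32(threshold_pct)
    total_f = to_float32(float(total))  # Java converts the long operand to float first
    # The product of two float32 values is exact in double; rounding it mimics a float multiply.
    block_threshold = int(to_float32(total_f * threshold))  # int() truncates like a (long) cast
    return max(0, block_threshold - reported)


if __name__ == "__main__":
    reported, total = 3102206, 3106041  # values from the NameNode log above
    print("blocks not reported:", total - reported)
    print("blocks needed to leave safe mode:", safe_mode_gap(reported, total))
```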

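Troubleshooting approach

Find the DataNodes that hold the missing blocks

When data is written to the cluster, the NameNode logs which DataNodes each newly allocated block is assigned to. The log line looks like this: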

INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073807710, replicas=172.16.0.244:9866, 172.16.0.227:9866 for /warehouse/hive/inventory/data-m-01083

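You can therefore search all of the NameNode logs for each missing block ID to find the DataNodes it was written to. Then log in to those DataNodes and check whether the block files are still there.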
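Searching by hand gets tedious with hundreds of missing blocks. Below is a minimal Python sketch of the search. It assumes the `BLOCK* allocate` line format shown above. The optional `_<genstamp>` suffix is also an assumption, added so that both `blk_<id>` and `blk_<id>_<gs>` forms match. The function name `find_replicas` is hypothetical.

```python
import re
from typing import Iterable

# NameNode StateChange line, e.g.
# "BLOCK* allocate blk_1073807710, replicas=172.16.0.244:9866, 172.16.0.227:9866 for /path"
# The optional "_<genstamp>" after the block ID is an assumption.
ALLOCATE = re.compile(r"BLOCK\* allocate (blk_\d+)(?:_\d+)?, replicas=(.*?) for ")


def find_replicas(log_lines: Iterable[str], missing: set[str]) -> dict[str, list[str]]:
    """Return {block name: [DataNode addresses]} for the blocks listed in `missing`."""
    result: dict[str, list[str]] = {}
    for line in log_lines:
        match = ALLOCATE.search(line)
        if not match or match.group(1) not in missing:
            continue
        nodes = result.setdefault(match.group(1), [])
        for node in match.group(2).split(","):
            node = node.strip()
            if node and node not in nodes:  # skip duplicates from overlapping log files
                nodes.append(node)
    return result
```

Pass it an open log file (any iterable of lines) together with the block names from the fsck output. Blocks written long ago may only appear in rotated log files, so include those as well.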

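Because the cluster uses two replicas, every block is stored on two DataNodes. A block reported as missing means the NameNode's metadata does not match what the DataNodes report: the NameNode knows the block, but no live DataNode reports a replica of it. So either the data on both replica nodes is gone (deleted or cleaned up), or both nodes are down at the same time and cannot serve requests.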
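The reasoning above boils down to a set check: with two replicas, a block is missing only when none of the nodes it was written to is still alive. The minimal sketch below assumes you already have each block's replica addresses (for example from the allocation log lines) and the set of live DataNodes. Allocation logs only record where a block was first written, so later re-replication or balancing may have moved it. The node names in the example are made up.

```python
def missing_blocks(replicas: dict[str, list[str]], live_nodes: set[str]) -> list[str]:
    """Blocks none of whose recorded replica locations is a live DataNode."""
    return sorted(
        block
        for block, nodes in replicas.items()
        if not any(node in live_nodes for node in nodes)
    )


if __name__ == "__main__":
    # Hypothetical blocks and node names, for illustration only.
    replicas = {
        "blk_a": ["dn1:9866", "dn2:9866"],
        "blk_b": ["dn2:9866", "dn3:9866"],
    }
    print(missing_blocks(replicas, {"dn3:9866"}))  # ['blk_a']
```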

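Following this reasoning, check in HDFS whether those two nodes are healthy.

Method 1: check DataNode status in the HDFS web UI

Several nodes had indeed gone down at the same time. Only one node is shown here because I had already repaired the others manually by then.

Method 2: check from the command line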

[root@namenode-2-0 hadoop-3.3.1]# bin/hdfs dfsadmin -report -dead

Configured Capacity: 63271003226112 (57.54 TB)

Present Capacity: 62179794033930 (56.55 TB)

DFS Remaining: 28580973518090 (25.99 TB)

DFS Used: 33598820515840 (30.56 TB)

DFS Used%: 54.03%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

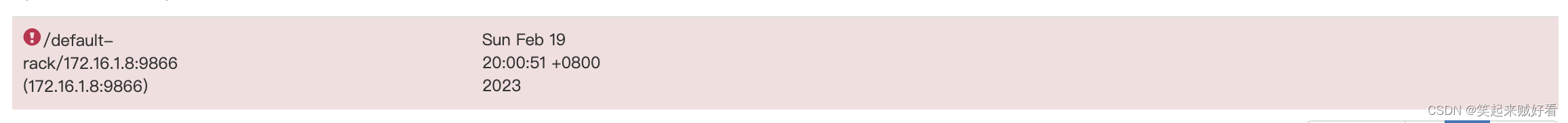

Dead datanodes (1):

Name: 172.16.1.8:9866 (datanode-16)

Hostname: 172.16.1.8

Decommission Status : Normal

Configured Capacity: 1437977346048 (1.31 TB)

DFS Used: 754816651264 (702.98 GB)

Non DFS Used: 22427271168 (20.89 GB)

DFS Remaining: 660731326464 (615.35 GB)

DFS Used%: 52.49%

DFS Remaining%: 45.95%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 0

Last contact: Sun Feb 19 20:00:51 CST 2023

Last Block Report: Sun Feb 19 14:24:53 CST 2023

Num of Blocks: 0
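When the dead list is long, you can pull the addresses out of the report. They can then be compared with the replica addresses found in the allocation logs, since both use the same `ip:9866` form. Below is a minimal Python sketch. It assumes each node section starts with a `Name: <ip:port> (<hostname>)` line, as in the output above. The function name `dead_datanodes` is hypothetical.

```python
import re

# Each DataNode section of `hdfs dfsadmin -report` starts with a line like
# "Name: 172.16.1.8:9866 (datanode-16)".
NAME_LINE = re.compile(r"^Name:\s+(\S+)(?:\s+\((.*)\))?\s*$")


def dead_datanodes(report: str) -> list[tuple[str, str]]:
    """Return (address, name in parentheses) pairs from `dfsadmin -report -dead` output."""
    nodes: list[tuple[str, str]] = []
    for line in report.splitlines():
        match = NAME_LINE.match(line.strip())
        if match:
            nodes.append((match.group(1), match.group(2) or ""))
    return nodes


if __name__ == "__main__":
    sample = "Dead datanodes (1):\n\nName: 172.16.1.8:9866 (datanode-16)\n\nHostname: 172.16.1.8\n"
    print(dead_datanodes(sample))  # [('172.16.1.8:9866', 'datanode-16')]
```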

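This shows that the node is faulty, so it is the place to try to recover the data from.

However, this alone does not prove that the missing blocks are actually stored on this node.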

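You still need to log in to the node and go to this directory under the DataNode data directory:

BP-344496998-172.16.0.216-1665026133759/current/

Then run:

find . -name 'blk_*'

This checks whether the missing blocks are still on this node. To look for one specific block, narrow the pattern, for example find . -name 'blk_1073807710*'.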
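On a node with many disks and millions of block files, a scripted check is easier than reading `find` output. This Python sketch assumes the usual naming: `blk_<id>` for the data file and `blk_<id>_<genstamp>.meta` for its checksum file. It takes the block pool directory as input. The function name `locate_blocks` is hypothetical.

```python
import os
import re

# Data file "blk_<id>" or checksum file "blk_<id>_<genstamp>.meta".
BLOCK_FILE = re.compile(r"^(blk_\d+)(?:_\d+\.meta)?$")


def locate_blocks(block_pool_dir: str, wanted: set[str]) -> dict[str, list[str]]:
    """Return {block name: [file paths]} for every wanted block found under block_pool_dir."""
    found: dict[str, list[str]] = {}
    for root, _dirs, files in os.walk(block_pool_dir):
        for name in files:
            match = BLOCK_FILE.match(name)
            if match and match.group(1) in wanted:
                found.setdefault(match.group(1), []).append(os.path.join(root, name))
    return found
```

Blocks missing from the returned dictionary are not on this node's disk (under that block pool directory) and have to be looked for elsewhere.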

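Data recovery

Restart the failed DataNode

If the situation is not too serious, for example the node went down because of a hardware issue (disk, memory module, network), log in to the node and restart the service. Once the DataNode starts normally, it automatically reports the blk_* blocks stored on its disks to the NameNode. The NameNode then restores the missing block locations on its own.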

bin/hdfs --daemon start datanode

Then check the DataNode log to confirm that it started cleanly.

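Node cannot be started: recover the data manually

The DataNode service may fail to start for some other, external reason while the blk files are still on disk. In that case, the only option is to copy the block files to a healthy DataNode and then manually trigger a block report on it.

Healthy DN 172.16.1.6, directory /data/current/BP-344496998-172.16.0.216-1665026133759

Failed DN 172.16.1.8, directory /data/current/BP-344496998-172.16.0.216-1665026133759

Steps:

- Copy the block files to the healthy node

- Manually trigger a block report

Script for copying the block files: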

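ssh root@172.16.1.6

scp -r root@172.16.1.8:/data/current/BP-344496998-172.16.0.216-1665026133759 /data/current/BP-344496998-172.16.0.216-1665026133759

cd /data/current/BP-344496998-172.16.0.216-1665026133759/BP-344496998-172.16.0.216-1665026133759

for i in $(find . -name 'blk_*' -type f); do
  j=${i%/*}            # directory part, e.g. ./current/finalized/subdir31/subdir31
  mkdir -p ".${j}"     # make sure the target directory exists in the live block pool
  echo "mv ${i} .${j}"
  mv -f "${i}" ".${j}"
done

The target directory already exists, so scp -r places the copy in a nested BP-344496998-172.16.0.216-1665026133759 directory. The loop runs from inside that copy. It moves every blk_* file (data and .meta) into the same relative path one level up, which is the live block pool. The resulting commands look like this:

mv ./current/finalized/subdir31/subdir31/blk_1105166085_31429045.meta ../current/finalized/subdir31/subdir31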

......
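The move loop keeps each file at the same relative path for a reason. In the block-ID-based layout used by recent Hadoop releases, a finalized block's directory is derived from its ID.

The sketch below computes that directory. It assumes the 32×32 `subdirN/subdirM` scheme, where N comes from bits 16 to 20 and M from bits 8 to 12 of the block ID. Check it against `DatanodeUtil.idToBlockDir` in your Hadoop version before relying on it. It agrees with the example above, where blk_1105166085 sits in subdir31/subdir31.

```python
def finalized_subdir(block_id: int) -> str:
    """Relative directory of a finalized block inside <block pool>/current.

    Assumption: the 32x32 layout where the first level comes from bits 16-20
    and the second level from bits 8-12 of the block ID.
    """
    d1 = (block_id >> 16) & 0x1F
    d2 = (block_id >> 8) & 0x1F
    return f"finalized/subdir{d1}/subdir{d2}"


if __name__ == "__main__":
    print(finalized_subdir(1105166085))  # finalized/subdir31/subdir31
    print(finalized_subdir(1073807710))  # finalized/subdir1/subdir1
```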

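Finally, trigger a block report on the DataNode that received the files. The address to use is the DataNode's IPC address (dfs.datanode.ipc.address, 9867 by default in Hadoop 3):

bin/hdfs dfsadmin -triggerBlockReport 172.16.1.6:9867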
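If blocks were copied to more than one DataNode, the command has to be run once per node. Below is a minimal Python wrapper. It assumes the `hdfs` launcher at `bin/hdfs` and the Hadoop 3 default DataNode IPC port 9867, and both can be overridden. The function names are hypothetical.

```python
import subprocess
from typing import Iterable

DEFAULT_DN_IPC_PORT = 9867  # Hadoop 3 default port of dfs.datanode.ipc.address


def trigger_block_report_cmd(host: str, port: int = DEFAULT_DN_IPC_PORT,
                             hdfs_bin: str = "bin/hdfs", incremental: bool = False) -> list[str]:
    """Build the argv for `hdfs dfsadmin -triggerBlockReport [-incremental] host:port`."""
    cmd = [hdfs_bin, "dfsadmin", "-triggerBlockReport"]
    if incremental:
        cmd.append("-incremental")
    cmd.append(f"{host}:{port}")
    return cmd


def trigger_block_reports(hosts: Iterable[str], port: int = DEFAULT_DN_IPC_PORT,
                          hdfs_bin: str = "bin/hdfs") -> None:
    """Run a full block report on each DataNode, stopping at the first failure."""
    for host in hosts:
        subprocess.run(trigger_block_report_cmd(host, port, hdfs_bin), check=True)
```

For example, `trigger_block_reports(["172.16.1.6"])` runs the same command as above on the healthy node.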

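After this, all of the data was recovered and the missing-block problem was resolved.

Copyright belongs to the original author, 笑起来贼好看. In case of infringement, please contact us to have it removed.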