Python Selenium 爬虫通过network抓包response获取新增职位信息,并定时推送给邮箱

背景

在获取某些网站的招聘信息时,没有给出岗位的发布时间信息,导致用户无法识别每天新增的职位信息,大量的职位混杂一起,无法识别热点职位,错失最新的招募信息.

问题

通过对信息发布网站调研发现.

在网页上的信息是不全的,通过Chrome开发者工具找到最详细的数据是在network种的Fetch/XHR请求的返回体,所以用传统的find element by Xpath具有一定的局限性,这里利用selenium的performance日志能抓取network的包返回信息,从而得到需要的数据.

解决方案

- 通过selenium performance日志抓取所有Method = Network.responseReceived 的返回.

- 拉取到返回包的RequestID,并通过driver.execute_cdp_cmd以RequestId作为参数,重新请求获得RequestBody.

- 将所有的职位信息解析并落库.

- 按照时间切片,每天统计出相比于昨天新增的岗位信息.

- 通过Crontab定时发送邮件,每日推送.

代码

import time

import pymysql

import json

import datetime

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

import smtplib

from email.mime.text import MIMEText

from email.header import Header

defspiderMain():

db = pymysql.connect(host='localhost', user='root', password='', database='databases', port=3306)

cursor = db.cursor()

cursor.execute("select * from bytedance where create_date = '%s'"% datetime.date.today())

data = cursor.fetchone()if data isnotNone:print('今天已经存在数据,直接发邮件... ')returnprint(' 今天没有数据 开始爬数据... ')

options = Options()

options.set_capability('goog:loggingPrefs',{'performance':'ALL'})# 打开浏览器

driver = webdriver.Chrome(options=options)defprocess_browser_log_entry(entry):

response = json.loads(entry['message'])['message']return response

driver.get('需要爬取的URL')

time.sleep(10)

browser_log = driver.get_log('performance')

events =[process_browser_log_entry(entry)for entry in browser_log]

events =[event for event in events

if'Network.responseReceived'in event['method']and'response'in event['params'].keys()and'posts'in event['params']['response']['url']]

resp = driver.execute_cdp_cmd('Network.getResponseBody',{'requestId': events[0]['params']['requestId']})# print(resp)

bodyjson = json.loads(resp['body'])for jobAttrs in bodyjson['data']['job_post_list']:

uuid = jobAttrs['id']

jobUrl ='https://jobs.?.com/experienced/position/'+ uuid +'/detail'

job_name = jobAttrs['title']

description = jobAttrs['description']

requirement = jobAttrs['requirement']# print(uuid + job_name + description + requirement)

sql =f'insert into bytedance (`uuid`,`name`,`description`,url,job_attr,jd,create_date) values (%s,%s,%s,%s,%s,%s,%s)'

today = datetime.date.today()

cursor.execute(sql,(uuid, job_name, description, jobUrl,"", requirement, today))

db.commit()

db.close()

driver.quit()defgetTodayNewDatum():

db = pymysql.connect(host='localhost', user='root', password='', database='databases', port=3306)

cursor = db.cursor()

sql_today ="select * from bytedance where create_date = '%s'"% datetime.date.today()

sql_yes ="select * from bytedance where create_date = '%s'"% getYesterday()

cursor.execute(sql_today)

data_today = cursor.fetchall()

cursor.execute(sql_yes)

data_yes = cursor.fetchall()print(len(data_today))print(len(data_yes))

list_yeskey =[]for data_y in data_yes:

list_yeskey.append(data_y[1]+ data_y[2])

ret =[]for data_t in data_today:if(data_t[1]+ data_t[2]notin list_yeskey):

ret.append(data_t)return ret

defgetYesterday():

today = datetime.date.today()

oneday = datetime.timedelta(days=1)

yesterday = today - oneday

return yesterday

defsendMail(datum):#设置服务器所需信息#163邮箱服务器地址

mail_host ='smtp.163.com'#163用户名

mail_user =''#密码(部分邮箱为授权码)

mail_pass =''#邮件发送方邮箱地址

sender =''#邮件接受方邮箱地址,注意需要[]包裹,这意味着你可以写多个邮件地址群发

receivers =['']

mail_msg ="""

<p> 统计的 ❤️ 新增岗位详情</p>

"""for data in datum:

subStr =""

jobHerf = data[8]

jobName = data[2]

subStr = subStr +'<p><a href=\"%s\">%s</a></p>'%(jobHerf,jobName)

subStr+='<p><p>---job description---</p>'

jobDes = data[3]for descri in jobDes.split('\n'):

subStr+='<li>%s</li>'%(descri)

subStr+='</p>'

subStr +='<p><p>---job requirement---</p>'

jobJd = data[4]for jd in jobJd.split('\n'):

subStr +='<li>%s</li>'%(jd)

subStr +='</p>'

mail_msg = mail_msg + subStr

message = MIMEText(mail_msg,'html','utf-8')#邮件主题

subject ='ByteDance 今日新增产品职位-深圳'

message['Subject']= Header(subject,'utf-8')#发送方信息

message['From']= sender

#接受方信息

message['To']= receivers[0]#登录并发送邮件try:

smtpObj = smtplib.SMTP()#连接到服务器

smtpObj.connect(mail_host,25)#登录到服务器

smtpObj.login(mail_user,mail_pass)#发送

smtpObj.sendmail(

sender,receivers,message.as_string())#退出

smtpObj.quit()print('success')except smtplib.SMTPException as e:print('error',e)#打印错误

spiderMain()

datum = getTodayNewDatum()

sendMail(datum)

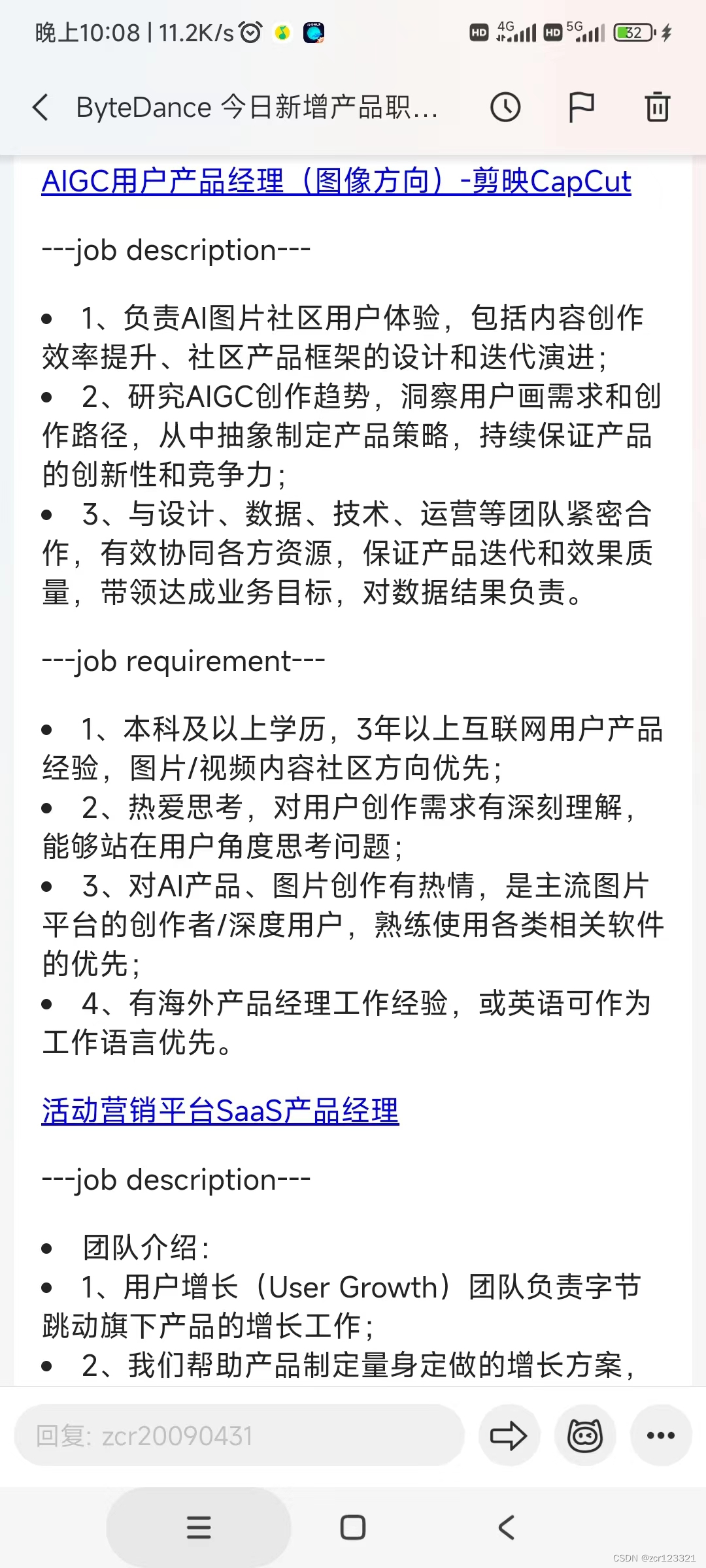

效果

版权归原作者 zcr123321 所有, 如有侵权,请联系我们删除。