1. Background

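Selenium drives a real browser and simulates user actions in it, which lets it scrape data from rendered pages. You also need to install a browser driver (for Chrome, ChromeDriver); that setup is not covered here.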

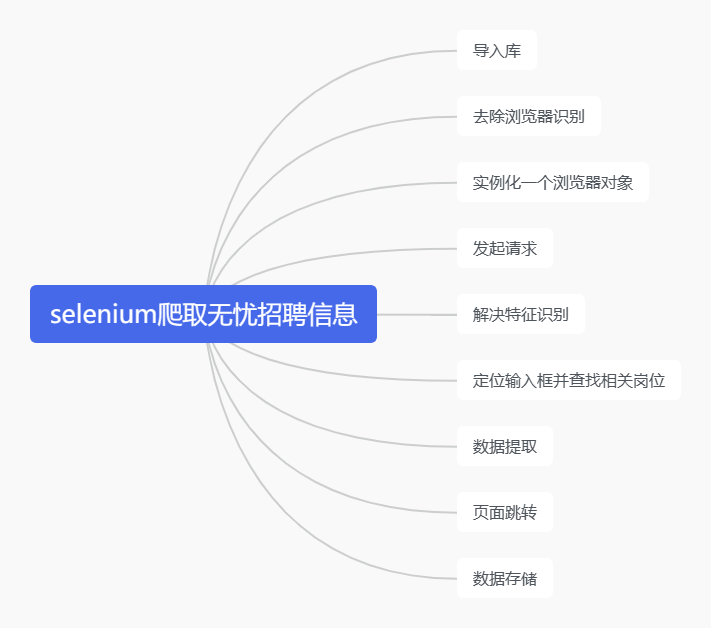

Mind map:

2. Import libraries

```python
import csv
import random
import time

from selenium import webdriver
from selenium.webdriver import ActionChains  # imported in the original; not used in the snippets below
from selenium.webdriver import ChromeOptions
from selenium.webdriver.common.by import By
```

3. Hide browser automation indicators

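```python
option = ChromeOptions()
# Hide the "Chrome is being controlled by automated test software" infobar
option.add_experimental_option('excludeSwitches', ['enable-automation'])
# Keep the browser window open after the script finishes
option.add_experimental_option('detach', True)
```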

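The `excludeSwitches` option removes the "Chrome is being controlled by automated test software" banner at the top of the browser. The `detach` option keeps the browser window open after the script exits.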

4. Instantiate a browser object (Selenium launches Chrome through the browser driver, ChromeDriver)

```python
driver = webdriver.Chrome(options=option)
```

5. Send the request

```python
driver.get("https://www.51job.com/")
time.sleep(2)  # sleep 2 seconds in case the page loads slowly
```

6. Work around feature detection

```python
# Make navigator.webdriver report false on the currently loaded page
script = 'Object.defineProperty(navigator, "webdriver", {get: () => false,});'
driver.execute_script(script)
```

No verification dialog or slider CAPTCHA appeared, which suggests that Selenium detection was successfully masked.
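Note that `execute_script` only patches the page that is currently loaded. Once the browser navigates to a new page, `navigator.webdriver` is back to its original value. With Chrome, a common alternative is to register the script through the Chrome DevTools Protocol command `Page.addScriptToEvaluateOnNewDocument`, which Selenium's Chrome driver exposes as `driver.execute_cdp_cmd`. Chrome then runs the script before page scripts on every document loaded afterwards.

Below is a minimal sketch, assuming a Selenium Chrome/Chromium driver. The helper name `hide_webdriver_flag` is hypothetical, and the helper would be called right after step 4 and before `driver.get(...)`:

```python
def hide_webdriver_flag(driver):
    """Register a script that Chrome runs on every new document (hypothetical helper).

    `driver` is assumed to be a Selenium Chrome/Chromium WebDriver, which
    provides execute_cdp_cmd(cmd, cmd_args).
    """
    script = 'Object.defineProperty(navigator, "webdriver", {get: () => false});'
    # Unlike execute_script, this also applies to pages loaded later in the session.
    return driver.execute_cdp_cmd(
        "Page.addScriptToEvaluateOnNewDocument", {"source": script}
    )
```

Usage: `driver = webdriver.Chrome(options=option)`, then `hide_webdriver_flag(driver)`, then `driver.get("https://www.51job.com/")`. Whether this is enough to avoid the site's checks is not guaranteed.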

7. Locate the search box and search for jobs

```python
# Keyword input box on the 51job home page (look it up once and reuse it)
search_box = driver.find_element(By.XPATH, '//*[@id="kwdselectid"]')
search_box.click()
search_box.clear()
search_box.send_keys('老师')  # search keyword ("teacher")
# Search button
driver.find_element(By.XPATH, '/html/body/div[3]/div/div[1]/div/button').click()

# driver.implicitly_wait(10)
time.sleep(5)  # wait for the results page to load

print(driver.current_url)
```

The keyword entered here is "老师" ("teacher"); change it to suit your needs.

8. Extract data with XPath and CSS selectors

```python
# Each element matched here is one job card on the results page
jobData = driver.find_elements(By.XPATH, '//*[@id="app"]/div/div[2]/div/div/div[2]/div/div[2]/div/div[2]/div[1]/div')

for job in jobData:
    # CSS class selectors: '.jname.at' matches an element with both the
    # "jname" and "at" classes (Selenium turns By.CLASS_NAME 'jname.at' into the same selector)
    jobName = job.find_element(By.CSS_SELECTOR, '.jname.at').text
    time.sleep(random.randint(5, 15) * 0.1)
    jobSalary = job.find_element(By.CSS_SELECTOR, '.sal').text
    time.sleep(random.randint(5, 15) * 0.1)
    jobCompany = job.find_element(By.CSS_SELECTOR, '.cname.at').text
    time.sleep(random.randint(5, 15) * 0.1)
    company_type_size = job.find_element(By.CSS_SELECTOR, '.dc.at').text
    time.sleep(random.randint(5, 15) * 0.1)
    company_status = job.find_element(By.CSS_SELECTOR, '.int.at').text
    time.sleep(random.randint(5, 15) * 0.1)
    address_experience_education = job.find_element(By.CSS_SELECTOR, '.d.at').text
    time.sleep(random.randint(5, 15) * 0.1)
    try:
        # The benefits list is stored in the title attribute of the tags element
        job_welf = job.find_element(By.CSS_SELECTOR, '.tags').get_attribute('title')
    except Exception:  # e.g. NoSuchElementException when a posting lists no benefits
        job_welf = '无数据'  # "no data"
    time.sleep(random.randint(5, 15) * 0.1)
    update_date = job.find_element(By.CSS_SELECTOR, '.time').text
    time.sleep(random.randint(5, 15) * 0.1)
    print(jobName, jobSalary, jobCompany, company_type_size, company_status,
          address_experience_education, job_welf, update_date)
```

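To avoid triggering the site's anti-scraping measures, the program sleeps for a random interval after reading each field. Adjust the range to suit your needs.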
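The repeated `time.sleep(random.randint(5, 15) * 0.1)` calls could be factored into a small helper. The sketch below is hypothetical: the name `polite_sleep` is made up, and it swaps in `random.uniform` to draw from a continuous range instead of the original 0.1-second steps. The `sleep` parameter is injectable so the helper can be exercised without actually waiting.

```python
import random
import time


def polite_sleep(low=0.5, high=1.5, sleep=time.sleep):
    """Sleep for a random duration between low and high seconds and return it.

    random.uniform draws from a continuous range, whereas the original
    random.randint(5, 15) * 0.1 only yields 0.5, 0.6, ..., 1.5.
    """
    delay = random.uniform(low, high)
    sleep(delay)
    return delay
```

In the loop, each `time.sleep(random.randint(5, 15) * 0.1)` would then become `polite_sleep()`. For the longer pauses in step 9, it would become `polite_sleep(1.0, 3.0)`.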

Some postings have no benefits field, so that lookup is wrapped in `try...except` and falls back to "无数据" ("no data").
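An alternative to `try...except` is `find_elements` (plural), which returns an empty list instead of raising `NoSuchElementException` when nothing matches.

The sketch below makes the extraction table-driven. Its selectors are the ones from step 8. The names `FIELDS` and `extract_job` are hypothetical. Unlike the original, the sketch applies the default to every missing field, not only the benefits.

To keep the sketch runnable without Selenium, it passes the string `"css selector"` directly. That string is the value of `By.CSS_SELECTOR`; in real code you would use `By.CSS_SELECTOR`.

```python
# "css selector" is the value of selenium's By.CSS_SELECTOR.
CSS = "css selector"

# (column name, CSS selector, attribute to read or None for the element text)
FIELDS = [
    ("jobName", ".jname.at", None),
    ("jobSalary", ".sal", None),
    ("jobCompany", ".cname.at", None),
    ("company_type_size", ".dc.at", None),
    ("company_status", ".int.at", None),
    ("address_experience_education", ".d.at", None),
    ("job_welf", ".tags", "title"),
    ("update_date", ".time", None),
]


def extract_job(job, default="无数据"):
    """Return one job card's fields as a list, using `default` for missing ones."""
    row = []
    for _name, selector, attr in FIELDS:
        # find_elements returns [] when nothing matches, so no exception handling is needed
        found = job.find_elements(CSS, selector)
        if not found:
            row.append(default)
        elif attr:
            row.append(found[0].get_attribute(attr))
        else:
            row.append(found[0].text)
    return row
```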

9. Locate the page-number input and jump to another page

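The page-number input box is located with XPath. The program then types in the page number and clicks the jump button. To reduce the risk of being flagged by anti-scraping checks, the program sleeps for a random interval after each action.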

```python
# `page` is the target page number; it must come from an outer loop over pages,
# which the original snippet does not show.
page_input = driver.find_element(By.XPATH, '//*[@id="jump_page"]')  # page-number input box
page_input.click()
time.sleep(random.randint(10, 30) * 0.1)
page_input.clear()
time.sleep(random.randint(10, 40) * 0.1)
page_input.send_keys(str(page))
time.sleep(random.randint(10, 30) * 0.1)
# Click the button next to the input to perform the jump
driver.find_element(By.XPATH,
                    '//*[@id="app"]/div/div[2]/div/div/div[2]/div/div[2]/div/div[3]/div/div/span[3]').click()
```
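The jump code relies on a variable `page` that the original never defines, so it presumably sits inside a loop over page numbers. One way the steps could fit together is sketched below. The browser-specific parts are passed in as functions, so the control flow can be read on its own.

All the names here are hypothetical:

- `crawl` is the loop itself.
- `scrape_page` would wrap the step 8 loop and return one row per job.
- `go_to_page` would wrap the jump code above.
- `save_row` would wrap the CSV write from step 10.

```python
def crawl(scrape_page, go_to_page, last_page, save_row):
    """Scrape pages 1..last_page and return the number of rows saved.

    Assumes page 1 is already loaded (after the search in step 7), so the
    jump is only performed for pages 2 and later.
    """
    saved = 0
    for page in range(1, last_page + 1):
        if page > 1:
            go_to_page(page)  # e.g. type str(page) into #jump_page and click the jump button
        for row in scrape_page():
            save_row(row)  # save every row inside the loop, not just the last one
            saved += 1
    return saved
```

Keeping the loop separate from the Selenium calls also makes it easy to stop early or retry a page without touching the extraction code.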

10. Data storage

The extracted data is saved to a CSV file in append mode.

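```python
# Run this inside the `for job in jobData` loop from step 8 so that every job is written.
# utf-8-sig adds a BOM to a new file so that Excel displays the Chinese text correctly.
with open('wuyou_teacher.csv', 'a', newline='', encoding='utf-8-sig') as csvfile:
    writer = csv.writer(csvfile)
    writer.writerow([jobName, jobSalary, jobCompany, company_type_size, company_status,
                     address_experience_education, job_welf, update_date])
```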
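The original CSV has no header row. The sketch below writes one only when the file is new or empty, so repeated runs in append mode do not duplicate it. The column names reuse the variable names from step 8, which is an assumption. The helper name `append_rows` is also hypothetical.

```python
import csv
import os

HEADER = ["jobName", "jobSalary", "jobCompany", "company_type_size",
          "company_status", "address_experience_education", "job_welf",
          "update_date"]


def append_rows(path, rows, header=HEADER):
    """Append rows to a CSV file, writing the header only if the file is new or empty."""
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    # utf-8-sig writes a BOM only at the start of a new file; when appending to a
    # non-empty file, Python's text layer does not write a second BOM.
    with open(path, "a", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        if write_header:
            writer.writerow(header)
        writer.writerows(rows)
```

Collecting each page's rows into a list and calling `append_rows('wuyou_teacher.csv', rows)` once per page also avoids reopening the file for every job.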

Copyright belongs to the original author, 吃颗枸杞吧. If this infringes on any rights, please contact us and it will be removed.