Introduction to Spring AI

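Spring AI is an application framework for AI engineering. It provides a friendly API and abstractions for developing AI applications, with the goal of simplifying how they are built, for example a chat application based on ChatGPT.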

- Project repository: https://github.com/spring-projects-experimental/spring-ai

- Documentation: https://docs.spring.io/spring-ai/reference/

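The project currently integrates APIs including OpenAI, Azure OpenAI, Hugging Face, and Ollama. Moreover, a project built on the OpenAI integration can be paired with the One-API project (a gateway that exposes many models through the OpenAI API format) to call most of today's mainstream large language models.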

Usage

Before showing how to build a chat endpoint with Spring AI, let me first explain how a ChatGPT application works.

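ChatGPT is a generative AI large language model (LLM) released by OpenAI. To encourage wide adoption, OpenAI exposes ChatGPT to developers through an API. A developer authenticates with an API key issued by OpenAI, sends requests to OpenAI's endpoint, and receives ChatGPT's responses. Building a ChatGPT application therefore does not mean training or developing AI models yourself. Instead, you act as an intermediary: your application sends requests to the ChatGPT API and builds its features on the responses.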
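The following minimal sketch makes the "request in, response out" principle concrete using only the JDK's `java.net.http` API. It builds, but does not send, the kind of HTTP request that a client library such as Spring AI sends for you: a POST to OpenAI's Chat Completions endpoint, with the key in a `Bearer` header and a JSON body. The class name `RawChatRequest`, the model name, and the simplified JSON escaping are illustrative assumptions, not Spring AI code.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical illustration of what an OpenAI chat client does under the hood.
final class RawChatRequest {

    // OpenAI's Chat Completions endpoint.
    static final String CHAT_URL = "https://api.openai.com/v1/chat/completions";

    // Builds (but does not send) an authenticated chat-completion request.
    static HttpRequest build(String apiKey, String model, String userMessage) {
        String body = "{\"model\":\"" + escapeJson(model) + "\","
                + "\"messages\":[{\"role\":\"user\",\"content\":\"" + escapeJson(userMessage) + "\"}]}";
        return HttpRequest.newBuilder(URI.create(CHAT_URL))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // Escapes only the common JSON special characters; real code should use a
    // JSON library such as Jackson, which Spring Boot already ships.
    static String escapeJson(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n")
                .replace("\r", "\\r")
                .replace("\t", "\\t");
    }
}
```

To actually send it, you would call `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`. The reply is JSON, and the answer text is in `choices[0].message.content`.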

Approach 1: OpenAI

1. Chat Q&A

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.2.4</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.example</groupId>
    <artifactId>ai</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>ai</name>
    <description>ai</description>
    <properties>
        <java.version>17</java.version>
    </properties>
    <dependencyManagement>
        <dependencies>
            <!-- Spring AI BOM: manages the versions of all spring-ai-* artifacts -->
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>0.8.1-SNAPSHOT</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <!-- Also pulled in transitively by the OpenAI starter below -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-openai</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-openai-spring-boot-starter</artifactId>
        </dependency>
        <!-- Only needed for Approach 2 (Ollama) -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
        </dependency>
    </dependencies>
    <repositories>
        <repository>
            <id>spring-milestones</id>
            <name>Spring Milestones</name>
            <url>https://repo.spring.io/milestone</url>
            <snapshots>
                <enabled>false</enabled>
            </snapshots>
        </repository>
        <repository>
            <id>spring-snapshots</id>
            <name>Spring Snapshots</name>
            <url>https://repo.spring.io/snapshot</url>
            <releases>
                <enabled>false</enabled>
            </releases>
        </repository>
    </repositories>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

application.yml

```yaml
spring:
  ai:
    openai:
      api-key: sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
      base-url: xxxxxxxxxxxxxxxxxxx
      chat:
        options:
          #model: gpt-3.5-turbo
          temperature: 0.3
```
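A note on `base-url`, offered with some hedging. As far as I know, the OpenAI client in Spring AI 0.8.x appends `/v1/chat/completions` to `base-url` itself. The value should therefore be the bare host, for example `https://api.openai.com` or the address of a One-API proxy, without a trailing `/v1`. Newer Spring AI versions may configure the path separately, so check the reference docs for your version. The hypothetical `EndpointResolver` below only mimics that joining rule to show its effect. The One-API address in the test is illustrative.

```java
// Hypothetical helper mimicking how the chat endpoint is derived from base-url
// (assumption: the client appends "/v1/chat/completions" to the configured value).
final class EndpointResolver {

    static final String CHAT_PATH = "/v1/chat/completions";

    static String chatCompletionsUrl(String baseUrl) {
        // Drop trailing slashes so the result never contains "//v1/...".
        String trimmed = baseUrl.replaceAll("/+$", "");
        // A base-url ending in "/v1" would produce ".../v1/v1/chat/completions".
        if (trimmed.endsWith("/v1")) {
            throw new IllegalArgumentException("base-url should not end with /v1: " + baseUrl);
        }
        return trimmed + CHAT_PATH;
    }
}
```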

controller

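```java
import jakarta.annotation.Resource;
import org.springframework.ai.chat.ChatResponse;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.openai.OpenAiChatClient;
import org.springframework.ai.openai.OpenAiChatOptions;
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

@RestController
public class ChatController { // class name is illustrative; only the members were shown

    /**
     * Auto-configured by Spring AI, so it can be injected directly.
     */
    @Resource
    private OpenAiChatClient openAiChatClient;

    /**
     * Calls the OpenAI API with a plain string.
     *
     * @param msg the question we ask
     * @return the model's answer
     */
    @RequestMapping(value = "/ai/chat")
    public String chat(@RequestParam(value = "msg") String msg) {
        return openAiChatClient.call(msg);
    }

    /**
     * Calls the OpenAI API with a Prompt and extracts the text from the ChatResponse.
     *
     * @param msg the question we ask
     * @return the model's answer
     */
    @RequestMapping(value = "/ai/chat2")
    public Object chat2(@RequestParam(value = "msg") String msg) {
        ChatResponse chatResponse = openAiChatClient.call(new Prompt(msg));
        return chatResponse.getResult().getOutput().getContent();
    }

    /**
     * Calls the OpenAI API with per-request options.
     *
     * @param msg the question we ask
     * @return the model's answer
     */
    @RequestMapping(value = "/ai/chat3")
    public Object chat3(@RequestParam(value = "msg") String msg) {
        // If an option is set both in application.yml and in code, the value in code wins.
        ChatResponse chatResponse = openAiChatClient.call(new Prompt(msg,
                OpenAiChatOptions.builder()
                        //.withModel("gpt-4-32k") // model name; "32k" is the context window in tokens, not the parameter count
                        .withTemperature(0.4F) // higher = more varied/creative, lower = more deterministic
                        .build()));
        return chatResponse.getResult().getOutput().getContent();
    }

    /**
     * Streams the answer chunk by chunk as server-sent events.
     *
     * @param msg the question we ask
     * @return a stream of text fragments
     */
    @RequestMapping(value = "/ai/chat4", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> chat4(@RequestParam(value = "msg") String msg) {
        // Options in code override those in application.yml, as in chat3.
        Flux<ChatResponse> flux = openAiChatClient.stream(new Prompt(msg,
                OpenAiChatOptions.builder()
                        //.withModel("gpt-4-32k")
                        .withTemperature(0.4F)
                        .build()));
        // Subscribe only once and let Spring push each fragment to the client.
        // Calling flux.toStream() here would block the request thread, and
        // subscribing a second time would send a second request to OpenAI.
        return flux
                .mapNotNull(chatResponse -> chatResponse.getResult() == null
                        ? null
                        : chatResponse.getResult().getOutput().getContent())
                .doOnNext(System.out::println); // log each fragment as it arrives
    }
}
```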
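The temperature comments above are a simplification. Conceptually, temperature T rescales the model's next-token scores (logits) before the softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T). A low T sharpens the distribution, giving more deterministic, repeatable output. A high T flattens it, giving more varied output. So temperature controls randomness rather than factual accuracy as such. The toy `TemperatureDemo` class below illustrates this with made-up logits. The real sampling happens on OpenAI's servers.

```java
// Toy illustration of temperature scaling; not how any client library exposes it.
final class TemperatureDemo {

    // Softmax over logits / temperature, computed in a numerically stable way.
    static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            throw new IllegalArgumentException("temperature must be > 0");
        }
        // Subtract the largest scaled logit so Math.exp cannot overflow.
        double max = Double.NEGATIVE_INFINITY;
        for (double z : logits) {
            max = Math.max(max, z / temperature);
        }
        double[] probs = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            probs[i] = Math.exp(logits[i] / temperature - max);
            sum += probs[i];
        }
        // Normalize so the probabilities sum to 1.
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }
}
```

With logits `{2.0, 1.0, 0.1}`, the top token's probability is noticeably higher at T = 0.4 than at T = 1.5. This is why low temperatures give more repeatable answers.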

2. Image Generation

```yaml
spring:
  ai:
    openai:
      api-key: ${api-key}
      base-url: ${base-url}
      image:
        options:
          model: gpt-4-dalle
          quality: hd
          n: 1
          height: 1024
          width: 1024
```

controller

```java
package com.bjpowernode.controller;

import jakarta.annotation.Resource;
import org.springframework.ai.image.ImagePrompt;
import org.springframework.ai.image.ImageResponse;
import org.springframework.ai.openai.OpenAiImageClient;
import org.springframework.ai.openai.OpenAiImageOptions;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class ImageController {

    @Resource
    private OpenAiImageClient openAiImageClient;

    // Uses the image options from application.yml.
    @RequestMapping("/ai/image")
    public Object image(@RequestParam(value = "msg") String msg) {
        ImageResponse imageResponse = openAiImageClient.call(new ImagePrompt(msg));
        System.out.println(imageResponse);
        String imageUrl = imageResponse.getResult().getOutput().getUrl();
        // apply your own business processing to the image (via imageUrl) here
        return imageResponse.getResult().getOutput();
    }

    // Sets the options in code, overriding application.yml.
    @RequestMapping("/ai/image2")
    public Object image2(@RequestParam(value = "msg") String msg) {
        ImageResponse imageResponse = openAiImageClient.call(new ImagePrompt(msg,
                OpenAiImageOptions.builder()
                        .withQuality("hd") // high-definition image
                        .withN(1)          // generate 1 image
                        .withHeight(1024)  // image height in pixels
                        .withWidth(1024)   // image width in pixels
                        .build()));
        System.out.println(imageResponse);
        String imageUrl = imageResponse.getResult().getOutput().getUrl();
        // apply your own business processing to the image (via imageUrl) here
        return imageResponse.getResult().getOutput();
    }
}
```
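By default, the controller above gets back an image URL (`getUrl()`). Such URLs are temporary, so the "business processing" step often means saving the image. The OpenAI image API can instead return base64 data (`response_format` = `b64_json`). I believe Spring AI exposes this through a response-format option on `OpenAiImageOptions` and through `Image.getB64Json()`, but verify this against your version. The sketch below covers only the JDK side: decoding a base64 string and writing it to disk. `Base64ImageSaver` is a hypothetical name.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

// Hypothetical helper: persist an image delivered as a base64 string.
final class Base64ImageSaver {

    static Path save(String b64, Path target) {
        // Throws IllegalArgumentException if the input is not valid base64.
        byte[] bytes = Base64.getDecoder().decode(b64);
        try {
            // Create missing parent directories, then write (overwriting any existing file).
            Path parent = target.toAbsolutePath().getParent();
            if (parent != null) {
                Files.createDirectories(parent);
            }
            return Files.write(target, bytes);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```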

3. Speech to Text (Transcription)

```yaml
spring:
  ai:
    openai:
      api-key: ${api-key}
      base-url: ${base-url}
```

controller

```java
package com.bjpowernode.controller;

import jakarta.annotation.Resource;
import org.springframework.ai.openai.OpenAiAudioTranscriptionClient;
import org.springframework.core.io.ClassPathResource;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class TranscriptionController {

    @Resource
    private OpenAiAudioTranscriptionClient openAiAudioTranscriptionClient;

    @RequestMapping(value = "/ai/transcription")
    public Object transcription() {
        // Spring's Resource is fully qualified because jakarta.annotation.Resource is imported.
        // The audio file is loaded from the classpath (e.g. src/main/resources).
        //org.springframework.core.io.Resource audioFile = new ClassPathResource("jfk.flac");
        org.springframework.core.io.Resource audioFile = new ClassPathResource("cat.mp3");
        String called = openAiAudioTranscriptionClient.call(audioFile);
        System.out.println(called);
        return called;
    }
}
```
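OpenAI's transcription endpoint accepts only certain audio formats and limits the upload size. At the time of writing, the docs list flac, mp3, mp4, mpeg, mpga, m4a, ogg, wav and webm, with a 25 MB limit. A cheap pre-check before calling `openAiAudioTranscriptionClient.call(...)` avoids a round trip that is bound to fail. `AudioPrecheck` is a hypothetical helper. Its format list and size limit reflect my reading of OpenAI's docs and should be re-checked.

```java
import java.util.Locale;
import java.util.Set;

// Hypothetical pre-check before sending audio to the transcription endpoint.
final class AudioPrecheck {

    // File types listed in OpenAI's speech-to-text docs (re-check the current docs).
    static final Set<String> SUPPORTED =
            Set.of("flac", "mp3", "mp4", "mpeg", "mpga", "m4a", "ogg", "wav", "webm");

    // Documented upload limit of 25 MB, interpreted here as 25 MiB (assumption).
    static final long MAX_BYTES = 25L * 1024 * 1024;

    static boolean isAcceptable(String filename, long sizeInBytes) {
        int dot = filename.lastIndexOf('.');
        if (dot < 0 || dot == filename.length() - 1) {
            return false; // no extension
        }
        String ext = filename.substring(dot + 1).toLowerCase(Locale.ROOT);
        return SUPPORTED.contains(ext) && sizeInBytes > 0 && sizeInBytes <= MAX_BYTES;
    }
}
```

In the controller, you could first call `AudioPrecheck.isAcceptable(audioFile.getFilename(), audioFile.contentLength())`. Note that `contentLength()` can throw `IOException`.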

4. Text to Speech

```java
package com.bjpowernode.controller;

import com.bjpowernode.util.FileUtils;
import jakarta.annotation.Resource;
import org.springframework.ai.openai.OpenAiAudioSpeechClient;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class TTSController {

    @Resource
    private OpenAiAudioSpeechClient openAiAudioSpeechClient;

    // Chinese sample text (a short passage on 2023 global car sales)
    @RequestMapping(value = "/ai/tts")
    public Object tts() {
        String text = "2023年全球汽车销量重回9000万辆大关,同比2022年增长11%。分区域看,西欧(14%)、中国(12%)两大市场均实现两位数增长。面对这样亮眼的数据,全球汽车行业却都对2024年的市场前景表示悲观,宏观数据和企业体感之间的差异并非中国独有,在汽车市场中,这是共性问题。";
        byte[] bytes = openAiAudioSpeechClient.call(text); // synthesized audio as raw bytes
        FileUtils.save2File("D:\\SpringAI\\test.mp3", bytes);
        return "OK";
    }

    // English sample text
    @RequestMapping(value = "/ai/tts2")
    public Object tts2() {
        String text = "Spring AI is an application framework for AI engineering. Its goal is to apply to the AI domain Spring ecosystem design principles such as portability and modular design and promote using POJOs as the building blocks of an application to the AI domain.";
        byte[] bytes = openAiAudioSpeechClient.call(text);
        FileUtils.save2File("D:\\SpringAI\\test2.mp3", bytes);
        return "OK";
    }
}
```

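```java
package com.bjpowernode.util;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;

public class FileUtils {

    /**
     * Writes the bytes to the given file, creating parent directories if needed.
     *
     * @param fname target file path
     * @param msg   content to write
     * @return true on success, false if the file could not be written
     */
    public static boolean save2File(String fname, byte[] msg) {
        File file = new File(fname);
        File parent = file.getParentFile(); // null for a bare relative file name
        if (parent != null && !parent.exists() && !parent.mkdirs()) {
            return false;
        }
        // try-with-resources closes the stream even if write() fails
        try (OutputStream fos = new FileOutputStream(file)) {
            fos.write(msg);
            fos.flush();
            return true;
        } catch (IOException e) { // also covers FileNotFoundException
            e.printStackTrace();
            return false;
        }
    }
}
```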
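OpenAI's text-to-speech endpoint caps the input length, at 4096 characters per request according to OpenAI's docs at the time of writing. Long articles therefore have to be sent in pieces. Below is a minimal sketch of a hypothetical `TtsChunker` helper. It splits text into chunks no longer than a given limit, counted in Java `char`s. It prefers to cut after sentence-ending punctuation, Chinese or English, and hard-cuts only when a single sentence exceeds the limit.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: split text into chunks of at most maxChars characters.
final class TtsChunker {

    // Sentence terminators after which a cut is preferred (Chinese and ASCII).
    private static final String TERMINATORS = "。！？.!?";

    static List<String> split(String text, int maxChars) {
        if (maxChars <= 0) {
            throw new IllegalArgumentException("maxChars must be > 0");
        }
        List<String> chunks = new ArrayList<>();
        int start = 0;
        while (start < text.length()) {
            int end = Math.min(start + maxChars, text.length());
            if (end < text.length()) {
                // Search backwards for the last terminator inside the window.
                int cut = -1;
                for (int i = end - 1; i > start; i--) {
                    if (TERMINATORS.indexOf(text.charAt(i)) >= 0) {
                        cut = i + 1;
                        break;
                    }
                }
                if (cut != -1) {
                    end = cut; // otherwise hard-cut at maxChars
                }
            }
            chunks.add(text.substring(start, end));
            start = end;
        }
        return chunks;
    }
}
```

Each chunk can then be passed to `openAiAudioSpeechClient.call(chunk)` and saved with `FileUtils.save2File`. Writing one file per chunk is the safer choice, because simply concatenating the returned byte arrays is not guaranteed to produce a valid audio file.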

5. Multimodal Q&A (Text + Image)

```java
package com.bjpowernode.controller;

import jakarta.annotation.Resource;
import org.springframework.ai.chat.ChatResponse;
import org.springframework.ai.chat.messages.Media;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.openai.OpenAiChatClient;
import org.springframework.ai.openai.OpenAiChatOptions;
import org.springframework.ai.openai.api.OpenAiApi;
import org.springframework.util.MimeTypeUtils;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import java.util.List;

@RestController
public class MultiModelController {

    // Inject the concrete OpenAI client: with both the OpenAI and Ollama starters on the
    // classpath there are two ChatClient beans, so injecting the interface is ambiguous.
    @Resource
    private OpenAiChatClient chatClient;

    @RequestMapping(value = "/ai/multi")
    public Object multi(String msg, String imageUrl) {
        // Text question plus an image; the MIME type should match the actual image.
        UserMessage userMessage = new UserMessage(msg,
                List.of(new Media(MimeTypeUtils.IMAGE_PNG, imageUrl)));
        ChatResponse response = chatClient.call(new Prompt(userMessage,
                OpenAiChatOptions.builder()
                        .withModel(OpenAiApi.ChatModel.GPT_4_VISION_PREVIEW.getValue()) // vision-capable model
                        .build()));
        System.out.println(response.getResult().getOutput());
        return response.getResult().getOutput().getContent();
    }
}
```
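The example passes a public image URL. For a local image, OpenAI's vision input also accepts a base64 data URL of the form `data:<mime>;base64,<data>`. The hypothetical `DataUrls` helper below builds one with the JDK. Spring AI 0.8.x's OpenAI client appears to perform this conversion itself when `Media` receives a `byte[]` instead of a `String`, but treat that as an assumption to verify.

```java
import java.util.Base64;

// Hypothetical helper: encode raw image bytes as a data URL.
final class DataUrls {

    static String of(String mimeType, byte[] bytes) {
        return "data:" + mimeType + ";base64," + Base64.getEncoder().encodeToString(bytes);
    }
}
```

For example, `DataUrls.of("image/png", Files.readAllBytes(path))` yields a string that can be passed as the `imageUrl` argument above.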

Approach 2: Ollama

Besides the OpenAI API, Spring AI also supports Ollama. Ollama is an open-source project on GitHub for running, creating, and sharing large language models, and it makes it easy to start and run LLMs locally.

Ollama can be downloaded for Windows. Linux works just as well; I use Windows here for convenience.

Once it is installed, open a terminal and pick a model. The available models are listed at:

https://ollama.com/library

```shell
ollama run llama2-chinese
```

Then configure application.yml:

```yaml
spring:
  ai:
    ollama:
      base-url: http://127.0.0.1:11434
      chat:
        # must match the model name used in "ollama run llama2-chinese" exactly
        model: llama2-chinese
```

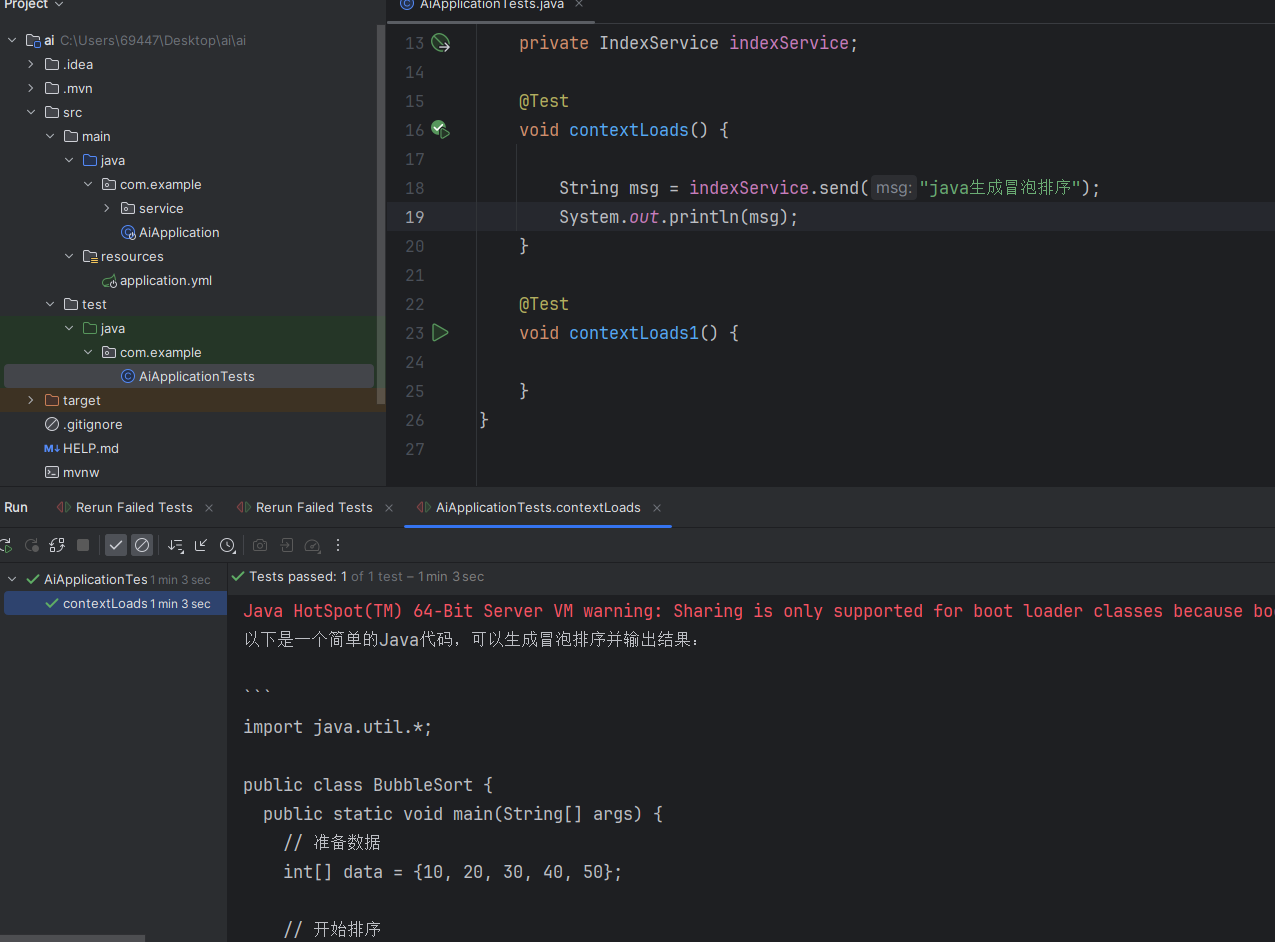

IndexServiceImpl

```java
package com.example.service.impl;

import com.example.service.IndexService;
import org.springframework.ai.ollama.OllamaChatClient;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class IndexServiceImpl implements IndexService {

    @Autowired
    private OllamaChatClient ollamaChatClient;

    @Override
    public String send(String msg) {
        /*
        // Alternative: set options per request
        ChatResponse chatResponse = ollamaChatClient.call(new Prompt(msg, OllamaOptions.create()
                .withModel("qwen:0.5b-chat") // which model to use
                .withTemperature(0.4F)));    // higher = more random, lower = more deterministic
        System.out.println(chatResponse.getResult().getOutput().getContent());
        return chatResponse.getResult().getOutput().getContent();
        */
        // Streaming alternative (requires a Flux<ChatResponse> return type):
        // Prompt prompt = new Prompt(new UserMessage(msg));
        // return ollamaChatClient.stream(prompt);
        return ollamaChatClient.call(msg);
    }
}
```
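`OllamaChatClient` talks to Ollama's local REST API, and for debugging it can help to call that API directly. The sketch below uses the JDK HTTP client and assumes Ollama's `/api/chat` endpoint with `"stream": false`, which returns a single JSON object instead of a stream of chunks. It uses the base URL and model from the configuration above. `OllamaRawRequest` is a hypothetical name. Unlike the OpenAI request, a local Ollama needs no API-key header.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: build (not send) a non-streaming chat request for Ollama.
final class OllamaRawRequest {

    static HttpRequest build(String baseUrl, String model, String userMessage) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/api/chat"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json(model, userMessage)))
                .build();
    }

    // JSON body for /api/chat with streaming disabled.
    static String json(String model, String userMessage) {
        return "{\"model\":\"" + esc(model) + "\",\"stream\":false,"
                + "\"messages\":[{\"role\":\"user\",\"content\":\"" + esc(userMessage) + "\"}]}";
    }

    // Minimal escaping for the common cases; prefer a JSON library in real code.
    static String esc(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }
}
```

Sending it with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` should return JSON whose `message.content` field holds the reply.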

Deploying Ollama on Linux (with Docker)

```shell
docker run -d -v /root/ollama:/root/.ollama -e OLLAMA_ORIGINS='*' -p 11434:11434 --name ollama ollama/ollama
```

Open WebUI

https://github.com/open-webui/open-webui

lobe-chat

https://github.com/lobehub/lobe-chat

```shell
docker run -d -p 31526:3210 -e OPENAI_API_KEY=sk-xxxx -e ACCESS_CODE=lobe66 --name lobe-chat lobehub/lobe-chat
```

Copyright belongs to the original author, 刘明同学呀. In case of infringement, please contact us and it will be removed.